Week 2: Machine Learning, Training Data, and Biases

DSAN 5450: Data Ethics and Policy

Spring 2026, Georgetown University

Schedule

Today’s Planned Schedule:

| | Start | End | Topic |
|---|---|---|---|
| Lecture | 3:30pm | 4:00pm | Making and Evaluating Ethical Arguments → |
| | 4:00pm | 4:10pm | Key Takeaways for Policy Whitepapers → |
| | 4:10pm | 4:50pm | Data Ethics + Policy Conceptual Toolkit → |
| Break! | 4:50pm | 5:00pm | |
| | 5:00pm | 5:30pm | Context-Free (Naïve) Fairness (HW1) → |
| | 5:30pm | 6:00pm | 🚫 “Fairness Through Unawareness” 🙅 (HW1) → |

Machine Learning at 30,000 Feet

Three Component Parts of Machine Learning

- A cool algorithm 😎😍

- [Possibly benign but possibly biased] Training data ❓🧐

- Exploitation of below-minimum-wage human labor 😞🤐 (Dube et al. 2020, like and subscribe y'all ❤️)

A Cool Algorithm 😎😍

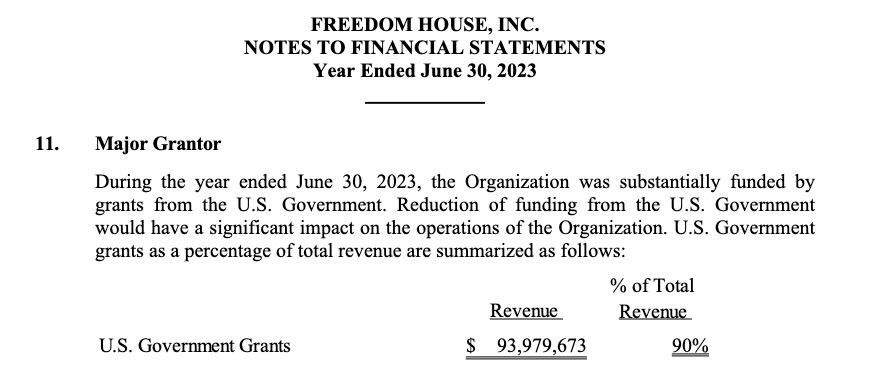

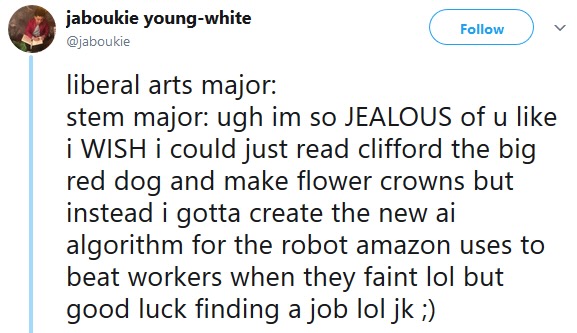

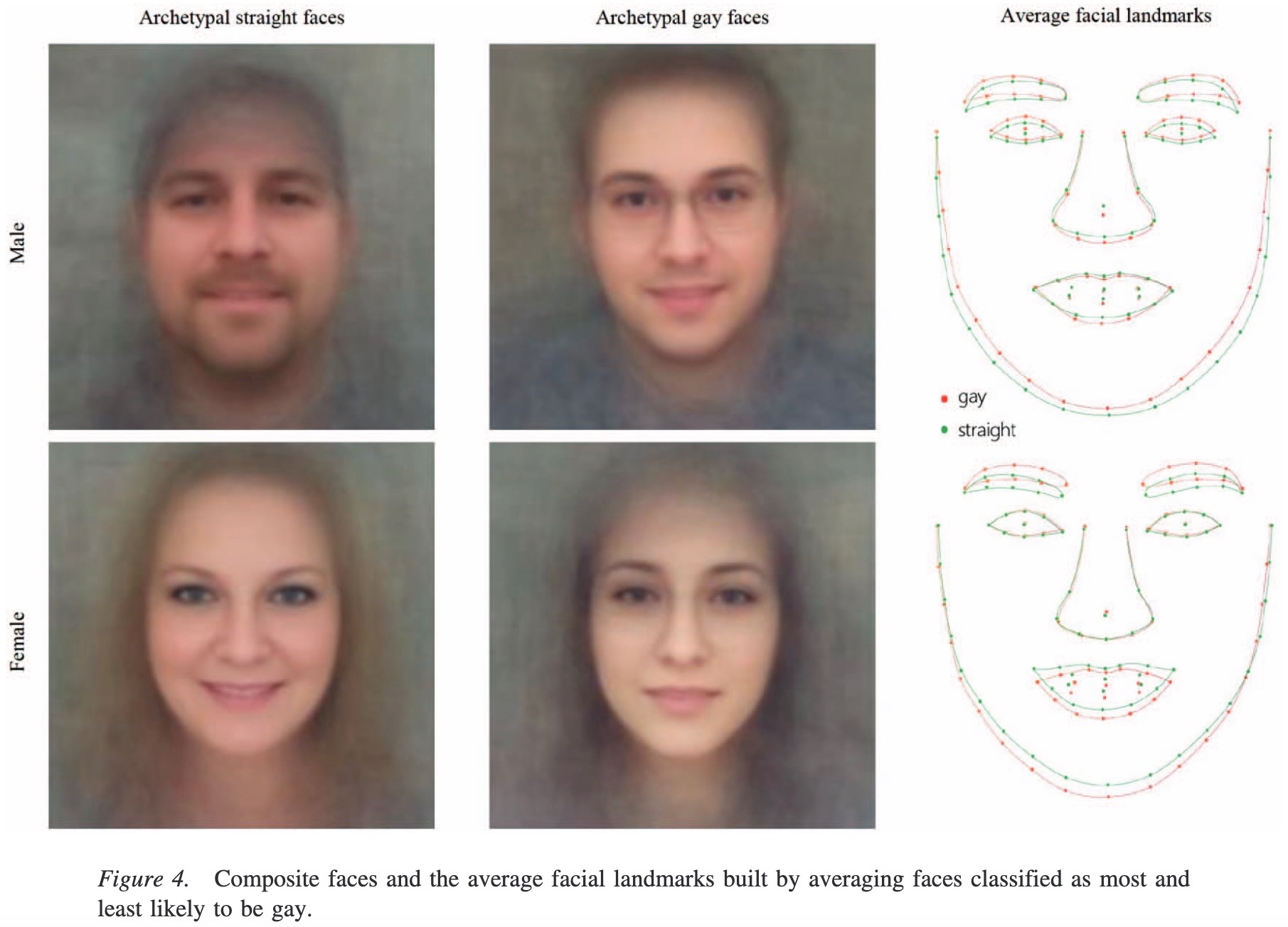

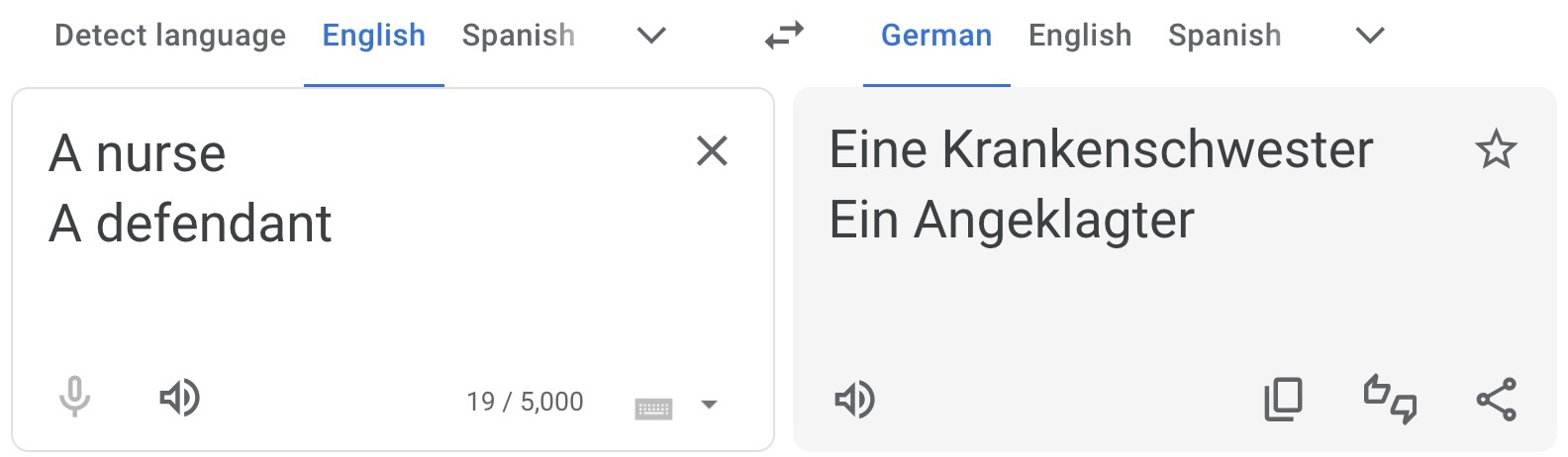

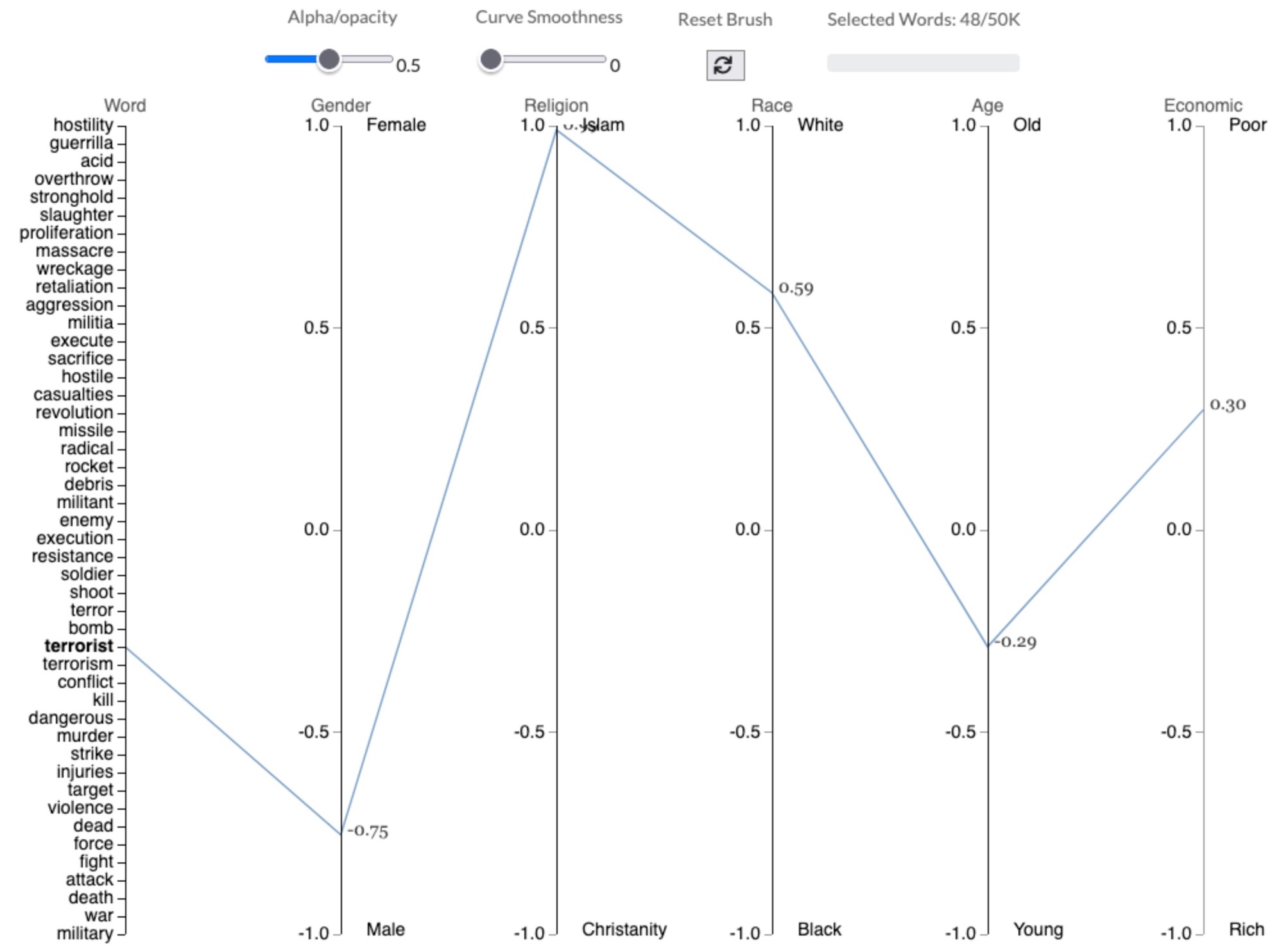

Training Data With Acknowledged Bias

- One potentially fruitful approach to fairness: since we can’t eliminate bias, bring it out into the open and study it!

- This can, at the very least, help us brainstorm how we might “correct” for it (next slides!)

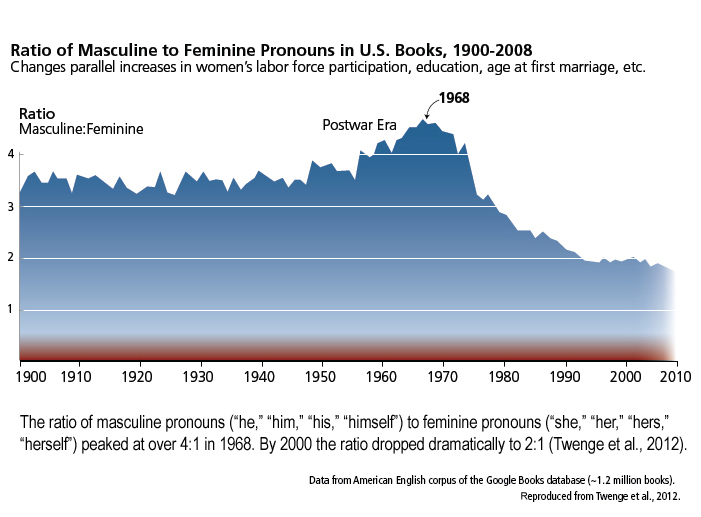

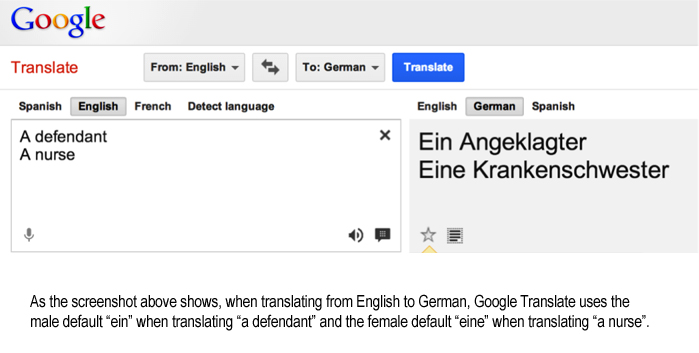

From Gendered Innovations in Science, Health & Medicine, Engineering, and Environment

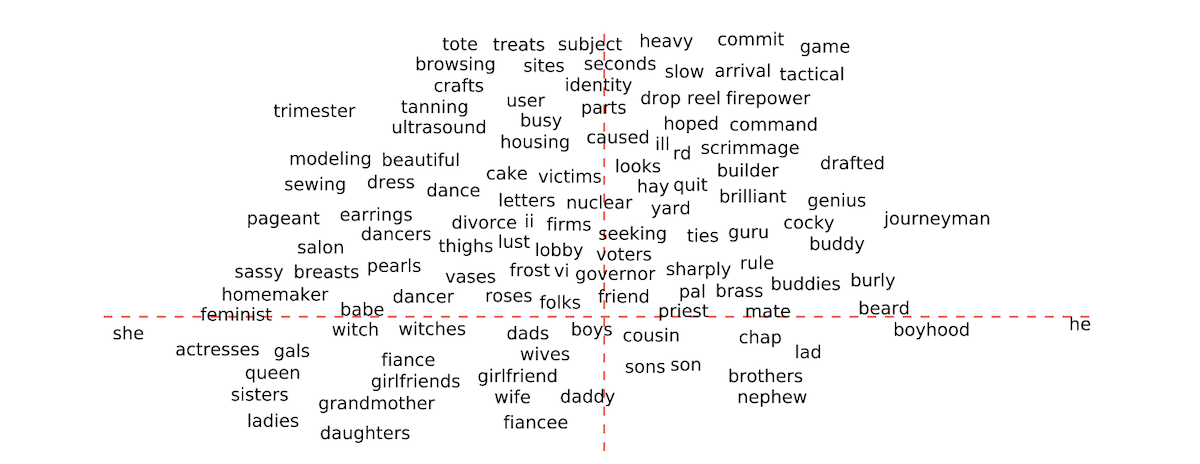

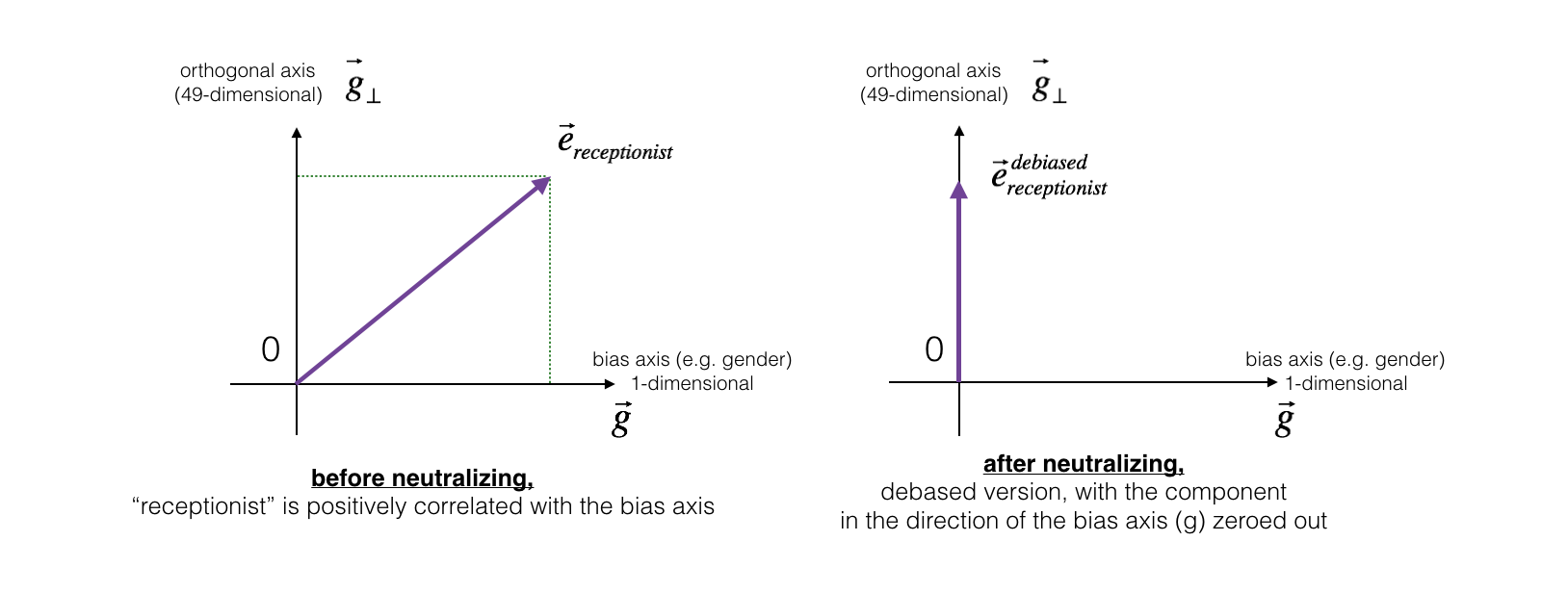

Word Embeddings

- Notice how the \(x\)-axis has been selected by the researcher specifically to draw out (one) gendered dimension of language!

- \(\overrightarrow{\texttt{she}}\) mapped to \(\langle -1,0\rangle\), \(\overrightarrow{\texttt{he}}\) mapped to \(\langle 1,0 \rangle\), others projected onto this dimension
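A minimal sketch of the projection described above, assuming tiny hypothetical 3-dimensional embeddings (the `EMBEDDINGS` table and the helper names are made up for illustration; real embeddings come from a trained model and have far more dimensions). The axis runs from \(\overrightarrow{\texttt{she}}\) to \(\overrightarrow{\texttt{he}}\), and each word's coordinate along it is rescaled so that `she` lands at -1 and `he` at +1.

```python
# Hypothetical toy 3-d vectors, NOT taken from any real embedding model
EMBEDDINGS = {
    "she": [-1.0, 0.5, 0.2],
    "he": [1.0, 0.5, 0.2],
    "nurse": [-0.6, 0.9, 0.1],
    "engineer": [0.7, 0.8, 0.3],
    "table": [0.0, -0.4, 0.9],
}


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def sub(u, v):
    return [a - b for a, b in zip(u, v)]


def gender_coordinate(word, emb=EMBEDDINGS):
    """Project emb[word] onto the she->he axis, rescaled so she -> -1 and he -> +1."""
    she, he = emb["she"], emb["he"]
    axis = sub(he, she)                                   # direction pointing from "she" to "he"
    t = dot(sub(emb[word], she), axis) / dot(axis, axis)  # 0 at "she", 1 at "he"
    return 2 * t - 1                                      # rescale [0, 1] to [-1, 1]


if __name__ == "__main__":
    for w in EMBEDDINGS:
        print(f"{w:>9}: {gender_coordinate(w):+.2f}")
```

The researcher's choice of the two anchor words fixes what this single number means; a different anchor pair would draw out a different dimension of the same vectors.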

Removing vs. Studying Biases

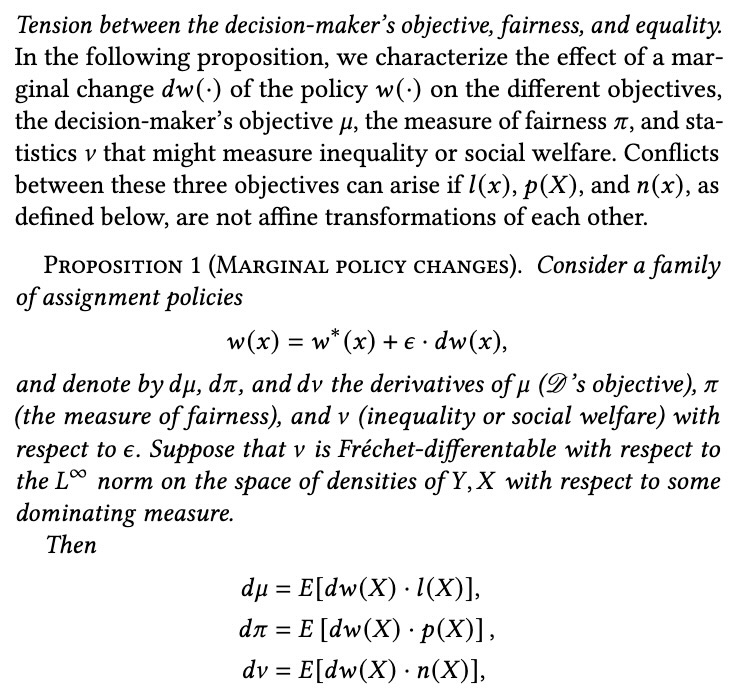

Context-Free Fairness

- Who Remembers 🎉Confusion Matrices!!!🎉

- Terrifyingly higher stakes than in DSAN 5000! Now \(D = 1\) could literally mean “shoot this person” or “throw this person in jail for life”
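As a refresher, a sketch of the four confusion-matrix cells computed from paired true outcomes \(Y\) and decisions \(D\), both assumed to be coded 0/1. The helper names and toy data are hypothetical; this hand-rolled `confusion_matrix` is not scikit-learn's function of the same name.

```python
from collections import Counter


def confusion_matrix(y_true, decisions):
    """Count (Y, D) pairs: Y = true outcome, D = decision (1 = positive decision)."""
    counts = Counter(zip(y_true, decisions))  # missing pairs count as 0
    return {
        "TP": counts[(1, 1)],  # D = 1 and Y = 1
        "FP": counts[(0, 1)],  # D = 1 but Y = 0 (e.g., jailing someone who did not reoffend)
        "FN": counts[(1, 0)],  # D = 0 but Y = 1
        "TN": counts[(0, 0)],  # D = 0 and Y = 0
    }


def rates(cm):
    """True-positive rate and false-positive rate derived from the four counts."""
    pos, neg = cm["TP"] + cm["FN"], cm["FP"] + cm["TN"]
    tpr = cm["TP"] / pos if pos else float("nan")
    fpr = cm["FP"] / neg if neg else float("nan")
    return tpr, fpr


if __name__ == "__main__":
    # Hypothetical toy outcomes and decisions, for illustration only
    y = [1, 0, 1, 1, 0, 0, 1, 0]
    d = [1, 1, 0, 1, 0, 0, 1, 0]
    cm = confusion_matrix(y, d)
    print(cm, rates(cm))
```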

Categories of Fairness Criteria

Roughly, approaches to fairness/bias in AI can be categorized as follows:

- Single-Threshold Fairness

- Equal Prediction

- Equal Decision

- Fairness via Similarity Metric(s)

- Causal Definitions

- [Week 3] Context-Free Fairness: Easier to grasp from CS/data science perspective; rooted in the “language” of ML (you already know much of it, given DSAN 5000!)
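A rough sketch of how two of these categories can come apart. We assume here that “Single-Threshold Fairness” means applying the same cutoff to everyone's risk score, and read “Equal Decision” as comparing the share of each group that receives \(D = 1\); these readings, the scores, and the function names are illustrative assumptions, not the course's formal definitions.

```python
# Hypothetical risk scores for two groups; illustrative only, not real data
SCORES = {
    "a": [0.9, 0.7, 0.4, 0.2],
    "b": [0.8, 0.5, 0.3, 0.1, 0.05],
}


def decide(scores, threshold):
    """Single threshold: D = 1 iff score >= threshold, with the same cutoff for every group."""
    return [1 if s >= threshold else 0 for s in scores]


def positive_rates(scores_by_group, threshold):
    """Share of each group that receives D = 1 under the shared threshold."""
    return {
        group: sum(decide(scores, threshold)) / len(scores)
        for group, scores in scores_by_group.items()
    }


if __name__ == "__main__":
    print(positive_rates(SCORES, threshold=0.5))
```

With these made-up scores, one shared threshold of 0.5 gives group a a positive-decision rate of 0.5 and group b a rate of 0.4, so satisfying the first criterion does not by itself guarantee the second.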

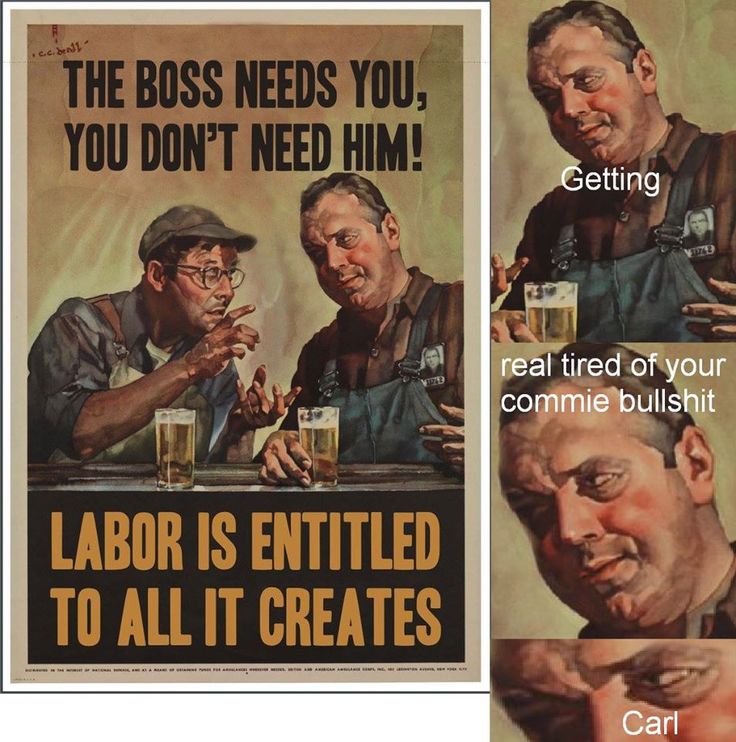

- But easy-to-grasp notion \(\neq\) “good” notion!

- Your job: push yourself to (a) consider what gets left out of the context-free definitions, and (b) identify the loopholes this omission introduces into them, whereby people/computers can discriminate while remaining “technically fair”
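To make point (b) concrete, a toy sketch of one such loophole, using hypothetical applicants and a deliberately rigged, hypothetical policy: acceptance rates come out equal across groups, so a check on decision rates alone passes, yet the policy accepts only the qualified members of group a and only the unqualified members of group b.

```python
# Hypothetical applicants as (group, qualified) pairs; illustrative only, not real data
APPLICANTS = [
    ("a", True), ("a", True), ("a", False), ("a", False),
    ("b", True), ("b", True), ("b", False), ("b", False),
]


def rigged_policy(group, qualified):
    """Hypothetical policy: returns decision D (1 = accept)."""
    if group == "a":
        return 1 if qualified else 0  # group a: accept exactly the qualified
    return 0 if qualified else 1      # group b: accept exactly the unqualified


def rate(decisions):
    """Fraction of 1s in a list of 0/1 decisions (nan if the list is empty)."""
    return sum(decisions) / len(decisions) if decisions else float("nan")


def audit(applicants, policy):
    """Per-group acceptance rate, plus acceptance rate among qualified members only."""
    report = {}
    for group in sorted({g for g, _ in applicants}):
        members = [(g, q) for g, q in applicants if g == group]
        report[group] = {
            # P(D = 1 | group): the only thing a rate-equality check looks at
            "acceptance_rate": rate([policy(g, q) for g, q in members]),
            # P(D = 1 | qualified, group): where the discrimination shows up
            "qualified_acceptance_rate": rate([policy(g, q) for g, q in members if q]),
        }
    return report


if __name__ == "__main__":
    for group, stats in audit(APPLICANTS, rigged_policy).items():
        print(group, stats)
```

Both groups are accepted at a rate of 0.5, but qualified applicants in group b are never accepted; a criterion that only compares acceptance rates cannot see this.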

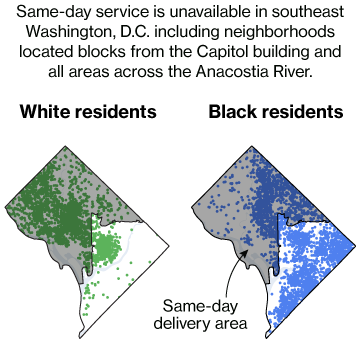

Laws: Often Perfectly “Technically Fair” (Context-Free Fairness)

Ah, la majestueuse égalité des lois, qui interdit au riche comme au pauvre de coucher sous les ponts, de mendier dans les rues et de voler du pain!

(Ah, the majestic equality of the law, which prohibits rich and poor alike from sleeping under bridges, begging in the streets, and stealing loaves of bread!)

Anatole France, Le Lys Rouge (France 1894)

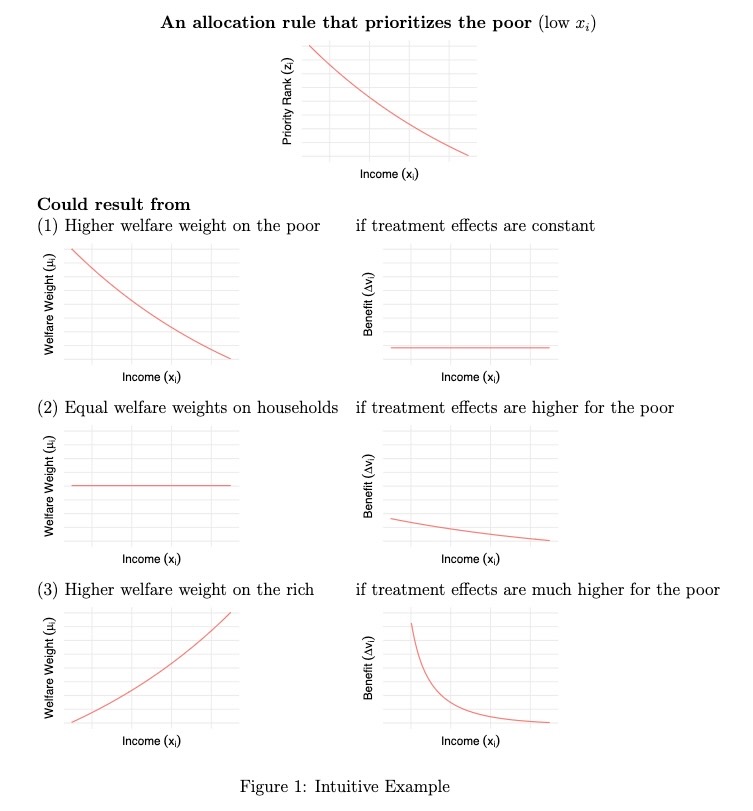

Context-Sensitive Fairness… 🧐

Decisions at Individual Level (Micro)

\(\leadsto\)

Emergent Properties (Macro)

…Enables INVERSE Fairness 🤯

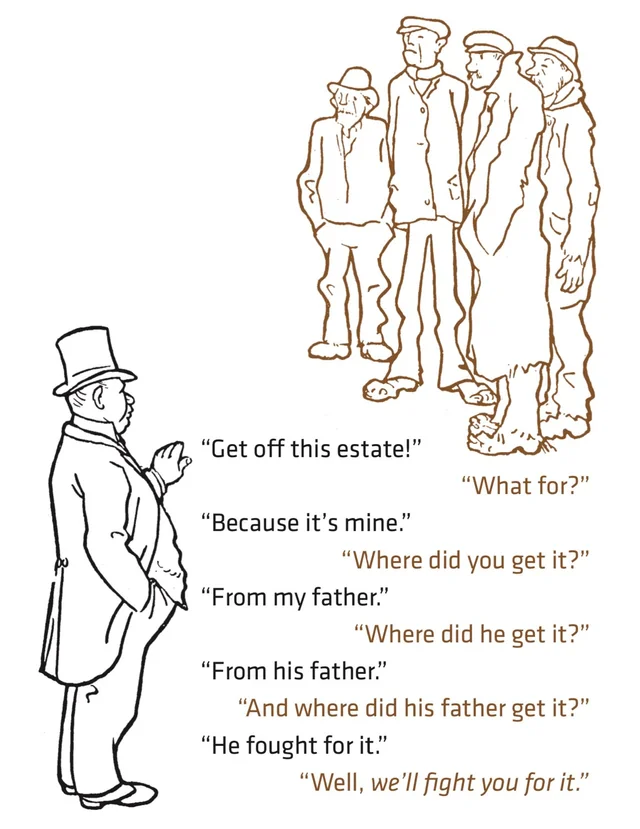

Context-Sensitive Fairness \(\Leftrightarrow\) Unraveling History

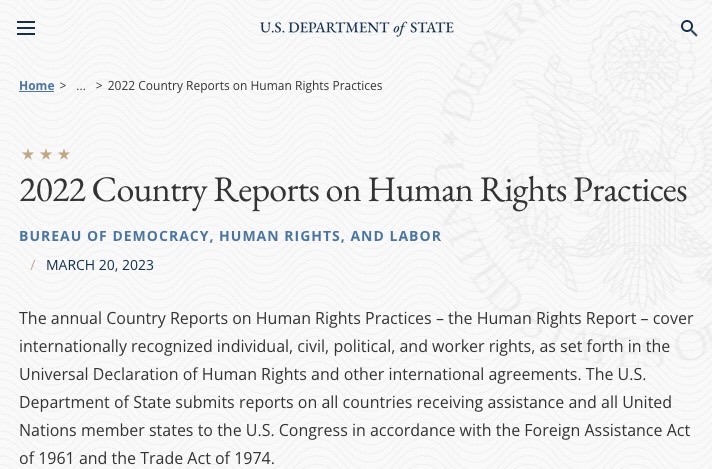

News: “A litany of events with no beginning or end, thrown together because they occurred at the same time, cut off from antecedents and consequences” (Bourdieu 2010)

Do media outlets optimize for explaining? Understanding?

Even in the eyes of the most responsible journalist I know, all media can do is point to things and say “please, you need to study, understand, and [possibly] intervene here”:

If we [journalists] have any reason for our existence, it must be our ability to report history as it happens, so that no one will be able to say, “We’re sorry, we didn’t know—no one told us.” (Fisk 2005)

Unraveling History

(Someday I will do something with this)

In the long evenings in West Beirut, there was time enough to consider where the core of the tragedy lay. In the age of Assyrians, the Empire of Rome, in the 1860s perhaps? In the French Mandate? In Auschwitz? In Palestine? In the rusting front-door keys now buried deep in the rubble of Shatila? In the 1978 Israeli invasion? In the 1982 invasion? Was there a point where one could have said: Stop, beyond this point there is no future? Did I witness the point of no return in 1976? That 12-year-old on the broken office chair in the ruins of the Beirut front line? Now he was, in his mid-twenties (if he was still alive), a gunboy no more. A gunman, no doubt… (Fisk 1990)

Context-Sensitive Fairness \(\Leftrightarrow\) Unraveling History

(Reminder: Miracle of Immaculate Genocide)

![From Cheng (2018) The Art of Logic [plz watch if you can!]](images/cheng_plane.jpg)