
As an opening disclaimer: recall that we know from statistical theory (e.g., the material that forms the basis of DSAN5100) that, given a population of size \(\nu\) (“nu”, the Greek version of \(n\)), even if we draw much smaller samples of size \(n \ll \nu\), we can obtain fairly accurate estimates of some property of the population, and these estimates improve as \(n \rightarrow \nu\).

So, given that framework, and our goal of laying out operationalization as clearly as possible, you can keep in mind here that:

Studies of drug usage, drawing on a range of anonymized surveys, have slowly started to come up with estimates of population drug usage by self-reported race, which tend to find that the rate of narcotics usage is slightly higher for the white population than for the black population in the US (left panel):

Mathematically, then, let’s start laying out our axioms or antecedents, that we’ll work with in building up descriptive definitions of fairness.

Let \(\overline{\Pi}\) represent the entire population of the US, so that e.g. \(\Pr_{\overline{\Pi}}(E)\) represents the probability that a randomly-chosen person from the population satisfies event \(E\). Let \(\overline{\mathcal{W}}\) and \(\overline{\mathcal{B}}\) represent the white and black populations of the US respectively (recall from e.g. DSAN5100 the use of Greek letters, or at least curly capitalized Latin letters, to represent population parameters! And, as for why they have \(\overline{\text{overlines}}\) above them, read onwards).

Let’s first attempt (and fail) to define a Random Variable \(\widetilde{A}\) (short for “Protected Attribute” in this case) representing the race self-reported to the US Census for a randomly-chosen person from the population of the US (\(\overline{\Pi}\)), such that

\[ \widetilde{A} = \begin{cases} 0 &\text{if Self-Reported White} \\ 1 &\text{if Self-Reported Black} \end{cases} \]

Why is there a tilde (~) above the \(A\) there (and why does this fail to serve as a valid Random Variable as defined in probability theory)? Because, before we can even get off the ground, we have to have the background knowledge that individuals responding to the Census’ questions can list more than one race: there are many individuals in the US for whom the event \(\widetilde{A} = 0\) and \(\widetilde{A} = 1\) both occur if they happen to be the randomly-chosen person. Thus, since Random Variables are by definition:

\(\widetilde{A}\) is straightforwardly not a valid Random Variable—the conditions of the Kolmogorov axioms, which enable expressions in probability theory to be “true” in the same way that the ZFC axioms enable \(1 + 1 = 2\) to be “true”, do not permit non-mutually-exclusive outcomes.

So, if we simply ignore this fact and “jump” directly to white vs. black in the way that this is usually done—a jump that, admittedly, we made ourselves in Question 4 of HW1—this should trigger a “red flag” in your mind, with respect to the question from the Operationalization slides about, “is this variable really measuring what it says it is measuring?”

To address this issue, at minimum, we’ll need to define two Random Variables \(B\) and \(W\), such that

\[ \begin{align*} B &= \begin{cases} 0 &\text{if Did Not Self-Report Black} \\ 1 &\text{if Self-Reported Black} \end{cases} \\ W &= \begin{cases} 0 &\text{if Did Not Self-Report White} \\ 1 &\text{if Self-Reported White} \end{cases} \end{align*} \]

So that now \(B = 1\) and \(W = 1\) can both be true for an individual, which does not violate any Kolmogorov axioms!
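To make the contrast concrete, here is a minimal sketch on a handful of made-up respondents. The `respondents` list and the helper names `a_tilde_values` and `indicator` are hypothetical and not part of the course code. It shows that \(\widetilde{A}\) fails to assign exactly one value to some people, while \(B\) and \(W\) always do.

```python
# Hypothetical toy respondents: each reports a set of races (illustrative only)
respondents = [
    {"id": 1, "races": {"White"}},
    {"id": 2, "races": {"Black"}},
    {"id": 3, "races": {"White", "Black"}},
    {"id": 4, "races": {"Asian"}},
]

def a_tilde_values(races):
    """All values the 'tilde' variable would have to take for one person."""
    values = set()
    if "White" in races:
        values.add(0)
    if "Black" in races:
        values.add(1)
    return values

def indicator(races, label):
    """A valid 0/1 Random Variable: exactly one value per person."""
    return 1 if label in races else 0

# A-tilde is not a function: some people map to two values, others to none
ill_defined_ids = [r["id"] for r in respondents if len(a_tilde_values(r["races"])) != 1]

# B and W each assign exactly one value to every person
B = [indicator(r["races"], "Black") for r in respondents]
W = [indicator(r["races"], "White") for r in respondents]
print(ill_defined_ids, B, W)
```

Respondent 3 would need both \(\widetilde{A} = 0\) and \(\widetilde{A} = 1\), and respondent 4 would get no value at all, whereas the two indicator lists have one entry per person.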

Then, we can read more into the methodology that the Hamilton Project/Brookings study cited above uses, to find that they

So, solely for the (descriptive) purpose of matching their provided data on drug usage with base rate information from the Census (see below), we’ll now define a non-tilde version of \(A\) which is what the two bars in the above plots really represent. Letting

\[ S = \begin{cases} 0 &\text{if Multiple Self-Reported Races} \\ 1 &\text{if Single Self-Reported Race} \end{cases} \]

we can now handle the first bullet point by defining a new sub-population \(\Sigma \subset \overline{\Pi}\) consisting of all Census respondents who self-reported only one race, i.e., all Census respondents in \(\overline{\Pi}\) for whom \(S = 1\).

But, to handle the second bullet point, we need to define a second sub-population \(\Pi \subset \Sigma \subset \overline{\Pi}\), of those individuals in \(\Sigma\) for whom \(W = 1\) or \(B = 1\).

It is with respect to this second sub-population \(\Pi\) that we can now finally define a valid binary Random Variable \(A\) as

\[ A = \begin{cases} 0 &\text{if }W = 1 \\ 1 &\text{if }B = 1 \end{cases} \]

where we need to keep in mind that \(A\) is only well-defined with respect to \(\Pi \subset \Sigma \subset \overline{\Pi}\).
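The nesting \(\Pi \subset \Sigma \subset \overline{\Pi}\) can be sketched as two successive filters. The records below are invented purely for illustration, and the variable names `sigma` and `pi` are assumptions of this sketch.

```python
# Hypothetical respondents with indicator variables W, B, S (illustrative only)
respondents = [
    {"W": 1, "B": 0, "S": 1},
    {"W": 0, "B": 1, "S": 1},
    {"W": 1, "B": 1, "S": 0},  # reported two races, so S = 0
    {"W": 0, "B": 0, "S": 1},  # single race, neither white nor black
    {"W": 0, "B": 1, "S": 1},
]

# Sigma: respondents who self-reported exactly one race (S = 1)
sigma = [r for r in respondents if r["S"] == 1]

# Pi: members of Sigma for whom W = 1 or B = 1
pi = [r for r in sigma if r["W"] == 1 or r["B"] == 1]

# Inside Pi exactly one of W, B equals 1, so A is single-valued (A = B)
assert all(r["W"] + r["B"] == 1 for r in pi)
A = [r["B"] for r in pi]
print(len(sigma), len(pi), A)
```

The assertion inside the block is the point of the exercise: only after both filters does every remaining person have exactly one of \(W = 1\) or \(B = 1\), which is what makes \(A\) a valid binary Random Variable.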

Now, as the Hamilton Project/Brookings study (and many, many, probably most, studies of race in the US) defines it implicitly, we can therefore be more explicit here that we are:

Correspondingly, we can map:

I will drop the scare-quotes on “Black” and “White” in a lot of places going forward, so your job is to insert them in your mind when you read the two non-scare-quoted words! I understand if that strikes you as pedantic at first, but please keep in mind the goal of transparency and reproducibility in science (those aren’t even from this class, they’re from the core DSAN5000 class, week 1!): the point is to enable people who are (rightfully) skeptical about data scientists studying race to at least be able to scroll up here and uncover some of the layers of assumptions undergirding our operationalization of “race” here.

Now, to characterize the height of the bar plotted in the figure’s left panel (as opposed to the split of the population into two separate bars), we need to define \(D\) as a Random Variable (short for “Drugs” in this case) representing the drug use of a randomly-chosen person from \(\Pi\), such that

\[ D = \begin{cases} 0 &\text{if Doesn't Use Drugs} \\ 1 &\text{if Uses Drugs} \end{cases} \]

And now we can represent the two bar heights, the two population-level parameters, as:

\[ \begin{align*} \mathbb{E}[D \mid A = 0] = \Pr(D = 1 \mid A = 0) &\approx 0.18 \\ \mathbb{E}[D \mid A = 1] = \Pr(D = 1 \mid A = 1) &\approx 0.16 \end{align*} \]

where the expectation and the probability are equal in this case because the Random Variable \(D\) is binary (0/1). For any binary Random Variable \(X\) with range \(\mathcal{R}_X = \{0, 1\}\):

\[ \mathbb{E}[X] \overset{\text{def}}{=} \sum_{v_X \in \mathcal{R}_X}v_X \cdot \Pr(X = v_X) = 0 \cdot \Pr(X = 0) + 1 \cdot \Pr(X = 1) = \Pr(X = 1) \]

Notice how there are implementation factors coming into play in moving from this information towards the Fairness in AI material below, since we have a somewhat weird case of something (drug use) that we can infer at the population level despite not being able to easily observe it at the individual level. In other words, to move to the next “step” from this one, we’re already leaning on a lot of material from weeks 1 and 2 (for example, the ethics of eliciting sensitive data: an individual is not going to be as forthcoming about their illegal drug usage as they would be about their eye color).
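As a quick numerical check of the identity \(\mathbb{E}[X] = \Pr(X = 1)\) for a binary variable, the sketch below simulates 0/1 draws and compares the sample mean with the share of ones. The seed and sample size are arbitrary choices made for this sketch.

```python
import random

random.seed(0)  # arbitrary seed, only for repeatability
p = 0.18        # the value of Pr(D = 1 | A = 0) from the text

# Simulate a binary Random Variable: 1 with probability p, else 0
draws = [1 if random.random() < p else 0 for _ in range(100_000)]

sample_mean = sum(draws) / len(draws)        # estimates E[D]
share_of_ones = draws.count(1) / len(draws)  # estimates Pr(D = 1)
print(sample_mean, share_of_ones)
```

The two printed numbers are identical by construction, since summing 0/1 values is the same as counting the ones.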

Although the population-level data above is technically available for use by researchers, on its own it doesn’t help very much for researchers working with ML-based classifiers for example, since it basically represents a dataset with \(N = 2\) observations:

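import pandas as pd

# Row 0 is A = 0 ("white") and row 1 is A = 1 ("black"), matching the conditional probabilities above
pop_df = pd.DataFrame({'quote_unquote_race': [0, 1], 'prop_uses_drugs': [0.18, 0.16]})

pop_df

| | quote_unquote_race | prop_uses_drugs |
|---|---|---|
| 0 | 0 | 0.18 |
| 1 | 1 | 0.16 |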

To even get started in terms of being able to use this data to evaluate fairness, we also need to know the base rates of the two subgroups \(\mathcal{B}\) and \(\mathcal{W}\) with respect to their combined population \(\Pi\). For example, Chapter 3 of Barocas et al. (2024) builds its description of fairness in AI around classification as the problem of determining (predicting) values of \(y\) for given values of \(x\), rooted in jointly distributed Random Variables \(X\) and \(Y\), with a particular collected dataset viewed as samples from

a probability distribution over pairs of values \((x,y)\) that the random variables \((X,Y)\) might take on.

In our case, notice how we currently only have the two conditional expectations written above, not a full joint distribution of \(D\) and \(A\). So, we should be able to identify the missing piece by writing out the joint distribution as a function of conditional and marginal distributions (in prob/stats textbooks, this is usually introduced as the definition of conditional probability):

\[ \Pr(D \mid A) = \frac{\Pr(D, A)}{\Pr(A)} \implies \Pr(D, A) = \underbrace{\Pr(D \mid A)}_{\text{We have this}}\underbrace{\Pr(A)}_{\text{We don't have this}} \]

So, we have to go out and find the missing term \(\Pr(A)\). Thankfully for this case, the US Census Bureau’s job is to take censuses of the self-reported race of the US population. Less thankfully, these are reported with respect to \(\overline{\Pi}\), not \(\Pi\), so we’ll need to re-normalize.

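You can find the Census population percentages here, which tell us that \(\Pr_{\overline{\Pi}}(S = 0) = 0.031\), \(\Pr_{\overline{\Pi}}(W = 1, S = 1) = 0.753\), and \(\Pr_{\overline{\Pi}}(B = 1, S = 1) = 0.137\) (these are the values that enter the calculations that follow).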

First, since the Census provides \(\Pr_{\overline{\Pi}}(S = 0)\), but the Hamilton/Brookings study’s population is those for whom \(S = 1\), we’ll need to use the Kolmogorov axioms to derive

\[ \textstyle \Pr_{\overline{\Pi}}(S = 1) = 1 - \Pr_{\overline{\Pi}}(S = 0) = 0.969. \]

From this quantity we can derive

\[ \begin{align*} \textstyle \Pr_{\overline{\Pi}}(W = 1 \mid S = 1) &= \frac{\Pr_{\overline{\Pi}}(W = 1, S = 1)}{\Pr_{\overline{\Pi}}(S = 1)} = \frac{0.753}{0.969} \approx 0.777 \\ \textstyle \Pr_{\overline{\Pi}}(B = 1 \mid S = 1) &= \frac{\Pr_{\overline{\Pi}}(B = 1, S = 1)}{\Pr_{\overline{\Pi}}(S = 1)} = \frac{0.137}{0.969} \approx 0.141 \\ \end{align*} \]

therefore giving us (by the way we defined \(\Sigma\) above):

\[ \begin{align*} \textstyle \Pr_{\Sigma}(W = 1) &= 0.777 \\ \textstyle \Pr_{\Sigma}(B = 1) &= 0.141 \end{align*} \]

and finally, by the way we defined \(\Pi\) (where, since this is our target population, the one we’d like to use for the remainder of the demo, we define \(\Pr_{\Pi}(E) \equiv \Pr(E)\)),

\[ \begin{align*} \Pr(A = 0) &= \frac{\Pr_{\Sigma}(W = 1)}{\Pr_{\Sigma}(W = 1 \vee B = 1)} = \frac{0.777}{0.777 + 0.141} \approx 0.846 \\ \Pr(A = 1) &= \frac{\Pr_{\Sigma}(B = 1)}{\Pr_{\Sigma}(W = 1 \vee B = 1)} = \frac{0.141}{0.777 + 0.141} \approx 0.154 \end{align*} \]
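The two re-normalization steps can be reproduced in a few lines. This is a minimal sketch that uses only the three Census-derived numbers appearing in the equations above; the variable names are chosen here for illustration.

```python
p_S1 = 0.969      # Pr(S = 1) over the full population
p_W1_S1 = 0.753   # Pr(W = 1, S = 1)
p_B1_S1 = 0.137   # Pr(B = 1, S = 1)

# Step 1: condition on S = 1 to move from the full population to Sigma
p_W_sigma = p_W1_S1 / p_S1
p_B_sigma = p_B1_S1 / p_S1

# Step 2: condition on (W = 1 or B = 1) to move to Pi;
# the two events are disjoint within Sigma, so their probabilities add
p_A0 = p_W_sigma / (p_W_sigma + p_B_sigma)
p_A1 = p_B_sigma / (p_W_sigma + p_B_sigma)

print(round(p_W_sigma, 3), round(p_B_sigma, 3), round(p_A0, 3), round(p_A1, 3))
```

Note that \(\Pr_{\overline{\Pi}}(S = 1)\) cancels in the second step, so the base rates within \(\Pi\) depend only on the ratio of the two single-race shares.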

Now that we have the missing piece allowing us to fully characterize the joint distribution, we can (finally) start deriving a few of the non-immediately-obvious implications from the data we have. For example:

The probability of being a drug user

\[ \begin{align*} \Pr(D = 1) &= \Pr(D = 1, A = 0) + \Pr(D = 1, A = 1) \\ &= \Pr(D = 1 \mid A = 0)\Pr(A = 0) + \Pr(D = 1 \mid A = 1)\Pr(A = 1) \\ &= (0.18)(0.846) + (0.16)(0.154) \approx 0.177 \end{align*} \]

The probability of being a non-drug user (a sanity check to make sure our probabilities satisfy Kolmogorov axioms)

\[ \begin{align*} \Pr(D = 0) &= \Pr(D = 0, A = 0) + \Pr(D = 0, A = 1) \\ &= \Pr(D = 0 \mid A = 0)\Pr(A = 0) + \Pr(D = 0 \mid A = 1)\Pr(A = 1) \\ &= (0.82)(0.846) + (0.84)(0.154) \approx 0.823 \end{align*} \]

The probability that someone is black given that they are a drug user

\[ \begin{align*} \Pr(A = 1 \mid D = 1) &\overset{\text{Bayes}}{\underset{\text{Thm}}{=}} \frac{\Pr(D = 1 \mid A = 1)\Pr(A = 1)}{\Pr(D = 1)} = \frac{(0.16)(0.154)}{0.177} \\ &\approx 0.139 \end{align*} \]

The probability that someone is white given that they are a drug user

\[ \begin{align*} \Pr(A = 0 \mid D = 1) &\overset{\text{Bayes}}{\underset{\text{Thm}}{=}} \frac{\Pr(D = 1 \mid A = 0)\Pr(A = 0)}{\Pr(D = 1)} = \frac{(0.18)(0.846)}{0.177} \\ &\approx 0.860 \end{align*} \]
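The marginal and the two Bayes' theorem calculations above can be checked with a short script. This is only a sketch, and the dictionary names are introduced here for illustration.

```python
p_A = {0: 0.846, 1: 0.154}         # base rates Pr(A = a)
p_D1_given_A = {0: 0.18, 1: 0.16}  # Pr(D = 1 | A = a)

# Law of total probability: Pr(D = 1) = sum over a of Pr(D = 1 | A = a) Pr(A = a)
p_D1 = sum(p_D1_given_A[a] * p_A[a] for a in p_A)

# Bayes' theorem: Pr(A = a | D = 1)
posterior = {a: p_D1_given_A[a] * p_A[a] / p_D1 for a in p_A}
print(round(p_D1, 3), {a: round(v, 3) for a, v in posterior.items()})
```

When the denominator is kept unrounded (0.17692 rather than 0.177), the value for \(A = 0\) comes out slightly closer to 0.861, and the two posteriors sum to exactly 1.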

And, more generally, we can write out the entire joint distribution in a table like

| | \(D = 0\) | \(D = 1\) |
|---|---|---|
| \(A = 0\) | \(\Pr(A = 0, D = 0)\) | \(\Pr(A = 0, D = 1)\) |
| \(A = 1\) | \(\Pr(A = 1, D = 0)\) | \(\Pr(A = 1, D = 1)\) |

Which we compute using Python here to save time (though in general the types of calculations above are fair game for assignments / exams!)

import numpy as np

pA0 = 0.846

pA1 = 1 - pA0 # Hooray for mantissas (...mantissae? mantissi?)

pD1_given_A0 = 0.18

pD0_given_A0 = 1 - pD1_given_A0

pD1_given_A1 = 0.16

pD0_given_A1 = 1 - pD1_given_A1

# Joint probabilities

pD1_A0 = pD1_given_A0 * pA0

print(pD1_A0)

pD1_A1 = pD1_given_A1 * pA1

print(pD1_A1)

pD0_A0 = pD0_given_A0 * pA0

print(pD0_A0)

pD0_A1 = pD0_given_A1 * pA1

print(pD0_A1)

joint_dist = np.array([

[pD0_A0, pD1_A0],

[pD0_A1, pD1_A1]

])

print(joint_dist)

print(np.sum(joint_dist))

print(pD0_A0 + pD0_A1 + pD1_A0 + pD1_A1) # Coolio

0.15228

0.024640000000000006

0.69372

0.12936000000000003

[[0.69372 0.15228]

[0.12936 0.02464]]

1.0

1.0

Now, if we re-do everything above but with drug usage rates operationalized using arrest rates, we instead have

\[ \begin{align*} \Pr(D = 1 \mid A = 0) &= 0.0040 \\ \Pr(D = 1 \mid A = 1) &= 0.0105 \end{align*} \]
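To see how much the choice of operationalization matters, the sketch below re-runs the Bayes' theorem step with both sets of conditional rates. The helper name `posterior_black_given_d1` is hypothetical, and the base rate 0.846 is the value of \(\Pr(A = 0)\) derived earlier.

```python
def posterior_black_given_d1(p_d1_a0, p_d1_a1, p_a0=0.846):
    """Pr(A = 1 | D = 1) via Bayes' theorem for a binary A."""
    p_a1 = 1 - p_a0
    # Law of total probability for the denominator Pr(D = 1)
    p_d1 = p_d1_a0 * p_a0 + p_d1_a1 * p_a1
    return p_d1_a1 * p_a1 / p_d1

survey_based = posterior_black_given_d1(0.18, 0.16)       # survey-based usage rates
arrest_based = posterior_black_given_d1(0.0040, 0.0105)   # arrest-based rates
print(round(survey_based, 3), round(arrest_based, 3))
```

With the same base rates, the estimated share of "drug users" who are black roughly doubles (from about 0.14 to about 0.32) once \(D\) is measured by arrests instead of surveys.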

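# Assumed setup: the original does not show how rng is created (unseeded, so results vary between runs)
rng = np.random.default_rng()

def gen_noisy_feature(pop_race_vec):
    # Noisy proxy for A: +1 if A == 1, -1 otherwise (uses the vector passed in, not a global DataFrame)
    X_bin = pop_race_vec.apply(lambda x: 1 if x == 1 else -1)
    # Random (low chance) flip
    X_mult = rng.choice([1,-1], size=len(pop_race_vec), p=[0.95,0.05])
    X_result = X_bin * X_mult
    # Small Gaussian noise around the +/-1 value
    X_noise = rng.normal(0, 0.1, size=len(pop_race_vec))
    X_final = X_result + X_noise
    return X_final

def gen_random_feature(pop_race_vec):
    # Pure noise, unrelated to A
    X_rand = rng.normal(0, 0.1, size=len(pop_race_vec))
    return X_rand

def classify(test_Xmat, d_prob_thresh = 0.17):
    # Relies on an already-fitted global classifier named clf
    test_Xmat_pred = clf.predict_proba(test_Xmat)
    test_d_prob = test_Xmat_pred[:,1]
    test_d_class = np.where(test_d_prob > d_prob_thresh, 1, 0)
    return test_d_class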

def construct_population(pD1_given_A0, pD1_given_A1, nu=1000):
    pA0 = 0.846
    pA1 = 1 - pA0
    pD0_given_A0 = 1 - pD1_given_A0
    pD0_given_A1 = 1 - pD1_given_A1
    # Joint probabilities
    pD1_A0 = pD1_given_A0 * pA0
    pD1_A1 = pD1_given_A1 * pA1
    pD0_A0 = pD0_given_A0 * pA0
    pD0_A1 = pD0_given_A1 * pA1
    # Rows index A, columns index D
    joint_dist = np.array([
        [pD0_A0, pD1_A0],
        [pD0_A1, pD1_A1]
    ])
    print(joint_dist)
    # Expected head-count for each (A, D) cell in a population of size nu
    # (the nu argument is used as passed in, rather than being reset to 1000)
    joint_freqs = nu * joint_dist
    D0_A0_df = pd.DataFrame([{'D': 0, 'A': 0}] * int(round(joint_freqs[0,0])))
    D0_A1_df = pd.DataFrame([{'D': 0, 'A': 1}] * int(round(joint_freqs[1,0])))
    D1_A0_df = pd.DataFrame([{'D': 1, 'A': 0}] * int(round(joint_freqs[0,1])))
    D1_A1_df = pd.DataFrame([{'D': 1, 'A': 1}] * int(round(joint_freqs[1,1])))
    pop_df = pd.concat([D0_A0_df, D0_A1_df, D1_A0_df, D1_A1_df], axis=0, ignore_index=True)
    #display(pop_df)
    pop_df['X0'] = gen_noisy_feature(pop_df['A'])
    pop_df['X1'] = gen_random_feature(pop_df['A'])
    return pop_df

arrest_df = construct_population(0.0040, 0.0105)

display(arrest_df)

[[0.842616 0.003384]
 [0.152383 0.001617]]

| | D | A | X0 | X1 |
|---|---|---|---|---|
| 0 | 0 | 0 | -0.971645 | -0.024211 |
| 1 | 0 | 0 | -1.176896 | 0.039959 |
| 2 | 0 | 0 | -1.008070 | -0.026912 |
| 3 | 0 | 0 | -0.999462 | 0.021601 |
| 4 | 0 | 0 | -0.944086 | -0.149199 |
| ... | ... | ... | ... | ... |
| 995 | 1 | 0 | 0.925104 | -0.033928 |
| 996 | 1 | 0 | 1.013372 | -0.130119 |
| 997 | 1 | 0 | 0.898179 | 0.108746 |
| 998 | 1 | 1 | 0.887125 | -0.123374 |
| 999 | 1 | 1 | 0.848386 | -0.082399 |

1000 rows × 4 columns

from sklearn.linear_model import LogisticRegression
from sklearn.metrics import ConfusionMatrixDisplay, confusion_matrix

# Classify
def thresh_classify(cur_clf, test_Xmat, d_prob_thresh = 0.17):
    # Predict D = 1 whenever the estimated Pr(D = 1) exceeds the threshold
    test_Xmat_pred = cur_clf.predict_proba(test_Xmat)
    test_d_prob = test_Xmat_pred[:,1]
    test_d_class = np.where(test_d_prob > d_prob_thresh, 1, 0)
    return test_d_class

pop_clf = LogisticRegression()
X_pop = arrest_df[['X0','X1']].copy()
d_pop = arrest_df['D'].copy()
pop_clf.fit(X_pop, d_pop)

# Same fitted model, three different decision thresholds
optimal_pr_d_hat = thresh_classify(pop_clf, X_pop, 0.5)
fair_pr_d_hat = thresh_classify(pop_clf, X_pop, 0.03)
unfair_pr_d_hat = thresh_classify(pop_clf, X_pop, 0.025)

def display_confusion(race_vec, classification_vec):
    return ConfusionMatrixDisplay.from_predictions(race_vec, classification_vec) #, display_labels=['not_drug_user','drug_user'])

def display_confusion_normalized(race_vec, classification_vec):
    return ConfusionMatrixDisplay.from_predictions(race_vec, classification_vec, normalize='true') #, display_labels=['not_drug_user','drug_user'])

For optimal classifier:

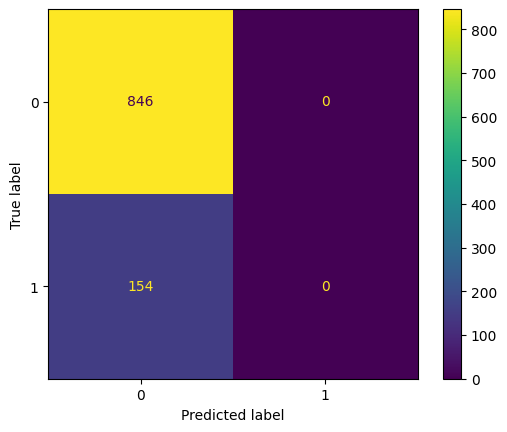

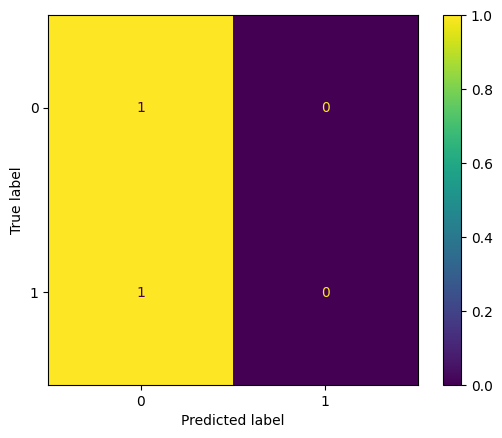

display_confusion(arrest_df['A'], optimal_pr_d_hat)

display_confusion_normalized(arrest_df['A'], optimal_pr_d_hat)

For effective threshold classifier:

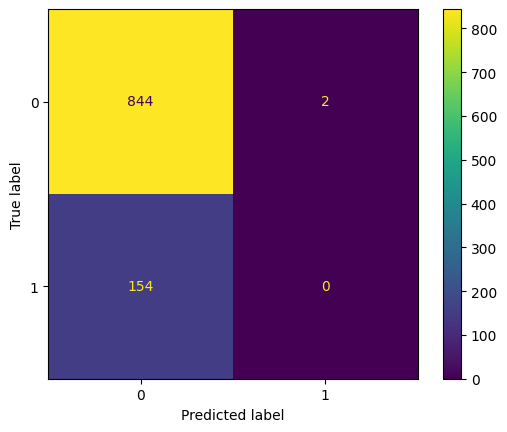

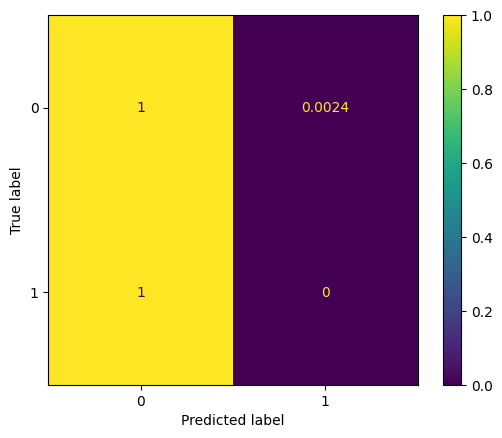

display_confusion(arrest_df['A'], fair_pr_d_hat)

display_confusion_normalized(arrest_df['A'], fair_pr_d_hat)

def compute_unfairness(race_vec, d_hat):
    # Rows are normalized, so cmat[a, 1] estimates Pr(D_hat = 1 | A = a)
    cmat = confusion_matrix(race_vec, d_hat, normalize='true')
    pr_DH1_A0 = cmat[0,1]
    pr_DH1_A1 = cmat[1,1]
    return abs(pr_DH1_A1 - pr_DH1_A0)

def compute_accuracy(d_vec, d_hat):
    # With normalize='all', the diagonal holds the overall shares of correct predictions
    cmat = confusion_matrix(d_vec, d_hat, normalize='all')
    pr_DH0_D0 = cmat[0,0]
    pr_DH1_D1 = cmat[1,1]
    return pr_DH0_D0 + pr_DH1_D1

print(compute_unfairness(arrest_df['A'], fair_pr_d_hat))
print(compute_accuracy(arrest_df['D'], fair_pr_d_hat))

0.002364066193853428

0.993

thresh_vals_macro = np.arange(0, 0.04, 0.0001)

def gen_unfairness_curve(thresh_vals):
    unfairness_data = []
    for cur_thresh in thresh_vals:
        cur_pr_d_hat = thresh_classify(pop_clf, X_pop, cur_thresh)
        cur_unfairness = compute_unfairness(arrest_df['A'], cur_pr_d_hat)
        cur_accuracy = compute_accuracy(arrest_df['D'], cur_pr_d_hat)
        cur_data = {
            'thresh': cur_thresh,
            # Note: unfairness is stored multiplied by 10
            'unfairness': 10*cur_unfairness,
            'accuracy': cur_accuracy,
        }
        unfairness_data.append(cur_data)
    unfairness_df = pd.DataFrame(unfairness_data)
    return unfairness_df

unfairness_macro_df = gen_unfairness_curve(thresh_vals_macro)

unfairness_macro_df

| | thresh | unfairness | accuracy |
|---|---|---|---|
| 0 | 0.0000 | 0.0 | 0.005 |
| 1 | 0.0001 | 0.0 | 0.005 |
| 2 | 0.0002 | 0.0 | 0.005 |
| 3 | 0.0003 | 0.0 | 0.005 |
| 4 | 0.0004 | 0.0 | 0.005 |
| ... | ... | ... | ... |
| 395 | 0.0395 | 0.0 | 0.995 |
| 396 | 0.0396 | 0.0 | 0.995 |
| 397 | 0.0397 | 0.0 | 0.995 |
| 398 | 0.0398 | 0.0 | 0.995 |
| 399 | 0.0399 | 0.0 | 0.995 |

400 rows × 3 columns

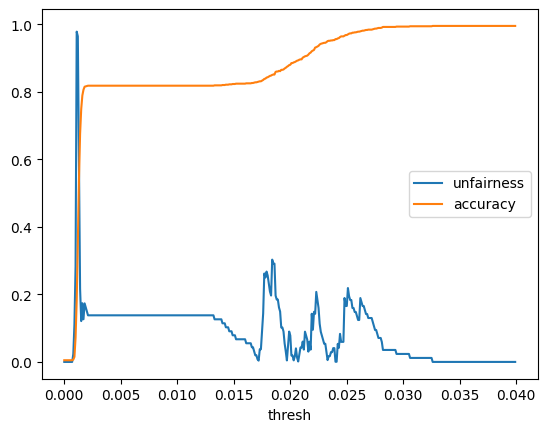

unfairness_macro_df.plot(x='thresh', y=['unfairness','accuracy'])

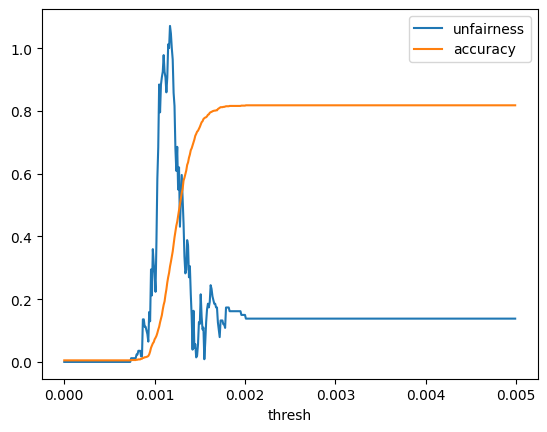

thresh_vals_micro = np.arange(0, 0.005, 0.00001)

unfairness_micro_df = gen_unfairness_curve(thresh_vals_micro)

unfairness_micro_df.plot(x='thresh', y=['unfairness','accuracy'])

Building on Statistical Parity, Predictive Parity incorporates the actual accuracy of the predictions, requiring only that the rate of correct positive predictions (the share of people predicted to use drugs who actually do) is equal for \(A = 0\) and \(A = 1\):

\[ \Pr(D = 1 \mid \widehat{D} = 1, A = 0) = \Pr(D = 1 \mid \widehat{D} = 1, A = 1) \]
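As a minimal sketch of how this condition could be checked on data, the function below estimates \(\Pr(D = 1 \mid \widehat{D} = 1, A = g)\) for each group. The function name `ppv_by_group` and the toy vectors are hypothetical and are not taken from the course code.

```python
def ppv_by_group(d_true, d_hat, a):
    """Estimate Pr(D = 1 | D_hat = 1, A = g) for each group g."""
    result = {}
    for g in sorted(set(a)):
        # True labels of everyone in group g who was predicted positive
        flagged = [d for d, dh, ag in zip(d_true, d_hat, a) if ag == g and dh == 1]
        # Undefined (None) when nobody in the group is predicted positive
        result[g] = sum(flagged) / len(flagged) if flagged else None
    return result

# Hypothetical toy data (illustrative only)
a      = [0, 0, 0, 0, 1, 1, 1, 1]
d_true = [1, 0, 1, 0, 1, 0, 0, 1]
d_hat  = [1, 1, 0, 0, 1, 1, 1, 0]

ppv = ppv_by_group(d_true, d_hat, a)
print(ppv)
```

In this toy example the two groups get different values (one half versus one third), so Predictive Parity would be violated. Returning `None` for a group with no positive predictions is a design choice of this sketch, since the conditional probability is undefined in that case.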

For any given estimation “score” \(s(X)\) used by the algorithm as a proxy for estimating \(\widehat{\Pr}(D = 1)\)[2], which in turn gets used (e.g., via thresholding) to generate a final prediction \(\widehat{D}\), the probability of using drugs \(\Pr(D = 1)\), conditional on each value \(s\) of the score, is equal for \(A = 0\) and \(A = 1\):

\[ \Pr(D = 1 \mid s(X) = s, A = 0) = \Pr(D = 1 \mid s(X) = s, A = 1), \; \forall s \in [0, 1] \]

This measure is the least bamboozling in the sense that it directly addresses the underlying “risk scores” which, if a given machine learning algorithm does not directly compute itself, can at least be inferred by treating the algorithm like a “black box”.
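One way to probe this score-based condition empirically is to group people by score and compare the observed rate of \(D = 1\) across \(A = 0\) and \(A = 1\) within each score range. The sketch below assumes equal-width bins as an approximation to conditioning on an exact score value; `calibration_table`, `n_bins`, and the toy data are all hypothetical.

```python
from collections import defaultdict

def calibration_table(scores, d_true, a, n_bins=5):
    """Observed Pr(D = 1) per (score bin, group); bins are equal-width on [0, 1]."""
    cells = defaultdict(list)
    for s, d, g in zip(scores, d_true, a):
        b = min(int(s * n_bins), n_bins - 1)  # put s = 1.0 into the top bin
        cells[(b, g)].append(d)
    return {key: sum(v) / len(v) for key, v in sorted(cells.items())}

# Hypothetical toy scores (illustrative only)
scores = [0.1, 0.1, 0.9, 0.9, 0.1, 0.1, 0.9, 0.9]
d_true = [0, 0, 1, 1, 0, 1, 1, 1]
a      = [0, 0, 0, 0, 1, 1, 1, 1]

table = calibration_table(scores, d_true, a)
print(table)
```

Here the top bin shows the same observed rate for both groups, while the bottom bin does not (0.0 versus 0.5), so the condition would fail for low scores in this toy data.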