3 Random Variables and Distributions

3.1 Random Variables and Discrete Distributions

A random variable is a real-valued function defined on a sample space. Random variables are the main tools used for modeling unknown quantities in statistical analyses. For each random variable \(X\) and each set \(C\) of real numbers, we could calculate the probability that \(X\) takes its value in \(C\). The collection of all of these probabilities is the distribution of \(X\). There are two major classes of distributions and random variables: discrete (this section) and continuous (Section 3.2). Discrete distributions are those that assign positive probability to at most countably many different values. A discrete distribution can be characterized by its probability mass function (pmf), which specifies the probability that the random variable takes each of the different possible values. A random variable with a discrete distribution will be called a discrete random variable.

Definition of a Random Variable

Definition 3.1 (Definition 3.1.1: Random Variable) Let \(S\) be the sample space for an experiment. A real-valued function that is defined on \(S\) is called a random variable.

For example, in Example 3.1 the number \(X\) of heads in the 10 tosses is a random variable. Another random variable in that example is \(Y = 10 − X\), the number of tails.

Example 3.2 (Example 3.1.2: Measuring a Person’s Height.) Consider an experiment in which a person is selected at random from some population and her height in inches is measured. This height is a random variable.

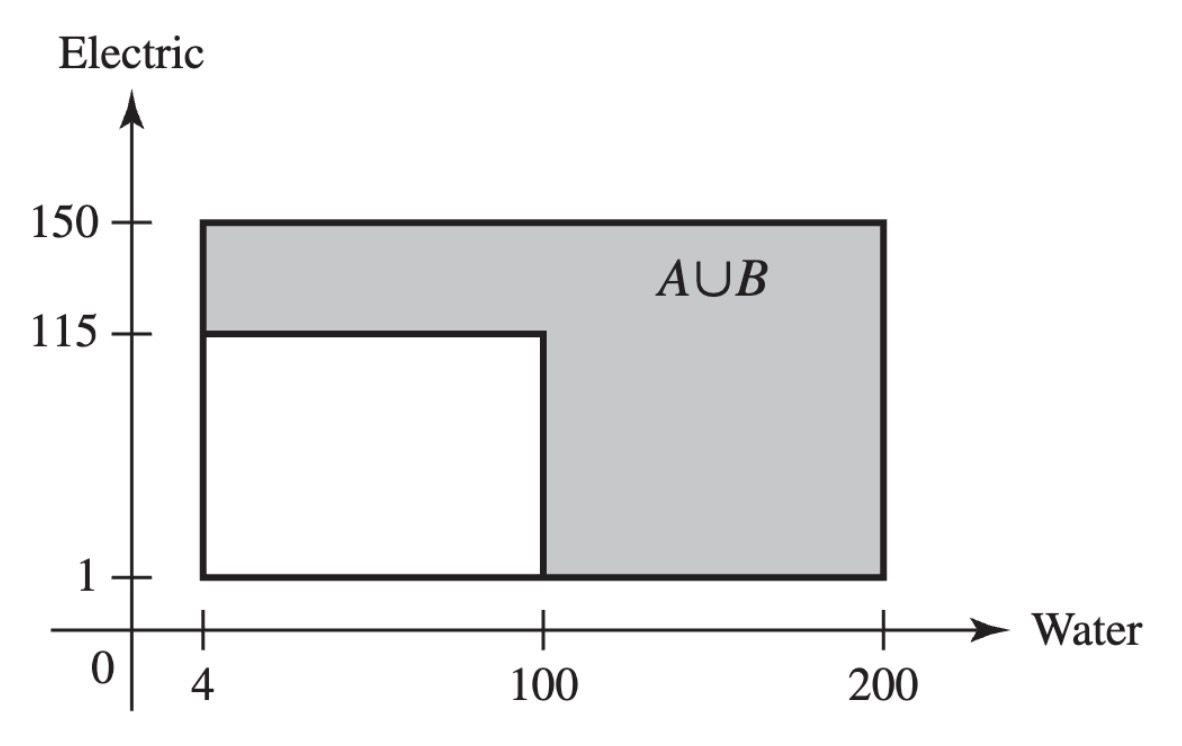

Example 3.3 (Example 3.1.3: Demands for Utilities) Consider the contractor in Example 1.9 who is concerned about the demands for water and electricity in a new office complex. The sample space was pictured in Figure 1.5, and it consists of a collection of points of the form \((x, y)\), where \(x\) is the demand for water and \(y\) is the demand for electricity. That is, each point \(s \in S\) is a pair \(s = (x, y)\). One random variable that is of interest in this problem is the demand for water. This can be expressed as \(X(s) = x\) when \(s = (x, y)\). The possible values of \(X\) are the numbers in the interval \([4, 200]\). Another interesting random variable is \(Y\), equal to the electricity demand, which can be expressed as \(Y(s) = y\) when \(s = (x, y)\). The possible values of \(Y\) are the numbers in the interval \([1, 150]\). A third possible random variable \(Z\) is an indicator of whether or not at least one demand is high. Let \(A\) and \(B\) be the two events described in Example 1.9. That is, \(A\) is the event that water demand is at least 100, and \(B\) is the event that electric demand is at least 115. Define

\[ Z(s) = \begin{cases} 1 &\text{if }s \in A \cup B, \\ 0 &\text{if }s \notin A \cup B. \end{cases} \]

The possible values of \(Z\) are the numbers \(0\) and \(1\). The event \(A \cup B\) is indicated in Figure 3.1.

The Distribution of a Random Variable

When a probability measure has been specified on the sample space of an experiment, we can determine probabilities associated with the possible values of each random variable \(X\). Let \(C\) be a subset of the real line such that \(\{X \in C\}\) is an event, and let \(\Pr(X \in C)\) denote the probability that the value of \(X\) will belong to the subset \(C\). Then \(\Pr(X \in C)\) is equal to the probability that the outcome \(s\) of the experiment will be such that \(X(s) \in C\). In symbols,

\[ \Pr(X \in C) = \Pr(\{s: X(s) \in C\}). \tag{3.1}\]

Definition 3.2 (Definition 3.1.2: Distribution) Let \(X\) be a random variable. The distribution of \(X\) is the collection of all probabilities of the form \(\Pr(X \in C)\) for all sets \(C\) of real numbers such that \(\{X \in C\}\) is an event.

It is a straightforward consequence of the definition of the distribution of \(X\) that this distribution is itself a probability measure on the set of real numbers. The set \(\{X \in C\}\) will be an event for every set \(C\) of real numbers that most readers will be able to imagine.

Example 3.4 (Example 3.1.4: Tossing a Coin) Consider again an experiment in which a fair coin is tossed 10 times, and let \(X\) be the number of heads that are obtained. In this experiment, the possible values of \(X\) are \(0, 1, 2, \ldots, 10\). For each \(x\), \(\Pr(X = x)\) is the sum of the probabilities of all of the outcomes in the event \(\{X = x\}\). Because the coin is fair, each outcome has the same probability \(1/2^{10}\), and we need only count how many outcomes \(s\) have \(X(s) = x\). We know that \(X(s) = x\) if and only if exactly \(x\) of the 10 tosses are \(H\). Hence, the number of outcomes \(s\) with \(X(s) = x\) is the same as the number of subsets of size \(x\) (to be the heads) that can be chosen from the 10 tosses, namely, \(\binom{10}{x}\), according to Definitions 1.14 and 1.15. Hence,

\[ \Pr(X = x) = \binom{10}{x}\frac{1}{2^{10}} \; \text{ for }x = 0, 1, 2, \ldots, 10. \]

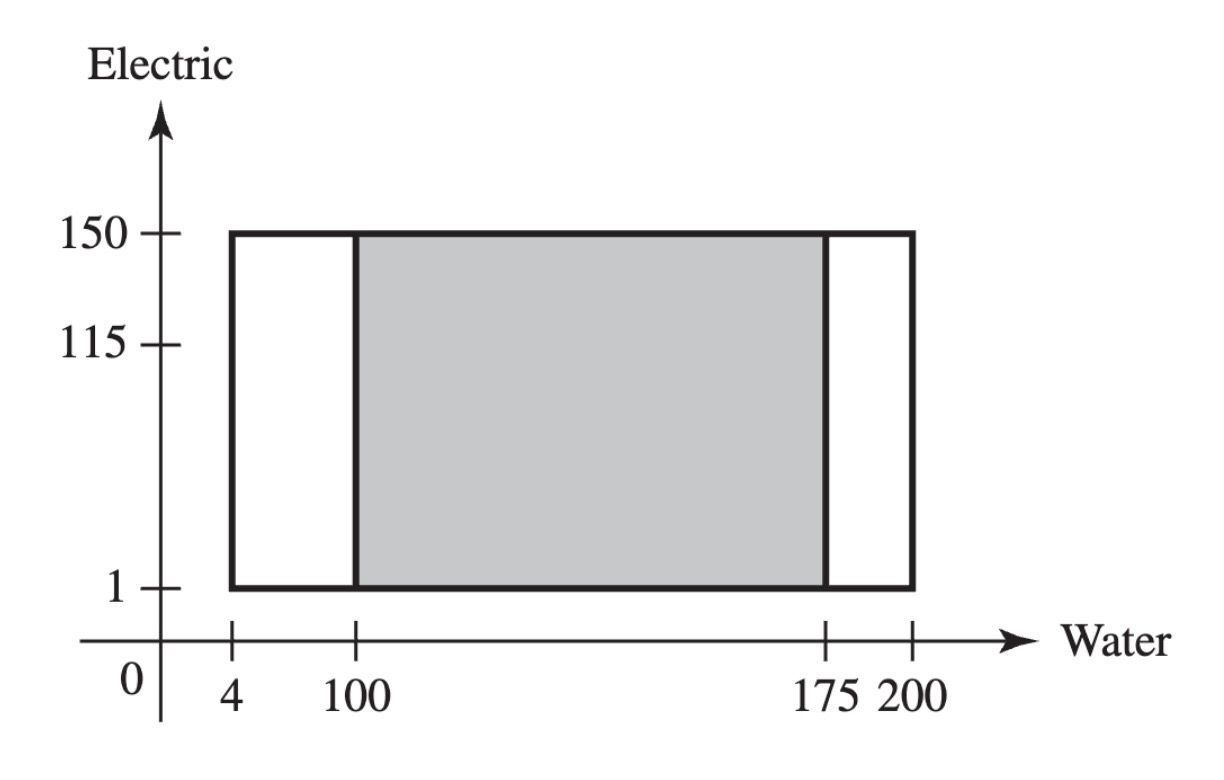

Example 3.5 (Example 3.1.5: Demands for Utilities) In Example 1.9, we actually calculated some features of the distributions of the three random variables \(X\), \(Y\), and \(Z\) defined in Example 3.3. For example, the event \(A\), defined as the event that water demand is at least 100, can be expressed as \(A = \{X \geq 100\}\), and \(\Pr(A) = 0.5102\). This means that \(\Pr(X \geq 100) = 0.5102\). The distribution of \(X\) consists of all probabilities of the form \(\Pr(X \in C)\) for all sets \(C\) such that \(\{X \in C\}\) is an event. These can all be calculated in a manner similar to the calculation of \(\Pr(A)\) in Example 1.9. In particular, if \(C\) is a subinterval of the interval \([4, 200]\), then

\[ \Pr(X \in C) = \frac{ (150-1) \times (\text{length of interval }C) }{ 29,204 }. \tag{3.2}\]

For example, if \(C\) is the interval \([50,175]\), then its length is 125, and \(\Pr(X \in C) = 149 \times 125/29,204 = 0.6378\). The subset of the sample space whose probability was just calculated is drawn in Figure 3.2.

The general definition of distribution in Definition 3.2 is awkward, and it will be useful to find alternative ways to specify the distributions of random variables. In the remainder of this section, we shall introduce a few such alternatives.

Discrete Distributions

Definition 3.3 (Definition 3.1.3: Discrete Distribution / Random Variable) We say that a random variable \(X\) has a discrete distribution or that \(X\) is a discrete random variable if \(X\) can take only a finite number \(k\) of different values \(x_1, \ldots, x_k\) or, at most, an infinite sequence of different values \(x_1, x_2, \ldots\).

Random variables that can take every value in an interval are said to have continuous distributions and are discussed in Section 3.2.

Definition 3.4 (Definition 3.1.4: Probability Mass Function/pmf/Support) If a random variable \(X\) has a discrete distribution, the probability mass function (abbreviated pmf) of \(X\) is defined as the function \(f\) such that for every real number \(x\),

\[ f(x) = \Pr(X = x). \]

The closure of the set \(\{x \mid f(x) > 0\}\) is called the support of (the distribution of) \(X\).

Example 3.6 (Example 3.1.6: Demands for Utilities) The random variable \(Z\) in Example 3.3 equals 1 if at least one of the utility demands is high, and \(Z = 0\) if neither demand is high. Since \(Z\) takes only two different values, it has a discrete distribution. Note that \(\{s \mid Z(s) = 1\} = A \cup B\), where \(A\) and \(B\) are defined in Example 1.9. We calculated \(\Pr(A \cup B) = 0.65253\) in Example 1.9. If \(Z\) has pmf \(f\), then

\[ f(z) = \begin{cases} 0.65253 &\text{if }z = 1, \\ 0.34747 &\text{if }z = 0, \\ 0 &\text{otherwise.} \end{cases} \]

The support of \(Z\) is the set \(\{0, 1\}\), which has only two elements.

Example 3.7 (Example 3.1.7: Tossing a Coin) The random variable \(X\) in Example 3.4 has only 11 different possible values. Its pmf \(f\) is given at the end of that example for the values \(x = 0, \ldots, 10\) that constitute the support of \(X\); \(f(x) = 0\) for all other values of \(x\).

Here are some simple facts about probability mass functions.

Theorem 3.1 (Theorem 3.1.1) Let \(X\) be a discrete random variable with pmf \(f\). If \(x\) is not one of the possible values of \(X\), then \(f(x) = 0\). Also, if the sequence \(x_1, x_2, \ldots\) includes all the possible values of \(X\), then \(\sum_{i=1}^{\infty}f(x_i) = 1\).

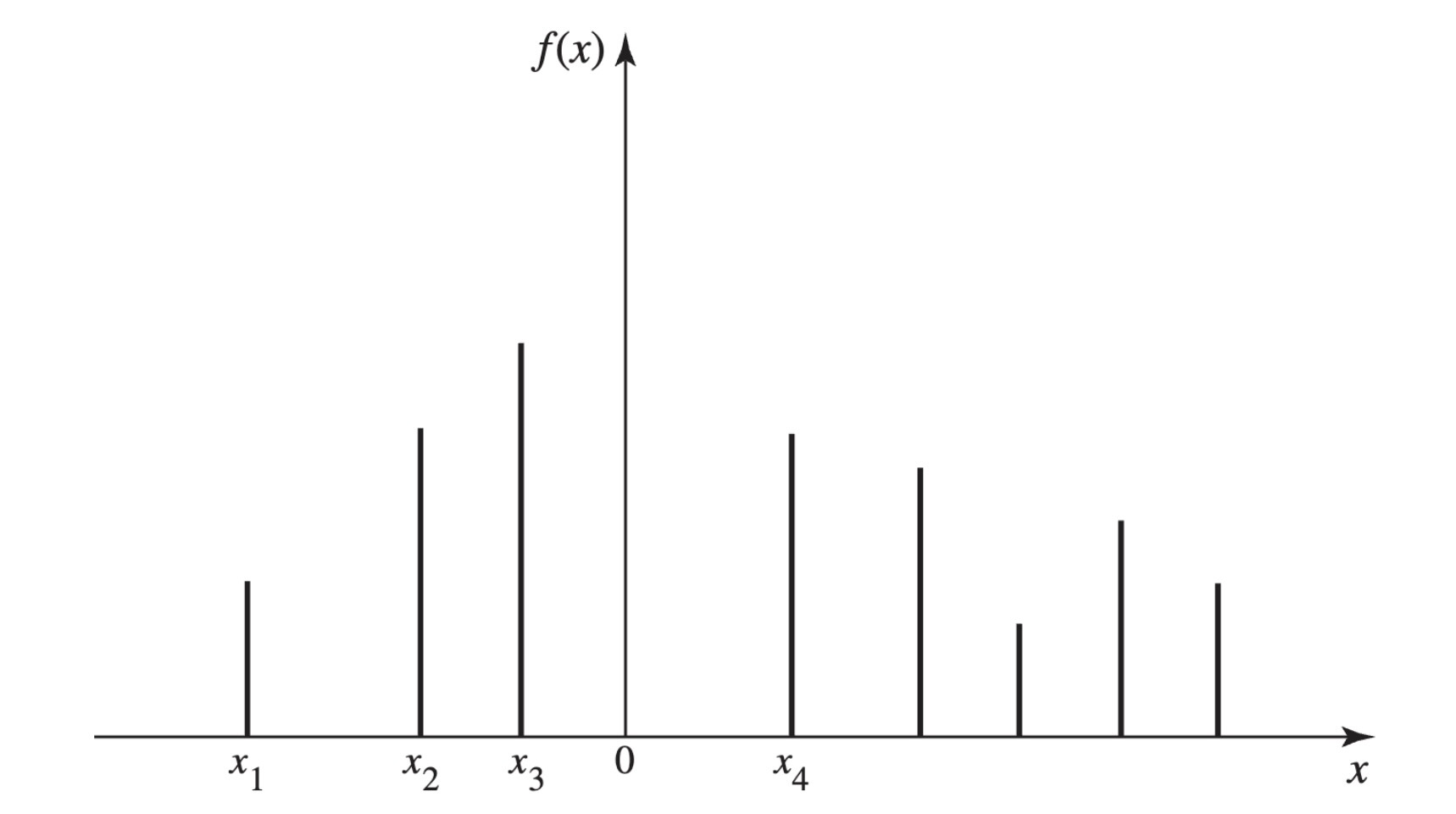

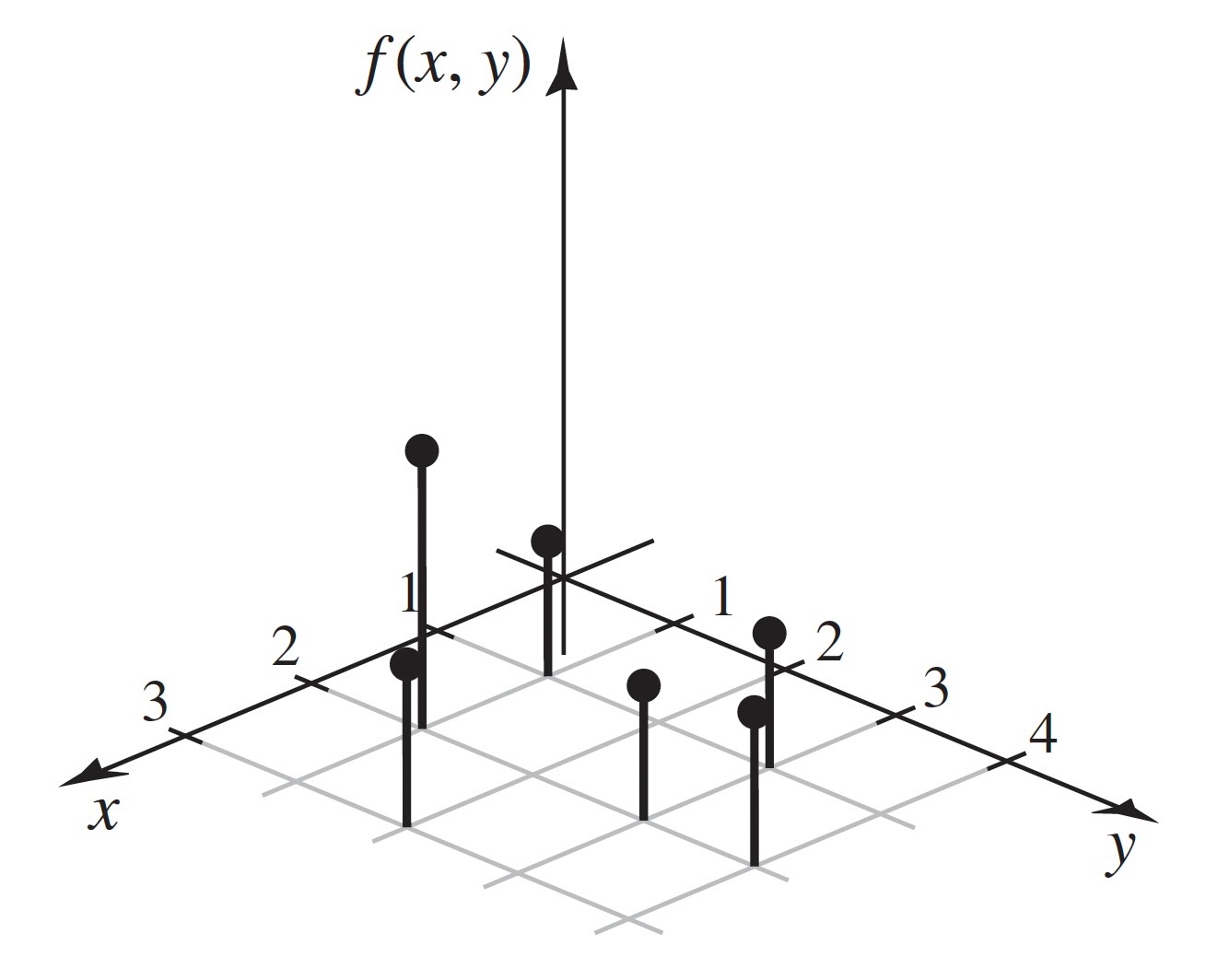

A typical pmf is sketched in Figure 3.3, in which each vertical segment represents the value of \(f(x)\) corresponding to a possible value \(x\). The sum of the heights of the vertical segments in Figure 3.3 must be 1.

Theorem 3.2 shows that the pmf of a discrete random variable characterizes its distribution, and it allows us to dispense with the general definition of distribution when we are discussing discrete random variables.

Theorem 3.2 (Theorem 3.1.2) If \(X\) has a discrete distribution, the probability of each subset \(C\) of the real line can be determined from the relation

\[ \Pr(X \in C) = \sum_{x_i \in C}f(x_i). \]

Some random variables have distributions that appear so frequently that the distributions are given names. The random variable \(Z\) in Example 3.6 is one such example.

Definition 3.5 (Definition 3.1.5: Bernoulli Distribution/Random Variable (DeGroot and Schervish, p. 97)) A random variable \(Z\) that takes only two values \(0\) and \(1\) with \(\Pr(Z = 1) = p\) has the Bernoulli distribution with parameter \(p\). We also say that \(Z\) is a Bernoulli random variable with parameter \(p\).

The \(Z\) in Example 3.6 has the Bernoulli distribution with parameter 0.65252. It is easy to see that the name of each Bernoulli distribution is enough to allow us to compute the pmf, which, in turn, allows us to characterize its distribution.

We conclude this section with illustrations of two additional families of discrete distributions that arise often enough to have names.

Uniform Distributions on Integers

Example 3.8 (Example 3.1.8: Daily Numbers) A popular state lottery game requires participants to select a three-digit number (leading \(0\)s allowed). Then three balls, each with one digit, are chosen at random from well-mixed bowls. The sample space here consists of all triples \((i_1, i_2, i_3)\) where \(i_j \in \{0, \ldots, 9\}\) for \(j = 1, 2, 3\). If \(s = (i_1, i_2, i_3)\), define \(X(s) = 100i_1 + 10i_2 + i_3\). For example, \(X(0, 1, 5) = 15\). It is easy to check that \(\Pr(X = x) = 0.001\) for each integer \(x \in \{0, 1, \ldots, 999\}\).

Definition 3.6 (Definition 3.1.6: Uniform Distribution on Integers (DeGroot and Schervish, p. 97)) Let \(a \leq b\) be integers. Suppose that the value of a random variable \(X\) is equally likely to be each of the integers \(a, \ldots, b\). Then we say that \(X\) has the uniform distribution on the integers \(a, \ldots, b\).

The \(X\) in Example 3.8 has the uniform distribution on the integers \(0, 1, \ldots, 999\). A uniform distribution on a set of \(k\) integers has probability \(1/k\) on each integer. If \(b > a\), there are \(b − a + 1\) integers from \(a\) to \(b\) including \(a\) and \(b\). The next result follows immediately from what we have just seen, and it illustrates how the name of the distribution characterizes the distribution.

Theorem 3.3 (Theorem 3.1.3) If \(X\) has the uniform distribution on the integers \(a, \ldots, b\), the pmf of \(X\) is

\[ f(x) = \begin{cases} \frac{1}{b-a+1} &\text{for }x = a, \ldots, b, \\ 0 &\text{otherwise.} \end{cases} \]

The uniform distribution on the integers \(a, \ldots, b\) represents the outcome of an experiment that is often described by saying that one of the integers \(a, \ldots, b\) is chosen at random. In this context, the phrase “at random” means that each of the \(b − a + 1\) integers is equally likely to be chosen. In this same sense, it is not possible to choose an integer at random from the set of all positive integers, because it is not possible to assign the same probability to every one of the positive integers and still make the sum of these probabilities equal to 1. In other words, a uniform distribution cannot be assigned to an infinite sequence of possible values, but such a distribution can be assigned to any finite sequence.

Note: Random Variables Can Have the Same Distribution without Being the Same Random Variable. Consider two consecutive daily number draws as in Example 3.8. The sample space consists of all 6-tuples \((i_1, \ldots, i_6)\), where the first three coordinates are the numbers drawn on the first day and the last three are the numbers drawn on the second day (all in the order drawn). If \(s = (i_1, \ldots, i_6)\), let \(X_1(s) = 100i_1 + 10i_2 + i_3\) and let \(X_2(s) = 100i_4 + 10i_5 + i_6\). It is easy to see that \(X_1\) and \(X_2\) are different functions of \(s\) and are not the same random variable. Indeed, there is only a small probability that they will take the same value. But they have the same distribution because they assume the same values with the same probabilities. If a businessman has 1000 customers numbered \(0, \ldots, 999\), and he selects one at random and records the number \(Y\), the distribution of \(Y\) will be the same as the distribution of \(X_1\) and of \(X_2\), but \(Y\) is not like \(X_1\) or \(X_2\) in any other way.

Binomial Distributions

Example 3.9 (Example 3.1.9: Defective Parts (p. 98)) Consider again Example 2.18. In that example, a machine produces a defective item with probability \(p\) (\(0 < p < 1\)) and produces a nondefective item with probability \(1 − p\). We assumed that the events that the different items were defective were mutually independent. Suppose that the experiment consists of examining \(n\) of these items. Each outcome of this experiment will consist of a list of which items are defective and which are not, in the order examined. For example, we can let 0 stand for a nondefective item and 1 stand for a defective item. Then each outcome is a string of \(n\) digits, each of which is 0 or 1. To be specific, if, say, \(n = 6\), then some of the possible outcomes are

\[ \texttt{010010}, \texttt{100100}, \texttt{000011}, \texttt{110000}, \texttt{100001}, \texttt{000000}, \text{etc.} \tag{3.3}\]

We will let \(X\) denote the number of these items that are defective. Then the random variable \(X\) will have a discrete distribution, and the possible values of \(X\) will be \(0, 1, 2, \ldots, n\). For example, the first four outcomes listed in Equation 3.3 all have \(X(s) = 2\). The last outcome listed has \(X(s) = 0\).

Example 3.9 is a generalization of Example 2.18 with \(n\) items inspected rather than just six, and rewritten in the notation of random variables. For \(x = 0, 1, \ldots, n\), the probability of obtaining each particular ordered sequence of \(n\) items containing exactly \(x\) defectives and \(n − x\) nondefectives is \(p^x(1 − p)^{n−x}\), just as it was in Example 2.18. Since there are \(\binom{n}{x}\) different ordered sequences of this type, it follows that

\[ \Pr(X = x) = \binom{n}{x}p^x(1-p)^{n-x}. \]

Therefore, the pmf of \(X\) will be as follows:

\[ f(x) = \begin{cases} \binom{n}{x}p^x(1-p)^{n-x} &\text{ for }x = 0, 1, \ldots, n, \\ 0 &\text{otherwise.} \end{cases} \tag{3.4}\]

Definition 3.7 (Definition 3.1.7: Binomial Distribution/Random Variable) The discrete distribution represented by the pmf in Equation 3.4 is called the binomial distribution with parameters \(n\) and \(p\). A random variable with this distribution is said to be a binomial random variable with parameters \(n\) and \(p\).

The reader should be able to verify that the random variable \(X\) in Example 3.4, the number of heads in a sequence of 10 independent tosses of a fair coin, has the binomial distribution with parameters \(10\) and \(1/2\).

Since the name of each binomial distribution is sufficient to construct its pmf, it follows that the name is enough to identify the distribution. The name of each distribution includes the two parameters. The binomial distributions are very important in probability and statistics and will be discussed further in later chapters of this book.

A short table of values of certain binomial distributions is given at the end of this book. It can be found from this table, for example, that if \(X\) has the binomial distribution with parameters \(n = 10\) and \(p = 0.2\), then \(\Pr(X = 5) = 0.0264\) and \(\Pr(X \geq 5) = 0.0328\).

As another example, suppose that a clinical trial is being run. Suppose that the probability that a patient recovers from her symptoms during the trial is \(p\) and that the probability is \(1 − p\) that the patient does not recover. Let \(Y\) denote the number of patients who recover out of \(n\) independent patients in the trial. Then the distribution of \(Y\) is also binomial with parameters \(n\) and \(p\). Indeed, consider a general experiment that consists of observing \(n\) independent repititions (trials) with only two possible results for each trial. For convenience, call the two possible results “success” and “failure.” Then the distribution of the number of trials that result in success will be binomial with parameters \(n\) and \(p\), where \(p\) is the probability of success on each trial.

Note: Names of Distributions. In this section, we gave names to several families of distributions. The name of each distribution includes any numerical parameters that are part of the definition. For example, the random variable \(X\) in Example 3.4 has the binomial distribution with parameters \(10\) and \(1/2\). It is a correct statement to say that \(X\) has a binomial distribution or that \(X\) has a discrete distribution, but such statements are only partial descriptions of the distribution of \(X\). Such statements are not sufficient to name the distribution of \(X\), and hence they are not sufficient as answers to the question “What is the distribution of \(X\)?” The same considerations apply to all of the named distributions that we introduce elsewhere in the book. When attempting to specify the distribution of a random variable by giving its name, one must give the full name, including the values of any parameters. Only the full name is sufficient for determining the distribution.

Summary

A random variable is a real-valued function defined on a sample space. The distribution of a random variable \(X\) is the collection of all probabilities \(\Pr(X \in C)\) for all subsets \(C\) of the real numbers such that \(\{X \in C\}\) is an event. A random variable \(X\) is discrete if there are at most countably many possible values for \(X\). In this case, the distribution of \(X\) can be characterized by the probability mass function pmf of \(X\), namely, \(f(x) = \Pr(X = x)\) for \(x\) in the set of possible values. Some distributions are so famous that they have names. One collection of such named distributions is the collection of uniform distributions on finite sets of integers. A more famous collection is the collection of binomial distributions whose parameters are \(n\) and \(p\), where \(n\) is a positive integer and \(0 < p < 1\), having pmf Equation 3.4. The binomial distribution with parameters \(n = 1\) and \(p\) is also called the Bernoulli distribution with parameter \(p\). The names of these distributions also characterize the distributions.

Exercises

Exercise 3.1 (Exercise 3.1.1) Suppose that a random variable \(X\) has the uniform distribution on the integers \(10, \ldots, 20\). Find the probability that \(X\) is even.

Exercise 3.2 (Exercise 3.1.2) Suppose that a random variable \(X\) has a discrete distribution with the following pmf:

\[ f(x) = \begin{cases} cx &\text{for }x = 1, \ldots, 5, \\ 0 &\text{otherwise.} \end{cases} \]

Determine the value of the constant \(c\).

Exercise 3.3 (Exercise 3.1.3) Suppose that two balanced dice are rolled, and let \(X\) denote the absolute value of the difference between the two numbers that appear. Determine and sketch the pmf of \(X\).

Exercise 3.4 (Exercise 3.1.4) Suppose that a fair coin is tossed 10 times independently. Determine the pmf of the number of heads that will be obtained.

Exercise 3.5 (Exercise 3.1.5) Suppose that a box contains seven red balls and three blue balls. If five balls are selected at random, without replacement, determine the pmf of the number of red balls that will be obtained.

Exercise 3.6 (Exercise 3.1.6) Suppose that a random variable \(X\) has the binomial distribution with parameters \(n = 15\) and \(p = 0.5\). Find \(\Pr(X < 6)\).

Exercise 3.7 (Exercise 3.1.7) Suppose that a random variable \(X\) has the binomial distribution with parameters \(n = 8\) and \(p = 0.7\). Find \(\Pr(X \geq 5)\) by using the table given at the end of this book. Hint: Use the fact that \(\Pr(X \geq 5) = \Pr(Y \leq 3)\), where \(Y\) has the binomial distribution with parameters \(n = 8\) and \(p = 0.3\).

Exercise 3.8 (Exercise 3.1.8) If 10 percent of the balls in a certain box are red, and if 20 balls are selected from the box at random, with replacement, what is the probability that more than three red balls will be obtained?

Exercise 3.9 (Exercise 3.1.9) Suppose that a random variable \(X\) has a discrete distribution with the following pmf:

\[ f(x) = \begin{cases} \frac{c}{2^x} &\text{for }x = 0, 1, 2, \ldots, \\ 0 &\text{otherwise.} \end{cases} \]

Find the value of the constant \(c\).

Exercise 3.10 (Exercise 3.1.10) A civil engineer is studying a left-turn lane that is long enough to hold seven cars. Let \(X\) be the number of cars in the lane at the end of a randomly chosen red light. The engineer believes that the probability that \(X = v\) is proportional to \((v + 1)(8 − v)\) for \(v = 0, \ldots, 7\) (the possible values of \(X\)).

- Find the pmf of \(X\).

- Find the probability that \(X\) will be at least 5.

Exercise 3.11 (Exercise 3.1.11) Show that there does not exist any number \(c\) such that the following function would be a pmf:

\[ f(v) = \begin{cases} \frac{c}{v} &\text{for }v = 1, 2, \ldots, \\ 0 &\text{otherwise.} \end{cases} \]

3.2 Continuous Distributions

Next, we focus on random variables that can assume every value in an interval (bounded or unbounded). If a random variable \(X\) has associated with it a function \(f\) such that the integral of \(f\) over each interval gives the probability that X is in the interval, then we call \(f\) the probability density function (pdf) of \(X\) and we say that \(X\) has a continuous distribution.

The Probability Density Function

Example 3.10 (Example 3.2.1: Demands for Utilities) In Example 3.5, we determined the distribution of the demand for water, \(X\). From Figure 3.2, we see that the smallest possible value of \(X\) is 4 and the largest is 200. For each interval \(C = [c_0, c_1] \subset [4, 200]\), Equation 3.2 says that

\[ \Pr(c_0 \leq X \leq c_1) = \frac{149(c_1-c_0)}{29204} = \frac{c_1 - c_0}{196} = \int_{c_0}^{c_1}\frac{1}{196}dx. \]

So, if we define

\[ f(v) = \begin{cases} \frac{1}{196} &\text{if }4 \leq x \leq 200, \\ 0 &\text{otherwise,} \end{cases} \tag{3.5}\]

we have that

\[ \Pr(c_0 \leq X \leq c_1) = \int_{c_0}^{c_1}f(x)dx. \tag{3.6}\]

Because we defined \(f(x)\) to be 0 for \(x\) outside of the interval \([4, 200]\), we see that Equation 3.6 holds for all \(c_0 \leq c_1\), even if \(c_0 = -\infty\) and/or \(c_1 = \infty\).

The water demand \(X\) in Example 3.10 is an example of the following.

Definition 3.8 (Definition 3.2.1: Continuous Distribution / Random Variable) We say that a random variable \(X\) has a continuous distribution or that \(X\) is a continuous random variable if there exists a nonnegative function \(f\), defined on the real line, such that for every interval of real numbers (bounded or unbounded), the probability that \(X\) takes a value in the interval is the integral of \(f\) over the interval.

For example, in the situation described in Definition 3.8, for each bounded closed interval \([a, b]\),

\[ \Pr(a \leq X \leq b) = \int_{a}^{b}f(x)dx. \tag{3.7}\]

Similarly, \(\Pr(X \geq a) = \int_{a}^{\infty}f(x)dx\) and \(\Pr(X \leq b) = \int_{-\infty}^{b}f(x)dx\).

We see that the function \(f\) characterizes the distribution of a continuous random variable in much the same way that the probability mass function characterizes the distribution of a discrete random variable. For this reason, the function \(f\) plays an important role, and hence we give it a name.

Definition 3.9 (Definition 3.2.2: Probability Density Function/pdf/Support) If \(X\) has a continuous distribution, the function \(f\) described in Definition 3.8 is called the probability density function (abbreviated pdf) of \(X\). The closure of the set \(\{x \mid f(x) > 0\}\) is called the support of (the distribution of) \(X\).

Example 3.10 demonstrates that the water demand \(X\) has PDF given by Equation 3.5.

Every PDF \(f\) must satisfy the following two requirements:

\[ f(x) \geq 0, \; \text{ for all }x, \tag{3.8}\]

and

\[ \int_{-\infty}^{\infty}f(x)dx = 1. \tag{3.9}\]

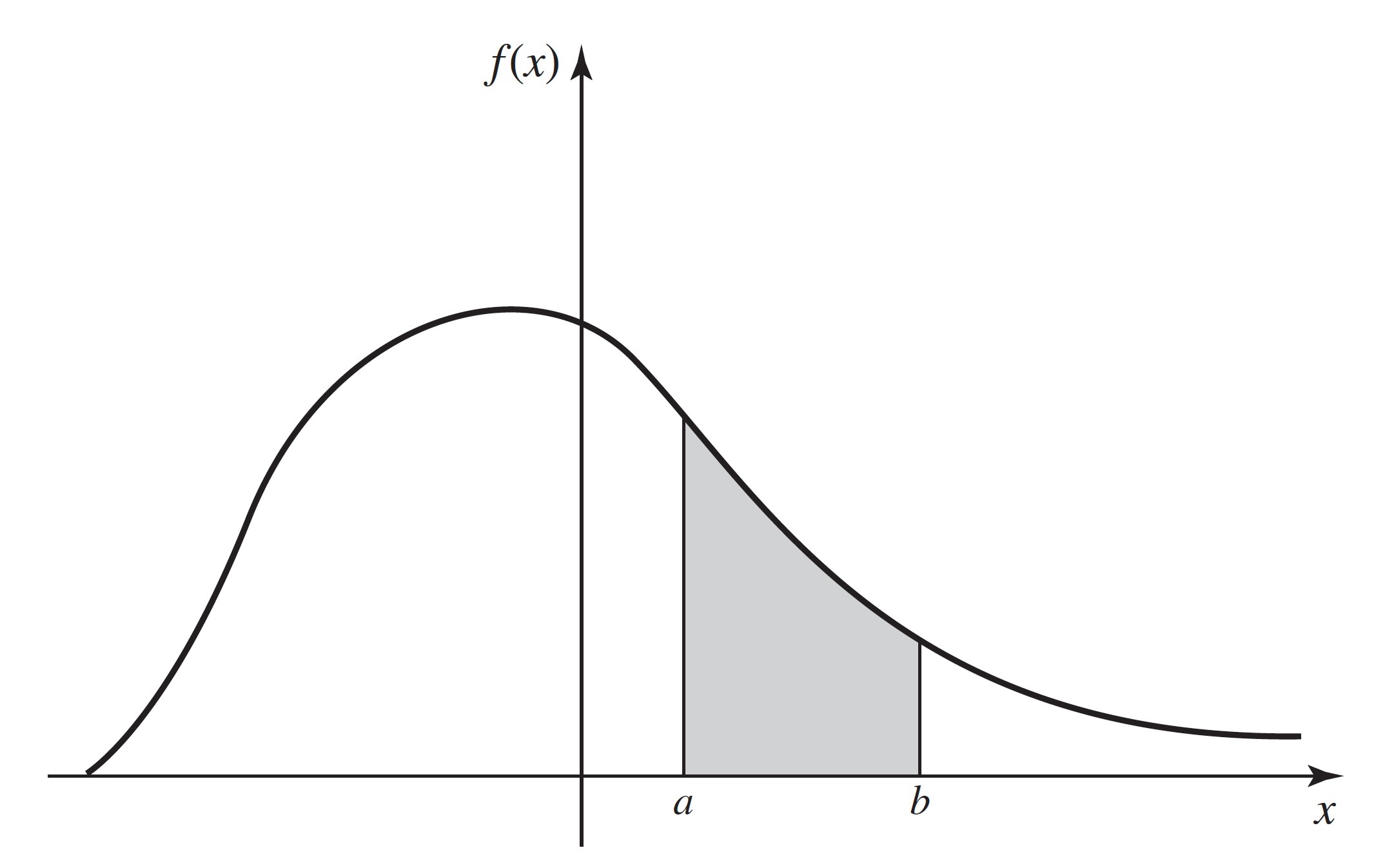

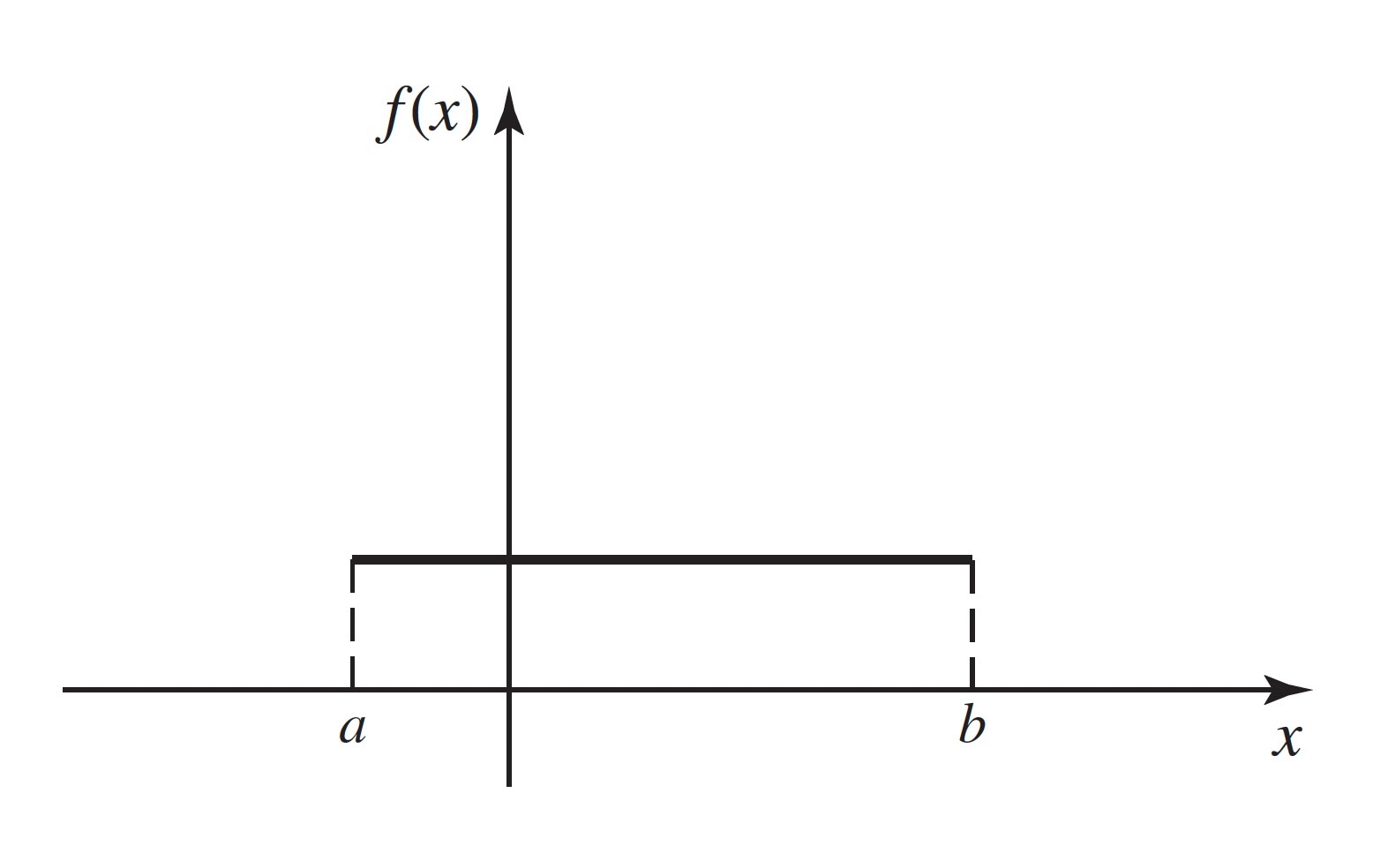

A typical pdf is sketched in Figure 3.4. In that figure, the total area under the curve must be 1, and the value of \(\Pr(a \leq X \leq b)\) is equal to the area of the shaded region.

Note: Continuous Distributions Assign Probability 0 to Individual Values. The integral in Equation 3.7 also equals \(\Pr(a < X \leq b)\) as well as \(\Pr(a < X < b)\) and \(\Pr(a \leq X < b)\). Hence, it follows from the definition of continuous distributions that, if \(X\) has a continuous distribution, \(\Pr(X = a) = 0\) for each number \(a\). As we noted on page 20, the fact that \(\Pr(X = a) = 0\) does not imply that \(X = a\) is impossible. If it did, all values of \(X\) would be impossible and \(X\) couldn’t assume any value. What happens is that the probability in the distribution of \(X\) is spread so thinly that we can only see it on sets like nondegenerate intervals. It is much the same as the fact that lines have 0 area in two dimensions, but that does not mean that lines are not there. The two vertical lines indicated under the curve in Figure 3.4 have 0 area, and this signifies that \(\Pr(X = a) = \Pr(X = b) = 0\). However, for each \(\epsilon > 0\) and each \(a\) such that \(f(a) > 0\), \(\Pr(a − \epsilon \leq X \leq a + \epsilon) \approx 2\epsilon f(a) > 0\).

Nonuniqueness of the PDF

If a random variable \(X\) has a continuous distribution, then \(\Pr(X = x) = 0\) for every individual value \(x\). Because of this property, the values of each pdf can be changed at a finite number of points, or even at certain infinite sequences of points, without changing the value of the integral of the pdf over any subset \(A\). In other words, the values of the pdf of a random variable \(X\) can be changed arbitrarily at many points without affecting any probabilities involving \(X\), that is, without affecting the probability distribution of \(X\). At exactly which sets of points we can change a pdf depends on subtle features of the definition of the Riemann integral. We shall not deal with this issue in this text, and we shall only contemplate changes to pdfs at finitely many points.

To the extent just described, the pdf of a random variable is not unique. In many problems, however, there will be one version of the pdf that is more natural than any other because for this version the pdf will, wherever possible, be continuous on the real line. For example, the pdf sketched in Figure 3.4 is a continuous function over the entire real line. This pdf could be changed arbitrarily at a few points without affecting the probability distribution that it represents, but these changes would introduce discontinuities into the pdf without introducing any apparent advantages.

Throughout most of this book, we shall adopt the following practice: If a random variable \(X\) has a continuous distribution, we shall give only one version of the pdf of \(X\) and we shall refer to that version as the pdf of \(X\), just as though it had been uniquely determined. It should be remembered, however, that there is some freedom in the selection of the particular version of the pdf that is used to represent each continuous distribution. The most common place where such freedom will arise is in cases like Equation 3.5 where the pdf is required to have discontinuities. Without making the function \(f\) any less continuous, we could have defined the pdf in that example so that \(f(4) = f(200) = 0\) instead of \(f(4) = f(200) = 1/196\). Both of these choices lead to the same calculations of all probabilities associated with \(X\), and they are both equally valid. Because the support of a continuous distribution is the closure of the set where the pdf is strictly positive, it can be shown that the support is unique. A sensible approach would then be to choose the version of the pdf that was strictly positive on the support whenever possible.

The reader should note that “continuous distribution” is not the name of a distribution, just as “discrete distribution” is not the name of a distribution. There are many distributions that are discrete and many that are continuous. Some distributions of each type have names that we either have introduced or will introduce later.

We shall now present several examples of continuous distributions and their PDFs.

Uniform Distributions on Intervals

Example 3.11 (Example 3.2.2: Temperature Forecasts) Television weather forecasters announce high and low temperature forecasts as integer numbers of degrees. These forecasts, however, are the results of very sophisticated weather models that provide more precise forecasts that the television personalities round to the nearest integer for simplicity. Suppose that the forecaster announces a high temperature of y. If we wanted to know what temperature \(X\) the weather models actually produced, it might be safe to assume that \(X\) was equally likely to be any number in the interval from \(y − 1/2\) to \(y + 1/2\).

The distribution of \(X\) in Example 3.11 is a special case of the following.

Definition 3.10 (Definition 3.2.3: Uniform Distribution on an Interval) Let \(a\) and \(b\) be two given real numbers such that \(a < b\). Let \(X\) be a random variable such that it is known that \(a \leq X \leq b\) and, for every subinterval of \([a, b]\), the probability that \(X\) will belong to that subinterval is proportional to the length of that subinterval. We then say that the random variable \(X\) has the uniform distribution on the interval \([a, b]\).

A random variable \(X\) with the uniform distribution on the interval \([a, b]\) represents the outcome of an experiment that is often described by saying that a point is chosen at random from the interval \([a, b]\). In this context, the phrase “at random” means that the point is just as likely to be chosen from any particular part of the interval as from any other part of the same length.

Theorem 3.4 (Theorem 3.2.1: Uniform Distribution PDF) If \(X\) has the uniform distribution on an interval \([a, b]\), then the pdf of \(X\) is

\[ f(x) = \begin{cases} \frac{1}{b - a} &\text{for }a \leq x \leq b, \\ 0 &\text{otherwise.} \end{cases} \tag{3.10}\]

Proof. \(X\) must take a value in the interval \([a, b]\). Hence, the pdf \(f(x)\) of \(X\) must be 0 outside of \([a, b]\). Furthermore, since any particular subinterval of \([a, b]\) having a given length is as likely to contain \(X\) as is any other subinterval having the same length, regardless of the location of the particular subinterval in \([a, b]\), it follows that \(f(x)\) must be constant throughout \([a, b]\), and that interval is then the support of the distribution. Also,

\[ \int_{-\infty}^{\infty}f(x)dx = \int_{a}^{b}f(x)dx = 1. \tag{3.11}\]

Therefore, the constant value of \(f(x)\) throughout \([a, b]\) must be \(1/(b − a)\), and the pdf of \(X\) must be Equation 3.10.

The pdf Equation 3.10 is sketched in Figure 3.5. As an example, the random variable \(X\) (demand for water) in Example 3.10 has the uniform distribution on the interval \([4, 200]\).

Note: Density Is Not Probability. The reader should note that the pdf in Equation 3.10 can be greater than 1, particularly if \(b − a < 1\). Indeed, pdfs can be unbounded, as we shall see in Example 3.15. The pdf of \(X\), \(f(x)\), itself does not equal the probability that \(X\) is near \(x\). The integral of \(f\) over values near \(x\) gives the probability that \(X\) is near \(x\), and the integral is never greater than 1.

It is seen from Equation 3.10 that the pdf representing a uniform distribution on a given interval is constant over that interval, and the constant value of the pdf is the reciprocal of the length of the interval. It is not possible to define a uniform distribution over an unbounded interval, because the length of such an interval is infinite.

Consider again the uniform distribution on the interval \([a, b]\). Since the probability is 0 that one of the endpoints \(a\) or \(b\) will be chosen, it is irrelevant whether the distribution is regarded as a uniform distribution on the closed interval \(a \leq x \leq b\), or as a uniform distribution on the open interval \(a < x < b\), or as a uniform distribution on the half-open and half-closed interval \((a, b]\) in which one endpoint is included and the other endpoint is excluded.

For example, if a random variable \(X\) has the uniform distribution on the interval \([−1, 4]\), then the pdf of \(X\) is

\[ f(x) = \begin{cases} 1/5 &\text{for }-1 \leq x \leq 4, \\ 0 &\text{otherwise.} \end{cases} \]

Furthermore,

\[ \Pr(0 \leq X < 2) = \int_{0}^{2}f(x)dx = \frac{2}{5}. \]

Notice that we defined the pdf of \(X\) to be strictly positive on the closed interval \([−1, 4]\) and 0 outside of this closed interval. It would have been just as sensible to define the pdf to be strictly positive on the open interval \((−1, 4)\) and 0 outside of this open interval. The probability distribution would be the same either way, including the calculation of \(\Pr(0 \leq X < 2)\) that we just performed. After this, when there are several equally sensible choices for how to define a pdf, we will simply choose one of them without making any note of the other choices.

Other Continuous Distributions

Example 3.12 (Example 3.2.3: Incompletely Specified pdf) Suppose that the pdf of a certain random variable \(X\) has the following form:

\[ f(x) = \begin{cases} cx &\text{for }0 < x < 4, \\ 0 &\text{otherwise,} \end{cases} \]

where \(c\) is a given constant. We shall determine the value of \(c\).

For every pdf, it must be true that \(\int_{-\infty}^{\infty}f(x) = 1\). Therefore, in this example,

\[ \int_{0}^{4}cx~dx = 8c = 1. \]

Hence, \(c = 1/8\).

Note: Calculating Normalizing Constants. The calculation in Example 3.12 illustrates an important point that simplifies many statistical results. The pdf of \(X\) was specified without explicitly giving the value of the constant \(c\). However, we were able to figure out what was the value of \(c\) by using the fact that the integral of a pdf must be 1. It will often happen, especially in ?sec-8 where we find sampling distributions of summaries of observed data, that we can determine the pdf of a random variable except for a constant factor. That constant factor must be the unique value such that the integral of the pdf is 1, even if we cannot calculate it directly.

Example 3.13 (Example 3.2.4: Calculating Probabilities from a PDF (p. 105)) Suppose that the PDF of \(X\) is as in Example 3.12, namely,

\[ f(x) = \begin{cases} \frac{x}{8} &\text{for }0 < x < 4, \\ 0 &\text{otherwise.} \end{cases} \]

We shall now determine the values of \(\Pr(1 \leq X \leq 2)\) and \(\Pr(X > 2)\). Apply Equation 3.7 to get

\[ \Pr(1 \leq X \leq 2) = \int_1^2 \frac{1}{8}xdx = \frac{3}{16} \]

and

\[ \Pr(X > 2) = \int_2^4 \frac{1}{8}xdx = \frac{3}{4}. \]

Example 3.14 (Example 3.2.5: Unbounded Random Variables) It is often convenient and useful to represent a continuous distribution by a pdf that is positive over an unbounded interval of the real line. For example, in a practical problem, the voltage \(X\) in a certain electrical system might be a random variable with a continuous distribution that can be approximately represented by the pdf

\[ f(x) = \begin{cases} 0 &\text{for }x \leq 0, \\ \frac{1}{(1+x)^2} &\text{for }x > 0. \end{cases} \tag{3.12}\]

It can be verified that the properties Equation 3.8 and Equation 3.9 required of all pdfs are satisfied by \(f(x)\).

Even though the voltage \(X\) may actually be bounded in the real situation, the pdf Equation 3.12 may provide a good approximation for the distribution of \(X\) over its full range of values. For example, suppose that it is known that the maximum possible value of \(X\) is 1000, in which case \(\Pr(X > 1000) = 0\). When the pdf Equation 3.12 is used, we compute \(\Pr(X > 1000) = 0.001\). If Equation 3.12 adequately represents the variability of \(X\) over the interval \((0, 1000)\), then it may be more convenient to use the pdf Equation 3.12 than a pdf that is similar to Equation 3.12 for \(x \leq 1000\), except for a new normalizing constant, and is 0 for \(x > 1000\). This can be especially true if we do not know for sure that the maximum voltage is only 1000.

Example 3.15 (Example 3.2.6: Unbounded PDFs) Since a value of a PDF is a probability density, rather than a probability, such a value can be larger than \(1\). In fact, the values of the following PDF are unbounded in the neighborhood of \(x = 0\):

\[ f(x) = \begin{cases} \frac{2}{3}x^{-1/3} &\text{for }0 < x < 1, \\ 0 &\text{otherwise.} \end{cases} \tag{3.13}\]

It can be verified that even though the PDF Equation 3.13 is unbounded, it satisfies the properties Equation 3.8 and Equation 3.9 required of a PDF.

Mixed Distributions

Most distributions that are encountered in practical problems are either discrete or continuous. We shall show, however, that it may sometimes be necessary to consider a distribution that is a mixture of a discrete distribution and a continuous distribution.

Example 3.16 (Example 3.2.7: Truncated Voltage (DeGroot and Schervish, p. 106)) Suppose that in the electrical system considered in Example 3.14, the voltage \(X\) is to be measured by a voltmeter that will record the actual value of \(X\) if \(X \leq 3\) but will simply record the value \(3\) if \(X > 3\). If we let \(Y\) denote the value recorded by the voltmeter, then the distribution of \(Y\) can be derived as follows.

First, \(\Pr(Y = 3) = \Pr(X \geq 3) = 1/4\). Since the single value \(Y = 3\) has probability \(1/4\), it follows that \(\Pr(0 < Y < 3) = 3/4\). Furthermore, since \(Y = X\) for \(0 < X < 3\), this probability \(3/4\) for \(Y\) is distributed over the interval \((0, 3)\) according to the same PDF (3.2.8) as that of \(X\) over the same interval. Thus, the distribution of \(Y\) is specified by the combination of a PDF over the interval \((0, 3)\) and a positive probability at the point \(Y = 3\).

Summary

A continuous distribution is characterized by its probability density function (PDF). A nonnegative function \(f\) is the PDF of the distribution of \(X\) if, for every interval \([a, b]\), \(\Pr(a \leq X \leq b) = \int_a^b f(x)dx\). Continuous random variables satisfy \(\Pr(X = x) = 0\) for every value \(x\). If the PDF of a distribution is constant on an interval \([a, b]\) and is 0 off the interval, we say that the distribution is uniform on the interval \([a, b]\).

Exercises

Exercise 3.12 (Exercise 3.2.1) Let X be a random variable with the PDF specified in Example 3.15. Compute \(\Pr(X \leq 8/27)\).

Exercise 3.13 (Exercise 3.2.2) Suppose that the PDF of a random variable \(X\) is as follows:

\[ f(x) = \begin{cases} \frac{4}{3}(1-x^3) &\text{for }0 < x < 1, \\ 0 &\text{otherwise.} \end{cases} \]

Sketch this PDF and determine the values of the following probabilities:

\(\Pr\left(X < \frac{1}{2}\right)\)

\(\Pr\left(\frac{1}{4} < X < \frac{3}{4}\right)\)

\(\Pr\left(X > \frac{1}{3}\right)\)

Exercise 3.14 (Exercise 3.2.3) Suppose that the PDF of a random variable \(X\) is as follows:

\[ f(x) = \begin{cases} \frac{1}{36}(9 - x^2) &\text{for }-3 \leq x \leq 3, \\ 0 &\text{otherwise.} \end{cases} \]

Sketch this pdf and determine the values of the following probabilities:

\(\Pr(X < 0)\)

\(\Pr(−1 \leq X \leq 1)\)

\(\Pr(X > 2)\).

Exercise 3.15 (Exercise 3.2.4)

- Suppose that the pdf of a random variable \(X\) is as follows:

\[ f(x) = \begin{cases} cx^2 &\text{for }1 \leq x \leq 2, \\ 0 &\text{otherwise.} \end{cases} \]

- Find the value of the constant \(c\) and sketch the pdf.

- Find the value of \(\Pr(X > 3/2)\).

Exercise 3.16 (Exercise 3.2.5) Suppose that the pdf of a random variable \(X\) is as follows:

\[ f(x) = \begin{cases} \frac{1}{8}x &\text{for }0 \leq x \leq 4, 0 &\text{otherwise.} \end{cases} \]

- Find the value of \(t\) such that \(\Pr(X \leq t) = 1/4\).

- Find the value of \(t\) such that \(\Pr(X \geq t) = 1/2\).

Exercise 3.17 (Exercise 3.2.6) Let \(X\) be a random variable for which the pdf is as given in Exercise 3.16. After the value of \(X\) has been observed, let \(Y\) be the integer closest to \(X\). Find the pmf of the random variable \(Y\).

Exercise 3.18 (Exercise 3.2.7) Suppose that a random variable \(X\) has the uniform distribution on the interval \([−2, 8]\). Find the pdf of \(X\) and the value of \(\Pr(0 < X < 7)\).

Exercise 3.19 (Exercise 3.2.8) Suppose that the pdf of a random variable \(X\) is as follows:

\[ f(x) = \begin{cases} ce^{-2x} &\text{for }x > 0, \\ 0 &\text{otherwise.} \end{cases} \]

Find the value of the constant \(c\) and sketch the PDF.

Find the value of \(\Pr(1 < X < 2)\).

Exercise 3.20 (Exercise 3.2.9) Show that there does not exist any number \(c\) such that the following function \(f(x)\) would be a pdf:

\[ f(x) = \begin{cases} \frac{c}{1 + x} &\text{for }x > 0, \\ 0 &\text{otherwise.} \end{cases} \]

Exercise 3.21 (Exercise 3.2.10) Suppose that the pdf of a random variable \(X\) is as follows:

\[ f(x) = \begin{cases} \frac{c}{(1-x)^{1/2}} &\text{for }0 < x < 1, \\ 0 &\text{otherwise.} \end{cases} \]

- Find the value of the constant \(c\) and sketch the pdf.

- Find the value of \(\Pr(X \leq 1/2)\).

Exercise 3.22 (Exercise 3.2.11) Show that there does not exist any number \(c\) such that the following function \(f(x)\) would be a pdf:

\[ f(x) = \begin{cases} \frac{c}{x} &\text{for }0 < x < 1, \\ 0 &\text{otherwise.} \end{cases} \]

Exercise 3.23 (Exercise 3.2.12) In Example 3.3, determine the distribution of the random variable \(Y\), the electricity demand. Also, find \(\Pr(Y < 50)\).

Exercise 3.24 (Exercise 3.2.13) An ice cream seller takes 20 gallons of ice cream in her truck each day. Let \(X\) stand for the number of gallons that she sells. The probability is \(0.1\) that \(X = 20\). If she doesn’t sell all 20 gallons, the distribution of \(X\) follows a continuous distribution with a pdf of the form

\[ f(x) = \begin{cases} cx &\text{for }0 < x < 20, \\ 0 &\text{otherwise,} \end{cases} \]

where \(c\) is a constant that makes \(\Pr(X < 20) = 0.9\). Find the constant \(c\) so that \(\Pr(X < 20) = 0.9\) as described above.

3.3 The Cumulative Distribution Function

Although a discrete distribution is characterized by its PMF and a continuous distribution is characterized by its PDF, every distribution has a common characterization through its (cumulative) distribution function (CDF). The inverse of the CDF is called the quantile function, and it is useful for indicating where the probability is located in a distribution.

Example 3.17 (Example 3.3.1: Voltage (pp. 107-108)) Consider again the voltage \(X\) from Example 3.14. The distribution of \(X\) is characterized by the pdf in Equation 3.12. An alternative characterization that is more directly related to probabilities associated with \(X\) is obtained from the following function:

\[ \begin{align*} F(x) &= \Pr(X \leq x) = \int_{-\infty}^{x}f(y)~dy = \begin{cases} 0 &\text{for }x \leq 0, \\ \int_{0}^{x}\frac{dy}{(1+y)^2} &\text{for }x > 0 \end{cases} \\ &= \begin{cases} 0 &\text{for }x \leq 0, \\ 1 - \frac{1}{1+x} &\text{for }x > 0. \end{cases} \end{align*} \]

So, for example, \(\Pr(X \leq 3) = F(3) = 3/4\).

Definition and Basic Properties

Definition 3.11 (Definition 3.3.1: Cumulative Distribution Function (DeGroot and Schervish, p. 108)) The Cumulative Distribution Function (abbreviated CDF) \(F\) of a random variable \(X\) is the function

\[ F(x) = \Pr(X \leq x) \; \text{ for } -\infty < x < \infty. \tag{3.14}\]

It should be emphasized that the cumulative distribution function is defined as above for every random variable \(X\), regardless of whether the distribution of \(X\) is discrete, continuous, or mixed. For the continuous random variable in Example 3.17, the CDF was calculated in ?eq-3-3-1. Here is a discrete example:

Example 3.18 (Example 3.3.2: Bernoulli CDF) Let \(X\) have the Bernoulli distribution with parameter \(p\) defined in Definition 3.5. Then \(\Pr(X = 0) = 1 − p\) and \(\Pr(X = 1) = p\). Let \(F\) be the CDF of \(X\). It is easy to see that \(F(x) = 0\) for \(x < 0\) because \(X \geq 0\) for sure. Similarly, \(F(x) = 1\) for \(x \geq 1\) because \(X \leq 1\) for sure. For \(0 \leq x < 1\), \(\Pr(X \leq x) = Pr(X = 0) = 1 − p\) because 0 is the only possible value of \(X\) that is in the interval \((−\infty, x]\). In summary,

\[ F(x) = \begin{cases} 0 &\text{for }x < 0, \\ 1 - p &\text{for }0 \leq x < 1, \\ 1 &\text{for }x \geq 1. \end{cases} \]

We shall soon see (Theorem 3.6) that the CDF allows calculation of all interval probabilities; hence, it characterizes the distribution of a random variable. It follows from Equation 3.14 that the CDF of each random variable \(X\) is a function \(F\) defined on the real line. The value of \(F\) at every point \(x\) must be a number \(F(x)\) in the interval \([0, 1]\) because \(F(x)\) is the probability of the event \(\{X \leq x\}\). Furthermore, it follows from Equation 3.14 that the CDF of every random variable \(X\) must have the following three properties.

Proposition 3.1 (Property 3.3.1: Nondecreasing.) The function \(F(x)\) is nondecreasing as \(x\) increases; that is, if \(x_1 < x_2\), then \(F(x_1) \leq F(x_2)\).

Proof. If \(x_1 < x_2\), then the event \(\{X \leq x_1\}\) is a subset of the event \(\{X \leq x_2\}\). Hence, \(\Pr\{X \leq x_1\} \leq \Pr\{X \leq x_2\}\) according to Theorem 1.15.

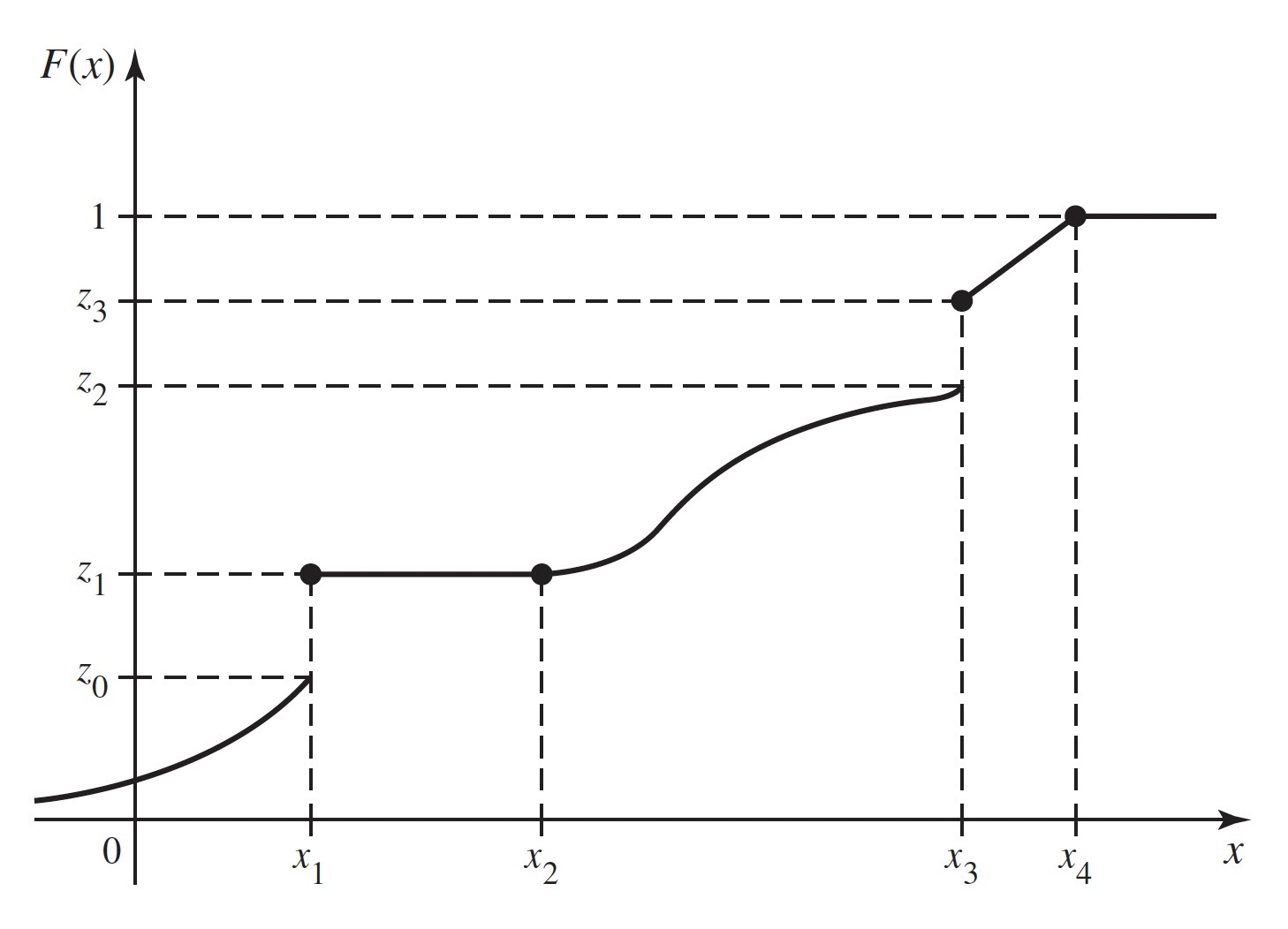

An example of a CDF is sketched in Figure 3.6. It is shown in that figure that \(0 \leq F(x) \leq 1\) over the entire real line. Also, \(F(x)\) is always nondecreasing as \(x\) increases, although \(F(x)\) is constant over the interval \(x_1 \leq x \leq x_2\) and for \(x \geq x_4\).

Proposition 3.2 (Property 3.3.2: Limits at \(\pm \infty\).) \(\lim_{x \rightarrow -\infty} F(x) = 0\) and \(\lim_{x \rightarrow \infty} F(x) = 1\).

Proof. As in the proof of Proposition 3.1, note that \(\{X \leq x_1\} \subset \{X \leq x_2\}\) whenever \(x_1 < x_2\). The fact that \(\Pr(X \leq x)\) approaches 0 as \(x \rightarrow -\infty\) now follows from Exercise 1.94. Similarly, the fact that \(\Pr(X \leq x)\) approaches 1 as \(x \rightarrow \infty\) follows from Exercise 1.93.

The limiting values specified in Proposition 3.2 are indicated in Figure 3.6. In this figure, the value of \(F(x)\) actually becomes 1 at \(x = x_4\) and then remains 1 for \(x > x_4\). Hence, it may be concluded that \(\Pr(X \leq x_4) = 1\) and \(\Pr(X > x_4) = 0\). On the other hand, according to the sketch in Figure 3.6, the value of \(F(x)\) approaches 0 as \(x \rightarrow −\infty\), but does not actually become 0 at any finite point \(x\). Therefore, for every finite value of \(x\), no matter how small, \(\Pr(X \leq x) > 0\).

A CDF need not be continuous. In fact, the value of \(F(x)\) may jump at any finite or countable number of points. In Figure 3.6, for instance, such jumps or points of discontinuity occur where \(x = x_1\) and \(x = x_3\). For each fixed value \(x\), we shall let \(F(x^-)\) denote the limit of the values of \(F(y)\) as \(y\) approaches \(x\) from the left, that is, as \(y\) approaches \(x\) through values smaller than \(x\). In symbols,

\[ F(x^{-}) = \lim_{y \rightarrow x,~y < x}F(y). \]

Similarly, we shall define \(F(x^+)\) as the limit of the values of \(F(y)\) as \(y\) approaches \(x\) from the right. Thus,

\[ F(x^+) = \lim_{y \rightarrow x,~y > x}F(y). \]

If the CDF is continuous at a given point \(x\), then \(F(x^{-}) = F(x^+) = F(x)\) at that point.

Proposition 3.3 (Property 3.3.3: Continuity from the Right.) A CDF is always continuous from the right; that is, \(F(x) = F(x^+)\) at every point \(x\).

Proof. Let \(y_1 > y_2 > \cdots\) be a sequence of numbers that are decreasing such that \(\lim_{n \rightarrow \infty}y_n = x\). Then the event \(\{X \leq x\}\) is the intersection of all the events \(\{X \leq y_n\}\) for \(n = 1, 2, \ldots\). Hence, by Exercise 1.94,

\[ F(x) = \Pr(X \leq x) = \lim_{n \rightarrow \infty}\Pr(X \leq y_n) = F(x^+). \]

It follows from Proposition 3.3 that at every point \(x\) at which a jump occurs,

\[ F(x^+) = F(x) \; \text{ and } \; F(x^-) < F(x). \]

In Figure 3.6 this property is illustrated by the fact that, at the points of discontinuity \(x = x_1\) and \(x = x_3\), the value of \(F(x_1)\) is taken as \(z_1\) and the value of \(F(x_3)\) is taken as \(z_3\).

Determining Probabilities from the Distribution Function

Example 3.19 (Example 3.3.3: Voltage.) In Example 3.17, suppose that we want to know the probability that \(X\) lies in the interval \([2, 4]\). That is, we want \(\Pr(2 \leq X \leq 4)\). The CDF allows us to compute \(\Pr(X \leq 4)\) and \(\Pr(X \leq 2)\). These are related to the probability that we want as follows: Let \(A = \{2 < X \leq 4\}\), \(B = \{X \leq 2\}\), and \(C = \{X \leq 4\}\). Because \(X\) has a continuous distribution, \(\Pr(A)\) is the same as the probability that we desire. We see that \(A \cup B = C\), and it is clear that \(A\) and \(B\) are disjoint. Hence, \(\Pr(A) + \Pr(B) = \Pr(C)\). It follows that

\[ \Pr(A) = \Pr(C) − \Pr(B) = F(4) − F(2) = \frac{4}{5} - \frac{3}{4} = \frac{1}{20}. \]

The type of reasoning used in Example 3.19 can be extended to find the probability that an arbitrary random variable \(X\) will lie in any specified interval of the real line from the CDF. We shall derive this probability for four different types of intervals.

Theorem 3.6 (Theorem 3.3.2) For all values \(x_1\) and \(x_2\) such that \(x_1 < x_2\),

\[ \Pr(x_1 < X \leq x_2) = F(x_2) − F(x_1). \tag{3.16}\]

Proof. Let \(A = \{x_1 < X \leq x_2\}\), \(B = \{X \leq x_1\}\), and \(C = \{X \leq x_2\}\). As in Example 3.19, \(A\) and \(B\) are disjoint, and their union is \(C\), so

\[ \Pr(x_1 < X \leq x_2) + \Pr(X \leq x_1) = \Pr(X \leq x_2). \]

Subtracting \(\Pr(X \leq x_1)\) from both sides of this equation and applying Equation 3.14 yields Equation 3.16.

For example, if the CDF of \(X\) is as sketched in Figure 3.6, then it follows from Theorems 3.5 and 3.6 that \(\Pr(X > x_2) = 1 − z_1\) and \(\Pr(x_2 < X \leq x_3) = z_3 − z_1\). Also, since \(F(x)\) is constant over the interval \(x_1 \leq x \leq x_2\), then \(\Pr(x_1 < X \leq x_2) = 0\).

It is important to distinguish carefully between the strict inequalities and the weak inequalities that appear in all of the preceding relations and also in the next theorem. If there is a jump in \(F(x)\) at a given value \(x\), then the values of \(\Pr(X \leq x)\) and \(\Pr(X < x)\) will be different.

Theorem 3.7 (Theorem 3.3.3) For each value x,

\[ \Pr(X < x) = F(x^-). \tag{3.17}\]

Proof. Let \(y_1 < y_2 < \cdots\) be an increasing sequence of numbers such that \(\lim_{n \rightarrow \infty} y_n = x\). Then it can be shown that

\[ \{X < x\} = \bigcup_{n=1}^{\infty}\{X \leq y_n\}. \]

Therefore, it follows from Exercise 1.93 that

\[ \begin{align*} \Pr(X < x) &= \lim_{n \rightarrow \infty}\Pr(X \leq y_n) \\ &= \lim_{n \rightarrow \infty}F(y_n) = F(x^-). \end{align*} \]

For example, for the CDF sketched in Figure 3.6, \(\Pr(X < x_3) = z_2\) and \(\Pr(X < x_4) = 1\).

Finally, we shall show that for every value \(x\), \(\Pr(X = x)\) is equal to the amount of the jump that occurs in \(F\) at the point \(x\). If \(F\) is continuous at the point \(x\), that is, if there is no jump in \(F\) at \(x\), then \(\Pr(X = x) = 0\).

Theorem 3.8 (Theorem 3.3.4) For every value \(x\),

\[ \Pr(X = x) = F(x) - F(x^-). \tag{3.18}\]

Proof. It is always true that \(\Pr(X = x) = \Pr(X \leq x) − \Pr(X < x)\). The relation Equation 3.18 follows from the fact that \(\Pr(X \leq x) = F(x)\) at every point and from Theorem 3.7.

In Figure 3.6, for example, \(\Pr(X = x_1) = z_1 − z_0\), \(\Pr(X = x_3) = z_3 − z_2\), and the probability of every other individual value of \(X\) is 0.

The CDF of a Discrete Distribution

From the definition and properties of a CDF \(F(x)\), it follows that if \(a < b\) and if \(\Pr(a < X < b) = 0\), then \(F(x)\) will be constant and horizontal over the interval \(a < x < b\). Furthermore, as we have just seen, at every point \(x\) such that \(\Pr(X = x) > 0\), the CDF will jump by the amount \(\Pr(X = x)\).

Suppose that \(X\) has a discrete distribution with the pmf \(f(x)\). Together, the properties of a CDF imply that \(F(x)\) must have the following form: \(F(x)\) will have a jump of magnitude \(f(x_i)\) at each possible value \(x_i\) of \(X\), and \(F(x)\) will be constant between every pair of successive jumps. The distribution of a discrete random variable \(X\) can be represented equally well by either the pmf or the CDF of \(X\).

The CDF of a Continuous Distribution

Theorem 3.9 (Theorem 3.3.5) Let \(X\) have a continuous distribution, and let \(f(x)\) and \(F(x)\) denote its pdf and CDF, respectively. Then \(F\) is continuous at every \(x\),

\[ F(x) = \int_{-\infty}^x f(t)~dt, \tag{3.19}\]

and

\[ \frac{dF(x)}{dx} = f(x), \tag{3.20}\]

at all \(x\) such that \(f\) is continuous.

Proof. Since the probability of each individual point \(x\) is 0, the CDF \(F(x)\) will have no jumps. Hence, \(F(x)\) will be a continuous function over the entire real line.

By definition, \(F(x) = \Pr(X \leq x)\). Since \(f\) is the pdf of \(X\), we have from the definition of pdf that \(\Pr(X \leq x)\) is the right-hand side of Equation 3.19.

It follows from Equation 3.19 and the relation between integrals and derivatives (the fundamental theorem of calculus) that, for every \(x\) at which \(f\) is continuous, Equation 3.20 holds.

Thus, the CDF of a continuous random variable \(X\) can be obtained from the pdf and vice versa. Equation 3.19 is how we found the CDF in Example 3.17. Notice that the derivative of the \(F\) in Example 3.17 is

\[ F'(x) = \begin{cases} 0 &\text{for }x < 0, \\ \frac{1}{(1+x)^2} &\text{for }x > 0, \end{cases} \]

and \(F'\) does not exist at \(x = 0\). This verifies Equation 3.20 for Example 3.17. Here, we have used the popular shorthand notation \(F'(x)\) for the derivative of \(F\) at the point \(x\).

Example 3.20 (Example 3.3.4: Calculating a pdf from a CDF) Let the CDF of a random variable be

\[ F(x) = \begin{cases} 0 &\text{for }x < 0, \\ x^{2/3} &\text{for }0 \leq x \leq 1, \\ 1 &\text{for }x > 1. \end{cases} \]

This function clearly satisfies the three properties required of every CDF, as given earlier in this section. Furthermore, since this CDF is continuous over the entire real line and is differentiable at every point except \(x = 0\) and \(x = 1\), the distribution of \(X\) is continuous. Therefore, the pdf of \(X\) can be found at every point other than \(x = 0\) and \(x = 1\) by the relation Equation 3.20. The value of \(f(x)\) at the points \(x = 0\) and \(x = 1\) can be assigned arbitrarily. When the derivative \(F(x)\) is calculated, it is found that \(f(x)\) is as given by Equation 3.13 in Example 3.15. Conversely, if the pdf of \(X\) is given by Equation 3.13, then by using Equation 3.19 it is found that \(F(x)\) is as given in this example.

The Quantile Function

Example 3.21 (Example 3.3.5: Fair Bets) Suppose that \(X\) is the amount of rain that will fall tomorrow, and \(X\) has CDF \(F\). Suppose that we want to place an even-money bet on \(X\) as follows: If \(X \leq x_0\), we win one dollar and if \(X > x_0\) we lose one dollar. In order to make this bet fair, we need \(\Pr(X \leq x_0) = \Pr(X > x_0) = 1/2\). We could search through all of the real numbers \(x\) trying to find one such that \(F(x) = 1/2\), and then we would let \(x_0\) equal the value we found. If \(F\) is a one-to-one function, then \(F\) has an inverse \(F^{-1}\) and \(x_0 = F^{-1}(1/2)\).

The value \(x_0\) that we seek in Example 3.21 is called the 0.5 quantile of \(X\) or the 50th percentile of \(X\) because 50% of the distribution of \(X\) is at or below \(x_0\).

Definition 3.12 (Definition 3.3.2: Quantiles/Percentiles) Let \(X\) be a random variable with CDF \(F\). For each \(p\) strictly between 0 and 1, define \(F^{-1}(p)\) to be the smallest value \(x\) such that \(F(x) \geq p\). Then \(F^{-1}(p)\) is called the \(p\) quantile of \(X\) or the \(100p\) percentile of \(X\). The function \(F^{-1}\) defined here on the open interval \((0, 1)\) is called the quantile function of \(X\).

Example 3.22 (Example 3.3.6: Standardized Test Scores) Many universities in the United States rely on standardized test scores as part of their admissions process. Thousands of people take these tests each time that they are offered. Each examinee’s score is compared to the collection of scores of all examinees to see where it fits in the overall ranking. For example, if 83% of all test scores are at or below your score, your test report will say that you scored at the 83rd percentile.

The notation \(F^{-1}(p)\) in Definition 3.12 deserves some justification. Suppose first that the CDF \(F\) of \(X\) is continuous and one-to-one over the whole set of possible values of \(X\). Then the inverse \(F^{-1}\) of \(F\) exists, and for each \(0 < p < 1\), there is one and only one \(x\) such that \(F(x) = p\). That \(x\) is \(F^{−1}(p)\). Definition 3.12 extends the concept of inverse function to nondecreasing functions (such as CDFs) that may be neither one-to-one nor continuous.

Quantiles of Continuous Distributions: When the CDF of a random variable \(X\) is continuous and one-to-one over the whole set of possible values of \(X\), the inverse \(F^{-1}\) of \(F\) exists and equals the quantile function of \(X\).

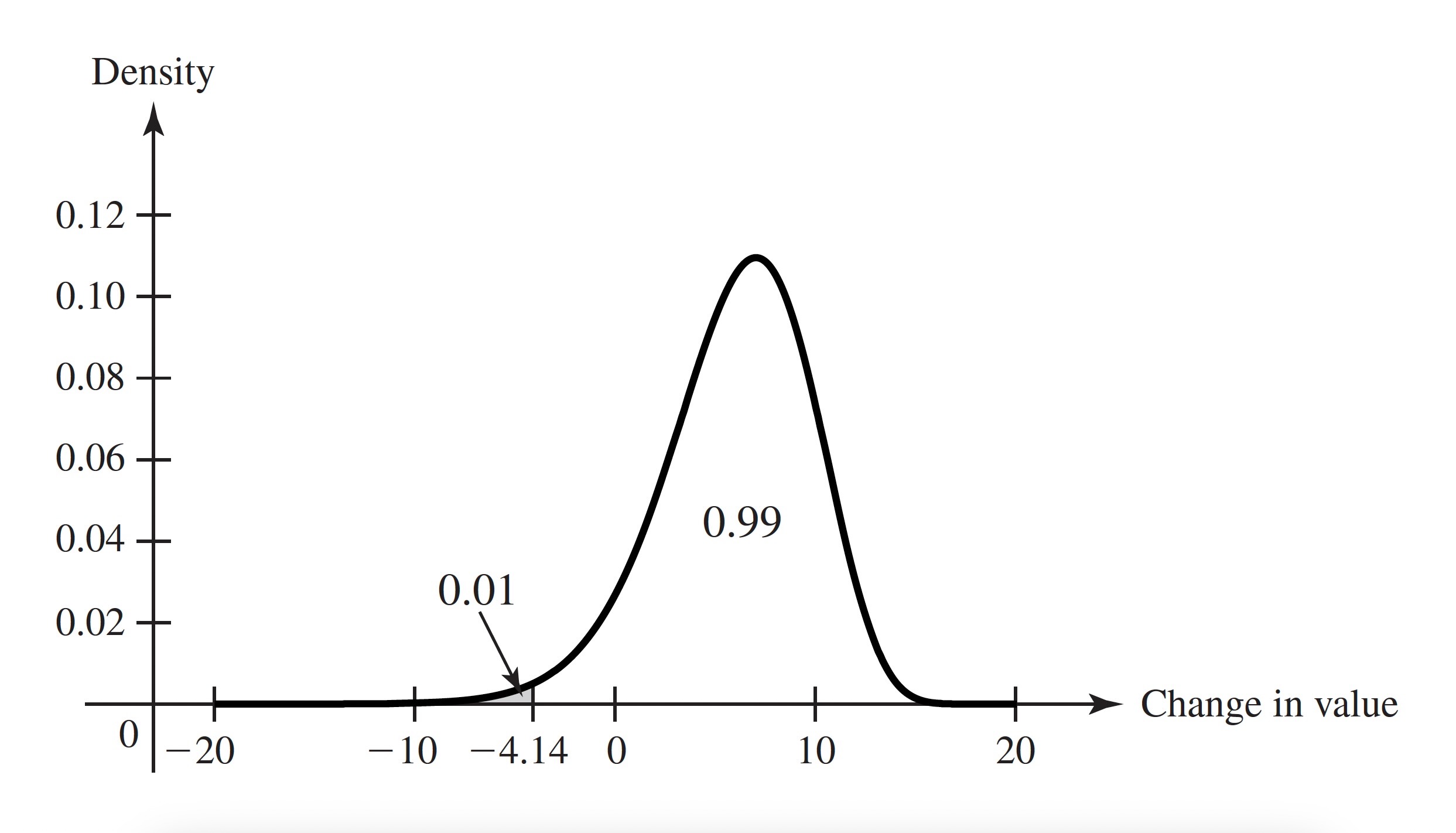

Example 3.23 (Example 3.3.7: Value at Risk) The manager of an investment portfolio is interested in how much money the portfolio might lose over a fixed time horizon. Let \(X\) be the change in value of the given portfolio over a period of one month. Suppose that \(X\) has the pdf in Figure 3.7. The manager computes a quantity known in the world of risk management as Value at Risk (denoted by VaR). To be specific, let \(Y = −X\) stand for the loss incurred by the portfolio over the one month. The manager wants to have a level of confidence about how large \(Y\) might be. In this example, the manager specifies a probability level, such as 0.99 and then finds \(y_0\), the 0.99 quantile of \(Y\). The manager is now 99% sure that \(Y \leq y_0\), and \(y_0\) is called the VaR. If \(X\) has a continuous distribution, then it is easy to see that \(y_0\) is closely related to the 0.01 quantile of the distribution of \(X\). The 0.01 quantile \(x_0\) has the property that \(\Pr(X < x_0) = 0.01\). But \(\Pr(X < x_0) = \Pr(Y > −x_0) = 1 − \Pr(Y \leq −x_0)\). Hence, \(−x_0\) is a 0.99 quantile of \(Y\). For the pdf in Figure 3.7, we see that \(x_0 = −4.14\), as the shaded region indicates. Then \(y_0 = 4.14\) is VaR for one month at probability level 0.99.

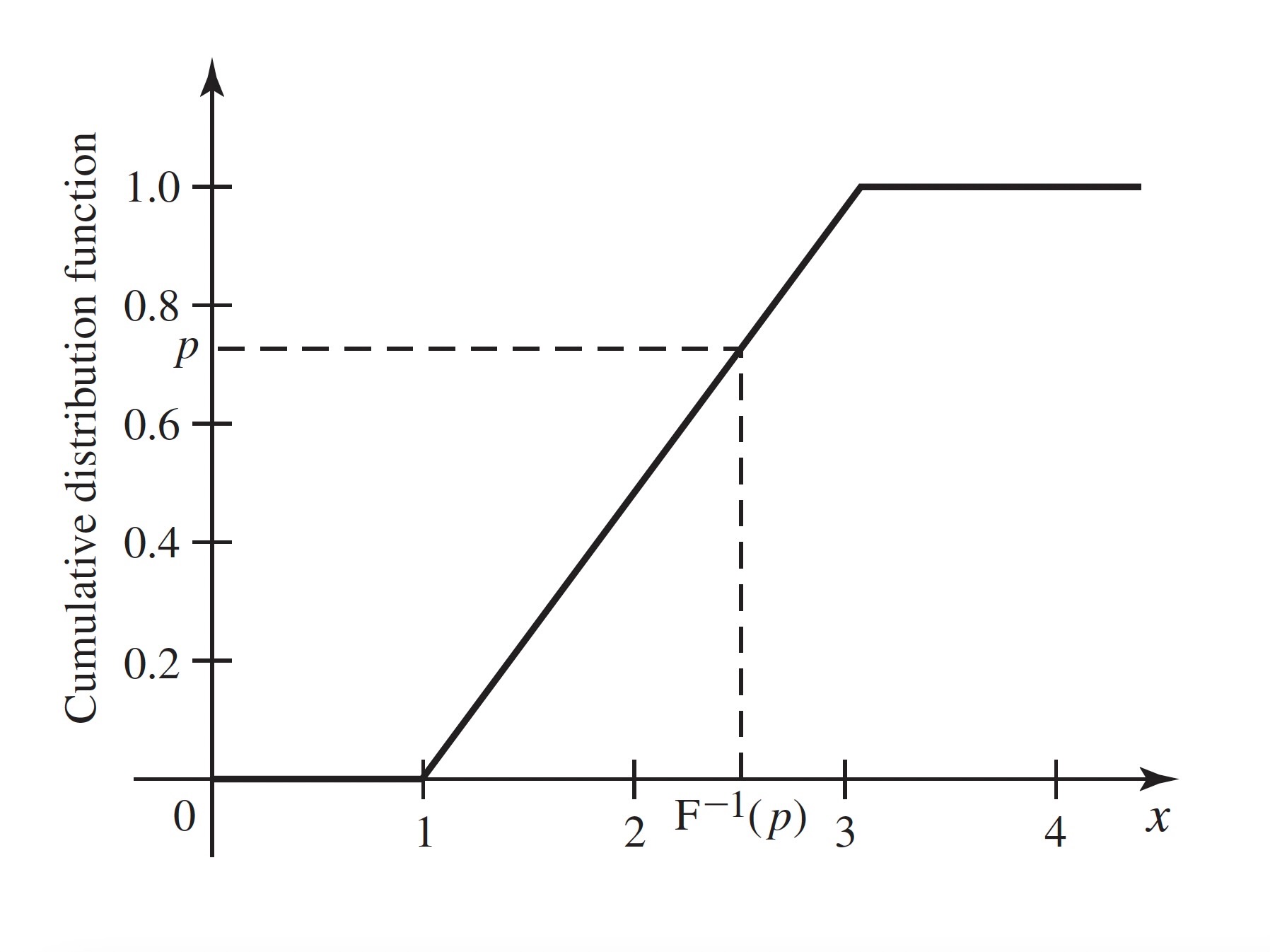

Example 3.24 (Example 3.3.8: Uniform Distribution on an Interval) Let \(X\) have the uniform distribution on the interval \([a, b]\). The CDF of \(X\) is

\[ F(x) = \Pr(X \leq x) = \begin{cases} 0 &\text{if }x \leq a, \\ \int_{a}^{x}\frac{1}{b-a}du &\text{if }a < x \leq b, \\ 1 &\text{if }x > b. \end{cases} \]

The integral above equals \((x−a)/(b−a)\). So, \(F(x) = (x−a)/(b−a)\) for all \(a < x < b\), which is a strictly increasing function over the entire interval of possible values of \(X\). The inverse of this function is the quantile function of \(X\), which we obtain by setting \(F(x)\) equal to \(p\) and solving for \(x\):

\[ \begin{align*} \frac{x - a}{b - a} &= p, \\ x - a &= p(b-a), \\ x &= a + p(b - a) = pb + (1-p)a. \end{align*} \]

Figure 3.8 illustrates how the calculation of a quantile relates to the CDF.

The quantile function of \(X\) is \(F^{−1}(p) = pb + (1 − p)a\) for \(0 < p < 1\). In particular, \(F^{-1}(1/2) = (b + a)/2\).

Note: Quantiles, Like CDFs, Depend on the Distribution Only: Any two random variables with the same distribution have the same quantile function. When we refer to a quantile of \(X\), we mean a quantile of the distribution of \(X\).

Quantiles of Discrete Distributions: It is convenient to be able to calculate quantiles for discrete distributions as well. The quantile function of Definition 3.12 exists for all distributions whether discrete, continuous, or otherwise. For example, in Figure 3.6, let \(z_0 \leq p \leq z_1\). Then the smallest \(x\) such that \(F(x) \geq p\) is \(x_1\). For every value of \(x < x_1\), we have \(F(x) < z_0 \leq p\) and \(F(x_1) = z_1\). Notice that \(F(x) = z_1\) for all \(x\) between \(x_1\) and \(x_2\), but since \(x_1\) is the smallest of all those numbers, \(x_1\) is the \(p\) quantile. Because distribution functions are continuous from the right, the smallest \(x\) such that \(F(x) \geq p\) exists for all \(0 < p < 1\). For \(p = 1\), there is no guarantee that such an \(x\) will exist. For example, in Figure 3.6, \(F(x_4) = 1\), but in Example 3.17, \(F(x) < 1\) for all \(x\). For \(p = 0\), there is never a smallest \(x\) such that \(F(x) = 0\) because \(\lim_{x \rightarrow −\infty}F(x) = 0\). That is, if \(F(x_0) = 0\), then \(F(x) = 0\) for all \(x < x_0\). For these reasons, we never talk about the 0 or 1 quantiles.

| \(p\) | \(F^{-1}(p)\) |

|---|---|

| \((0, 0.1681]\) | 0 |

| \((0.1681, 0.5283]\) | 1 |

| \((0.5283, 0.8370]\) | 2 |

| \((0.8370, 0.9693]\) | 3 |

| \((0.9693, 0.9977]\) | 4 |

| \((0.9977, 1)\) | 5 |

Example 3.25 (Example 3.3.9: Quantiles of a Binomial Distribution.) Let \(X\) have the binomial distribution with parameters 5 and 0.3. The binomial table in the back of the book has the pmf \(f\) of \(X\), which we reproduce here together with the CDF \(F\):

| \(x\) | 0 | 1 | 2 | 3 | 4 | 5 |

|---|---|---|---|---|---|---|

| \(f(x)\) | 0.1681 | 0.3602 | 0.3087 | 0.1323 | 0.0284 | 0.0024 |

| \(F(x)\) | 0.1681 | 0.5283 | 0.8370 | 0.9693 | 0.9977 | 1 |

(A little rounding error occurred in the pmf) So, for example, the 0.5 quantile of this distribution is 1, which is also the 0.25 quantile and the 0.20 quantile. The entire quantile function is in Table 3.1. So, the 90th percentile is 3, which is also the 95th percentile, etc.

Certain quantiles have special names.

Definition 3.13 (Definition 3.3.3: Median/Quartiles.) The \(1/2\) quantile or the 50th percentile of a distribution is called its median. The \(1/4\) quantile or 25th percentile is the lower quartile. The \(3/4\) quantile or 75th percentile is called the upper quartile.

Note: The Median Is Special. The median of a distribution is one of several special features that people like to use when sumarizing the distribution of a random variable. We shall discuss summaries of distributions in more detail in Chapter 4. Because the median is such a popular summary, we need to note that there are several different but similar “definitions” of median. Recall that the \(1/2\) quantile is the smallest number \(x\) such that \(F(x) \geq 1/2\). For some distributions, usually discrete distributions, there will be an interval of numbers \([x_1, x_2)\) such that for all \(x \in [x_1, x_2)\), \(F(x) = 1/2\). In such cases, it is common to refer to all such \(x\) (including \(x_2\)) as medians of the distribution. (See ?def-4-5-1.) Another popular convention is to call \((x_1 + x_2)/2\) the median. This last is probably the most common convention. The readers should be aware that, whenever they encounter a median, it might be any one of the things that we just discussed. Fortunately, they all mean nearly the same thing, namely that the number divides the distribution in half as closely as is possible.

Example 3.26 (Example 3.3.10: Uniform Distribution on Integers.) Let \(X\) have the uniform distribution on the integers 1, 2, 3, 4. (See Definition 3.6) The CDF of \(X\) is

\[ F(x) = \begin{cases} 0 &\text{if }x < 1, \\ 1/4 &\text{if }1 \leq x < 2, \\ 1/2 &\text{if }2 \leq x < 3, \\ 3/4 &\text{if }3 \leq x < 4, \\ 1 &\text{if }x \geq 4. \end{cases} \]

The \(1/2\) quantile is 2, but every number in the interval \([2, 3]\) might be called a median. The most popular choice would be 2.5.

One advantage to describing a distribution by the quantile function rather than by the CDF is that quantile functions are easier to display in tabular form for multiple distributions. The reason is that the domain of the quantile function is always the interval \((0, 1)\) no matter what the possible values of \(X\) are. Quantiles are also useful for summarizing distributions in terms of where the probability is. For example, if one wishes to say where the middle half of a distribution is, one can say that it lies between the 0.25 quantile and the 0.75 quantile. In ?sec-8-5, we shall see how to use quantiles to help provide estimates of unknown quantities after observing data.

In Exercise 3.43, you can show how to recover the CDF from the quantile function. Hence, the quantile function is an alternative way to characterize a distribution.

Summary

The CDF \(F\) of a random variable \(X\) is \(F(x) = \Pr(X \leq x)\) for all real \(x\). This function is continuous from the right. If we let \(F(x^-)\) equal the limit of \(F(y)\) as \(y\) approaches \(x\) from below, then \(F(x) − F(x^-) = Pr(X = x)\). A continuous distribution has a continuous CDF and \(F'(x) = f(x)\), the pdf of the distribution, for all \(x\) at which \(F\) is differentiable. A discrete distribution has a CDF that is constant between the possible values and jumps by \(f(x)\) at each possible value \(x\). The quantile function \(F^{-1}(p)\) is equal to the smallest \(x\) such that \(F(x) \geq p\) for \(0 < p < 1\).

Exercises

Exercise 3.25 (Exercise 3.3.1) Suppose that a random variable \(X\) has the Bernoulli distribution with parameter \(p = 0.7\). (See Definition 3.5) Sketch the CDF of \(X\).

Exercise 3.26 (Exercise 3.3.2) Suppose that a random variable \(X\) can take only the values \(−2\), \(0\), \(1\), and \(4\), and that the probabilities of these values are as follows: \(\Pr(X = −2) = 0.4\), \(\Pr(X = 0) = 0.1\), \(Pr(X = 1) = 0.3\), and \(\Pr(X = 4) = 0.2\). Sketch the CDF of \(X\).

Exercise 3.27 (Exercise 3.3.3) Suppose that a coin is tossed repeatedly until a head is obtained for the first time, and let \(X\) denote the number of tosses that are required. Sketch the CDF of \(X\).

Exercise 3.28 (Exercise 3.3.4) Suppose that the CDF \(F\) of a random variable \(X\) is as sketched in Figure 3.9. Find each of the following probabilities:

- \(\Pr(X = −1)\)

- \(\Pr(X < 0)\)

- \(\Pr(X \leq 0)\)

- \(\Pr(X = 1)\)

- \(\Pr(0 < X \leq 3)\)

- \(\Pr(0 < X < 3)\)

- \(\Pr(0 \leq X \leq 3)\)

- \(\Pr(1 < X \leq 2)\)

- \(\Pr(1 \leq X \leq 2)\)

- \(\Pr(X > 5)\)

- \(Pr(X \geq 5)\)

- \(\Pr(3 \leq X \leq 4)\)

Exercise 3.29 (Exercise 3.3.5) Suppose that the CDF of a random variable \(X\) is as follows:

\[ F(x) = \begin{cases} 0 &\text{for }x \leq 0, \\ \frac{1}{9}x^2 &\text{for }0 < x \leq 3, \\ 1 &\text{for }x > 3. \end{cases} \]

Find and sketch the pdf of \(X\).

Exercise 3.30 (Exercise 3.3.6) Suppose that the CDF of a random variable \(X\) is as follows:

\[ F(x) = \begin{cases} e^{x - 3} &\text{for }x \leq 3, \\ 1 &\text{for }x > 3. \end{cases} \]

Find and sketch the pdf of \(X\).

Exercise 3.31 (Exercise 3.3.7) Suppose, as in Exercise 3.18, that a random variable \(X\) has the uniform distribution on the interval \([−2, 8]\). Find and sketch the CDF of \(X\).

Exercise 3.32 (Exercise 3.3.8) Suppose that a point in the \(xy\)-plane is chosen at random from the interior of a circle for which the equation is \(x^2 + y^2 = 1\); and suppose that the probability that the point will belong to each region inside the circle is proportional to the area of that region. Let \(Z\) denote a random variable representing the distance from the center of the circle to the point. Find and sketch the CDF of \(Z\).

Exercise 3.33 (Exercise 3.3.9) Suppose that \(X\) has the uniform distribution on the interval \([0, 5]\) and that the random variable \(Y\) is defined by \(Y = 0\) if \(X \leq 1\), \(Y = 5\) if \(X \geq 3\), and \(Y = X\) otherwise. Sketch the CDF of \(Y\).

Exercise 3.34 (Exercise 3.3.10) For the CDF in Example 3.20, find the quantile function.

Exercise 3.35 (Exercise 3.3.11) For the CDF in Exercise 3.29, find the quantile function.

Exercise 3.36 (Exercise 3.3.12) For the CDF in Exercise 3.30, find the quantile function.

Exercise 3.37 (Exercise 3.3.13) Suppose that a broker believes that the change in value \(X\) of a particular investment over the next two months has the uniform distribution on the interval \([−12,24]\). Find the value at risk VaR for two months at probability level 0.95.

Exercise 3.38 (Exercise 3.3.14) Find the quartiles and the median of the binomial distribution with parameters \(n = 10\) and \(p = 0.2\).

Exercise 3.39 (Exercise 3.3.15) Suppose that \(X\) has the pdf

\[ f(x) = \begin{cases} 2x &\text{if }0 < x < 1, \\ 0 &\text{otherwise.} \end{cases} \]

Find and sketch the CDF of \(X\).

Exercise 3.40 (Exercise 3.3.16) Find the quantile function for the distribution in Example 3.17.

Exercise 3.41 (Exercise 3.3.17) Prove that the quantile function F^{-1} of a general random variable \(X\) has the following three properties that are analogous to properties of the CDF:

- \(F^{-1}\) is a nondecreasing function of \(p\) for \(0 < p < 1\).

- Let \(x_0 = \lim_{p \rightarrow 0,~p > 0}F^{-1}(p)\) and \(x_1 = \lim_{p \rightarrow 1,~p < 1}F^{-1}(p)\). Then \(x_0\) equals the greatest lower bound on the set of numbers \(c\) such that \(\Pr(X \leq c) > 0\), and \(x_1\) equals the least upper bound on the set of numbers \(d\) such that \(\Pr(X \geq d) > 0\).

- \(F^{−1}\) is continuous from the left; that is \(F^{-1}(p) = F^{-1}(p^-)\) for all \(0 < p < 1\).

Exercise 3.42 (Exercise 3.3.18) Let \(X\) be a random variable with quantile function \(F^{-1}\). Assume the following three conditions: (i) \(F^{-1}(p) = c\) for all \(p\) in the interval \((p_0, p_1)\), (ii) either \(p_0 = 0\) or \(F^{-1}(p_0) < c\), and (iii) either \(p_1 = 1\) or \(F^{-1}(p) > c\) for \(p > p_1\). Prove that \(\Pr(X = c) = p_1 − p_0\).

Exercise 3.43 (Exercise 3.3.19) Let \(X\) be a random variable with CDF \(F\) and quantile function \(F^{-1}\). Let \(x_0\) and \(x_1\) be as defined in Exercise 3.41. (Note that $x_0 = -and/or \(x_1 = \infty\) are possible.) Prove that for all \(x\) in the open interval \((x_0, x_1)\), \(F(x)\) is the largest \(p\) such that \(F^{−1}(p) \leq x\).

Exercise 3.44 (Exercise 3.3.20) In Exercise 3.24, draw a sketch of the CDF \(F\) of \(X\) and find \(F(10)\).

3.4 Bivariate Distributions

We generalize the concept of distribution of a random variable to the joint distribution of two random variables. In doing so, we introduce the joint pmf for two discrete random variables, the joint pdf for two continuous random variables, and the joint CDF for any two random variables. We also introduce a joint hybrid of pmf and pdf for the case of one discrete random variable and one continuous random variable.

It is a straightforward consequence of the definition of the joint distribution of \(X\) and \(Y\) that this joint distribution is itself a probability measure on the set of ordered pairs of real numbers. The set \(\{ (X, Y) \in C\}\) will be an event for every set \(C\) of pairs of real numbers that most readers will be able to imagine.

In this section and the next two sections, we shall discuss convenient ways to characterize and do computations with bivariate distributions. In Section 3.7, these considerations will be extended to the joint distribution of an arbitrary finite number of random variables.

Discrete Joint Distributions

The two random variables in Example 3.28 have a discrete joint distribution.

When we define continuous joint distribution shortly, we shall see that the obvious analog of Theorem 3.10 is not true.

The following result is easy to prove because there are at most countably many pairs \((x, y)\) that must account for all of the probability a discrete joint distribution.

Continuous Joint Distributions

If one looks carefully at Equation 3.21, one will notice the similarity to Equation 3.6 and Equation 3.5. We formalize this connection as follows.

It is clear from Definition 3.18 that the joint pdf of two random variables characterizes their joint distribution. The following result is also straightforward.

Any function that satisfies the two displayed formulas in Theorem 3.12 is the joint pdf for some probability distribution.

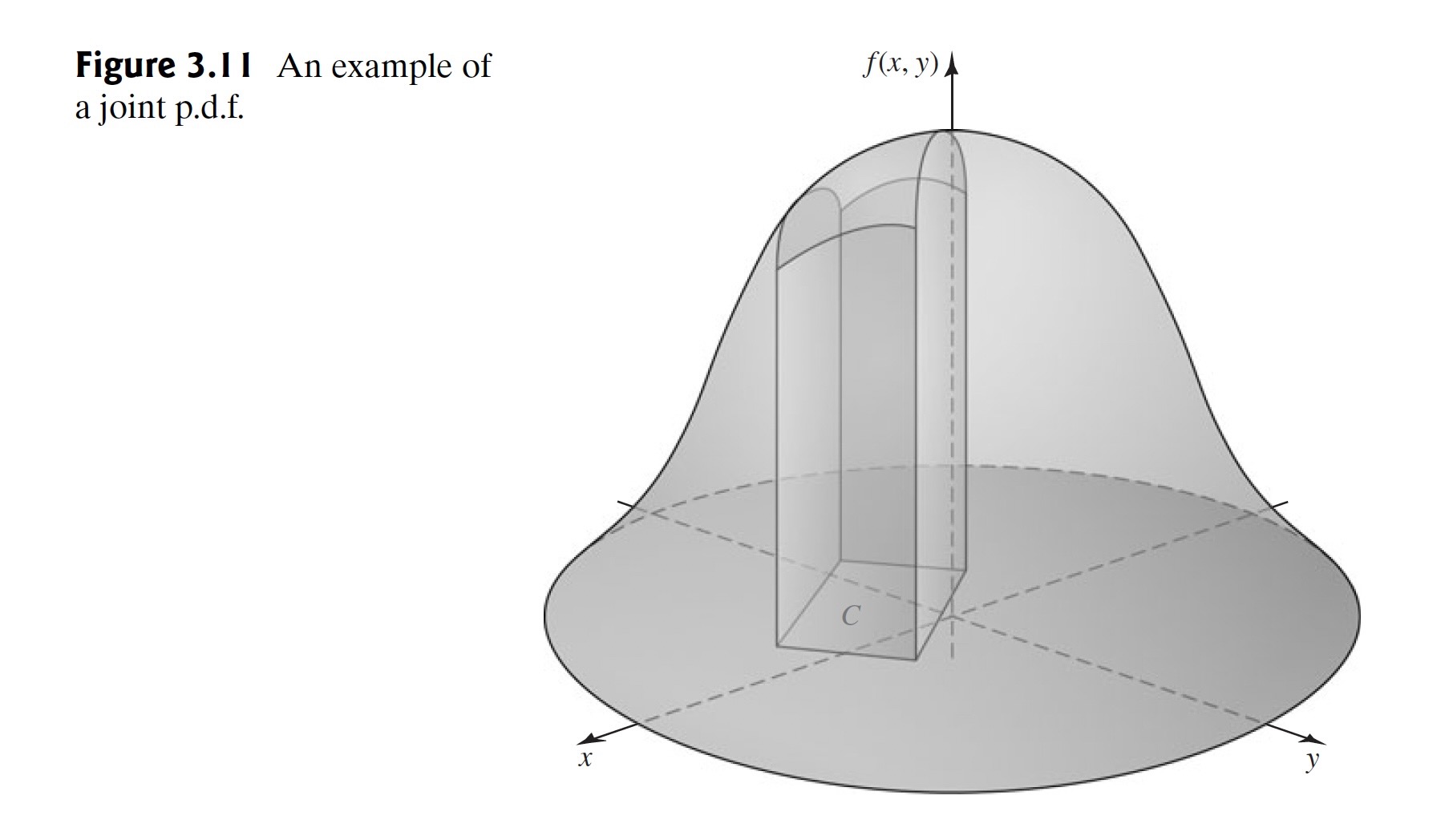

An example of the graph of a joint pdf is presented in Figure 3.11.

The total volume beneath the surface \(z = f(x, y)\) and above the \(xy\)-plane must be 1. The probability that the pair \((X, Y)\) will belong to the rectangle \(C\) is equal to the volume of the solid figure with base \(A\) shown in Figure 3.11. The top of this solid figure is formed by the surface \(z = f(x, y)\).

In Section 3.5, we will show that if \(X\) and \(Y\) have a continuous joint distribution, then \(X\) and \(Y\) each have a continuous distribution when considered separately. This seems reasonable intutively. However, the converse of this statement is not true, and the following result helps to show why.

Mixed Bivariate Distributions

Prior to Example 3.36, we have discussed bivariate distributions that were either discrete or continuous. Occasionally, one must consider a mixed bivariate distribution in which one of the random variables is discrete and the other is continuous. We shall use a function \(f(x, y)\) to characterize such a joint distribution in much the same way that we use a joint pmf to characterize a discrete joint distribution or a joint pdf to characterize a continuous joint distribution.

Clearly, Definition 3.19 can be modified in an obvious way if \(Y\) is discrete and \(X\) is continuous. Every joint pmf/pdf must satisfy two conditions. If \(X\) is the discrete random variable with possible values \(x_1, x_2, \ldots\) and \(Y\) is the continuous random variable, then \(f(x, y) \geq 0\) for all \(x\), \(y\) and

\[ \int_{-\infty}^{\infty}\sum_{i=1}^{\infty} f(x_i, y) \, dy = 1. \tag{3.24}\]

Because \(f\) is nonnegative, the sum and integral in Eqs. 3.23 and 3.24 can be done in whichever order is more convenient.

Note: Probabilities of More General Sets. For a general set \(C\) of pairs of real numbers, we can compute \(\Pr((X, Y) \in C)\) using the joint pmf/pdf of \(X\) and \(Y\). For each \(x\), let \(C_x = \{y \mid (x, y) \in C\}\). Then

\[ \Pr((X, Y) \in C) = \sum_{\text{All }x}\int_{C_x}f(x, y)\, dy, \]

if all of the integrals exist. Alternatively, for each \(y\), define \(C^y = \{x \mid (x, y) \in C\}\), and then

\[ \Pr((X, Y) \in C) = \int_{-\infty}^{\infty}\left[ \sum_{x \in C^y}f(x, y) \right]dy, \]

if the integral exists.

A more complicated type of joint distribution can also arise in a practical problem.

Bivariate Cumulative Distribution Functions

The first calculation in Example 3.38, namely, \(\Pr(X \leq 0 \text{ and } Y \leq 1/2)\), is a generalization of the calculation of a CDF to a bivariate distribution. We formalize the generalization as follows.

It is clear from Definition 3.20 that \(F(x, y)\) is monotone increasing in \(x\) for each fixed \(y\) and is monotone increasing in \(y\) for each fixed \(x\).

If the joint CDF of two arbitrary random variables \(X\) and \(Y\) is \(F\), then the probability that the pair \((X, Y)\) will lie in a specified rectangle in the \(xy\)-plane can be found from \(F\) as follows: For given numbers \(a < b\) and \(c < d\),

\[ \begin{align*} \Pr&(a < X \leq b \text{ and }c < Y \leq d) \\ &= \Pr(a < X \leq b \text{ and }Y \leq d) - \Pr(a < X \leq b \text{ and }Y \leq c) \\ &= \left[\Pr(X \leq b \text{ and } Y \leq d) - \Pr(X \leq a \text{ and }Y \leq d)\right] \\ &\phantom{\Pr} - \left[\Pr(X \leq b \text{ and }Y \leq c) - \Pr(X \leq a \text{ and }Y \leq c)\right] \\ &= F(b, d) - F(a, d) - F(b, c) + F(a, c). \end{align*} \]

Hence, the probability of the rectangle \(C\) sketched in Figure 3.14 is given by the combination of values of \(F\) just derived. It should be noted that two sides of the rectangle are included in the set \(C\) and the other two sides are excluded. Thus, if there are points or line segments on the boundary of \(C\) that have positive probability, it is important to distinguish between the weak inequalities and the strict inequalities in ?eq-3-4-6.

Other relationships involving the univariate distribution of \(X\), the univariate distribution of \(Y\), and their joint bivariate distribution will be presented in the next section.

Finally, if \(X\) and \(Y\) have a continuous joint distribution with joint pdf \(f\), then the joint CDF at \((x, y)\) is

\[ F(x, y) = \int_{-\infty}^{y}\int_{-\infty}^{x}f(r, s)\, dr \, ds. \]

Here, the symbols \(r\) and \(s\) are used simply as dummy variables of integration. The joint pdf can be derived from the joint CDF by using the relations

\[ f(x, y) = \frac{\partial^2 F(x, y)}{\partial x \partial y} = \frac{\partial^2F(x,y)}{\partial y \partial x} \]

at every point \((x, y)\) at which these second-order derivatives exist.

Summary