2 Conditional Probability

2.1 The Definition of Conditional Probability

A major use of probability in statistical inference is the updating of probabilities when certain events are observed. The updated probability of event \(A\) after we learn that event \(B\) has occurred is the conditional probability of \(A\) given \(B\).

Example 2.1 (Example 2.1.1: Lottery Ticket) Consider a state lottery game in which six numbers are drawn without replacement from a bin containing the numbers 1–30. Each player tries to match the set of six numbers that will be drawn without regard to the order in which the numbers are drawn. Suppose that you hold a ticket in such a lottery with the numbers 1, 14, 15, 20, 23, and 27. You turn on your television to watch the drawing but all you see is one number, 15, being drawn when the power suddenly goes off in your house. You don’t even know whether 15 was the first, last, or some in-between draw. However, now that you know that 15 appears in the winning draw, the probability that your ticket is a winner must be higher than it was before you saw the draw. How do you calculate the revised probability?

Example 2.1 is typical of the following situation. An experiment is performed for which the sample space \(S\) is given (or can be constructed easily) and the probabilities are available for all of the events of interest. We then learn that some event \(B\) has occurred, and we want to know how the probability of another event \(A\) changes after we learn that \(B\) has occurred. In Example 2.1, the event that we have learned is \(B = \{\text{one of the numbers drawn is }15\}\). We are certainly interested in the probability of

\[ A = \{\text{the numbers 1, 14, 15, 20, 23, and 27 are drawn}\}, \]

and possibly other events.

If we know that the event \(B\) has occurred, then we know that the outcome of the experiment is one of those included in \(B\). Hence, to evaluate the probability that \(A\) will occur, we must consider the set of those outcomes in \(B\) that also result in the occurrence of \(A\). As sketched in Figure 2.1, this set is precisely the set \(A \cap B\). It is therefore natural to calculate the revised probability of \(A\) according to the following definition.

Definition 2.1 (Definition 2.1.1: Conditional Probability) Suppose that we learn that an event \(B\) has occurred and that we wish to compute the probability of another event \(A\) taking into account that we know that \(B\) has occurred. The new probability of \(A\) is called the conditional probability of the event \(A\) given that the event \(B\) has occurred and is denoted \(\Pr(A \mid B)\). If \(\Pr(B) > 0\), we compute this probability as

\[ \Pr(A \mid B) = \frac{\Pr(A \cap B)}{\Pr(B)}. \tag{2.1}\]

The conditional probability \(\Pr(A \mid B)\) is not defined if \(\Pr(B) = 0\).

For convenience, the notation \(\Pr(A \mid B)\) in Definition 2.1 is read simply as the conditional probability of \(A\) given \(B\). Equation 2.1 indicates that \(\Pr(A \mid B)\) is computed as the proportion of the total probability \(\Pr(B)\) that is represented by \(\Pr(A \cap B)\), intuitively the proportion of \(B\) that is also part of \(A\).

Example 2.2 (Example 2.1.2: Lottery Ticket.) In Example 2.1, you learned that the event

\[ B = \{\text{one of the numbers drawn is }15\} \]

has occurred. You want to calculate the probability of the event \(A\) that your ticket is a winner. Both events \(A\) and \(B\) are expressible in the sample space that consists of the \(\binom{30}{6} = 30!/(6!24!)\) possible combinations of 30 items taken six at a time, namely, the unordered draws of six numbers from 1–30. The event \(B\) consists of combinations that include 15. Since there are 29 remaining numbers from which to choose the other five in the winning draw, there are \(\binom{29}{5}\) outcomes in \(B\). It follows that

\[ \Pr(B) = \frac{\binom{29}{5}}{\binom{30}{6}} = \frac{29!24!6!}{30!5!24!} = 0.2. \]

The event \(A\) that your ticket is a winner consists of a single outcome that is also in \(B\), so \(A \cap B = A\), and

\[ \Pr(A \cap B) = \Pr(A) = \frac{1}{\binom{30}{6}} = \frac{6!24!}{30!} = 1.68 \times 10^{-6}. \]

It follows that the conditional probability of \(A\) given \(B\) is

\[ \Pr(A \mid B) = \frac{\frac{6!24!}{30!}}{0.2} = 8.4 \times 10^{-6}. \]

This is five times as large as \(\Pr(A)\) before you learned that \(B\) had occurred.
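The arithmetic in Example 2.2 can be checked exactly with Python's `fractions` module and `math.comb`. This is a minimal sketch that simply counts equally likely unordered draws; the variable names are our own, not the text's.

```python
from fractions import Fraction
from math import comb

# Sample space: all unordered draws of 6 numbers from 1..30, equally likely.
n_outcomes = comb(30, 6)

# B = {15 is among the six numbers}: choose the other 5 from the remaining 29.
pr_B = Fraction(comb(29, 5), n_outcomes)

# A = {the ticket 1, 14, 15, 20, 23, 27 is drawn}: a single outcome, and A is a subset of B.
pr_A = Fraction(1, n_outcomes)
pr_A_and_B = pr_A

# Definition 2.1: Pr(A | B) = Pr(A ∩ B) / Pr(B)
pr_A_given_B = pr_A_and_B / pr_B

print(pr_B)                 # 1/5
print(float(pr_A))          # about 1.68e-06
print(float(pr_A_given_B))  # about 8.42e-06
print(pr_A_given_B / pr_A)  # 5
```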

Definition 2.1 for the conditional probability \(\Pr(A \mid B)\) is worded in terms of the subjective interpretation of probability in Section 1.2. Equation 2.1 also has a simple meaning in terms of the frequency interpretation of probability. According to the frequency interpretation, if an experimental process is repeated a large number of times, then the proportion of repetitions in which the event \(B\) will occur is approximately \(\Pr(B)\) and the proportion of repetitions in which both the event \(A\) and the event \(B\) will occur is approximately \(\Pr(A \cap B)\). Therefore, among those repetitions in which the event \(B\) occurs, the proportion of repetitions in which the event \(A\) will also occur is approximately equal to

\[ \Pr(A \mid B) = \frac{\Pr(A \cap B)}{\Pr(B)} \]
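A short simulation makes the frequency interpretation concrete. The sketch below assumes two fair dice, with \(B\) the event that the sum is odd and \(A\) the event that the sum is less than 8 (the same events used in Example 2.3); the function name `conditional_relative_frequency` and the seed are illustrative choices. Among the repetitions in which \(B\) occurs, it reports the fraction in which \(A\) also occurs.

```python
import random


def conditional_relative_frequency(n_trials, seed=0):
    """Estimate Pr(A | B) as the proportion of trials with B in which A also occurs.

    Illustrative events (an assumption for this sketch): two fair dice are
    rolled, B = {the sum T is odd}, A = {T < 8}.
    """
    rng = random.Random(seed)
    count_B = 0        # repetitions in which B occurs
    count_A_and_B = 0  # repetitions in which both A and B occur
    for _ in range(n_trials):
        t = rng.randint(1, 6) + rng.randint(1, 6)
        if t % 2 == 1:
            count_B += 1
            if t < 8:
                count_A_and_B += 1
    # (count_A_and_B / n) / (count_B / n) simplifies to count_A_and_B / count_B
    return count_A_and_B / count_B


print(conditional_relative_frequency(100_000))  # close to 2/3
```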

Example 2.3 (Example 2.1.3: Rolling Dice) Suppose that two dice were rolled and it was observed that the sum \(T\) of the two numbers was odd. We shall determine the probability that \(T\) was less than 8.

If we let \(A\) be the event that \(T < 8\) and let \(B\) be the event that \(T\) is odd, then \(A \cap B\) is the event that \(T\) is 3, 5, or 7. From the probabilities for two dice given at the end of Section 1.6, we can evaluate \(\Pr(A \cap B)\) and \(\Pr(B)\) as follows:

\[ \begin{align*} \Pr(A \cap B) &= \frac{2}{36} + \frac{4}{36} + \frac{6}{36} = \frac{12}{36} = \frac{1}{3}, \\ \Pr(B) &= \frac{2}{36} + \frac{4}{36} + \frac{6}{36} + \frac{4}{36} + \frac{2}{36} = \frac{18}{36} = \frac{1}{2}. \end{align*} \]

Hence,

\[ \Pr(A \mid B) = \frac{\Pr(A \cap B)}{\Pr(B)} = \frac{2}{3}. \]
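Because the 36 outcomes of two dice are equally likely, Definition 2.1 can also be applied by direct enumeration. In this sketch the helpers `pr` and `cond_pr` are hypothetical names, and events are represented as predicates on an outcome `(die1, die2)`.

```python
from fractions import Fraction
from itertools import product

# All 36 equally likely ordered outcomes of rolling two fair dice.
outcomes = list(product(range(1, 7), repeat=2))


def pr(event):
    """Probability of an event given as a predicate on (die1, die2)."""
    return Fraction(sum(1 for o in outcomes if event(o)), len(outcomes))


def cond_pr(event_a, event_b):
    """Pr(A | B) = Pr(A ∩ B) / Pr(B), defined only when Pr(B) > 0."""
    pr_b = pr(event_b)
    if pr_b == 0:
        raise ValueError("Pr(B) = 0: conditional probability is undefined")
    return pr(lambda o: event_a(o) and event_b(o)) / pr_b


def A(o):
    return sum(o) < 8       # T < 8


def B(o):
    return sum(o) % 2 == 1  # T is odd


print(pr(lambda o: A(o) and B(o)), pr(B), cond_pr(A, B))  # 1/3 1/2 2/3
```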

Example 2.4 (Example 2.1.4: A Clinical Trial) It is very common for patients with episodes of depression to have a recurrence within two to three years. Prien et al. (1984) studied three treatments for depression: imipramine, lithium carbonate, and a combination. As is traditional in such studies (called clinical trials), there was also a group of patients who received a placebo. (A placebo is a treatment that is supposed to be neither helpful nor harmful. Some patients are given a placebo so that they will not know that they did not receive one of the other treatments. None of the other patients knew which treatment or placebo they received, either.) In this example, we shall consider 150 patients who entered the study after an episode of depression that was classified as “unipolar” (meaning that there was no manic disorder). They were divided into the four groups (three treatments plus placebo) and followed to see how many had recurrences of depression. Table 2.1 summarizes the results.

If a patient were selected at random from this study and it were found that the patient received the placebo treatment, what is the conditional probability that the patient had a relapse? Let \(B\) be the event that the patient received the placebo, and let \(A\) be the event that the patient had a relapse. We can calculate \(\Pr(B) = 34/150\) and \(\Pr(A \cap B) = 24/150\) directly from the table. Then \(\Pr(A \mid B) = 24/34 = 0.706\). On the other hand, if the randomly selected patient is found to have received lithium (call this event \(C\)), then \(\Pr(C) = 38/150\), \(\Pr(A \cap C) = 13/150\), and \(\Pr(A \mid C) = 13/38 = 0.342\). Knowing which treatment a patient received seems to make a difference to the probability of relapse. In Chapter 10, we shall study methods for being more precise about how much of a difference it makes.

Table 2.1: Results of the depression study in Example 2.4, by treatment group.

| Response | Imipramine | Lithium | Combination | Placebo | Total |
|---|---|---|---|---|---|
| Relapse | 18 | 13 | 22 | 24 | 77 |
| No relapse | 22 | 25 | 16 | 10 | 73 |
| Total | 40 | 38 | 38 | 34 | 150 |
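The conditional probabilities in Example 2.4 come straight from the table counts. The following sketch (the dictionary layout and the function `pr_relapse_given` are our own) computes \(\Pr(\text{relapse} \mid \text{group})\) for every treatment group as \(\Pr(A \cap B)/\Pr(B)\).

```python
from fractions import Fraction

# Counts from the table in Example 2.4: (relapse, no relapse) by treatment group.
counts = {
    "Imipramine": (18, 22),
    "Lithium": (13, 25),
    "Combination": (22, 16),
    "Placebo": (24, 10),
}
total = sum(r + n for r, n in counts.values())  # 150 patients


def pr_relapse_given(group):
    """Pr(relapse | group) for one patient chosen at random from the study."""
    relapse, no_relapse = counts[group]
    pr_group = Fraction(relapse + no_relapse, total)  # Pr(B)
    pr_relapse_and_group = Fraction(relapse, total)   # Pr(A ∩ B)
    return pr_relapse_and_group / pr_group


for group in counts:
    print(f"{group:12s} {float(pr_relapse_given(group)):.3f}")
```

The placebo and lithium rows reproduce the values 0.706 and 0.342 found in Example 2.4.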

Example 2.5 (Example 2.1.5: Rolling Dice Repeatedly) Suppose that two dice are to be rolled repeatedly and the sum \(T\) of the two numbers is to be observed for each roll. We shall determine the probability \(p\) that the value \(T = 7\) will be observed before the value \(T = 8\) is observed.

The desired probability \(p\) could be calculated directly as follows: We could assume that the sample space \(S\) contains all sequences of outcomes that terminate as soon as either the sum \(T = 7\) or the sum \(T = 8\) is obtained. Then we could find the sum of the probabilities of all the sequences that terminate when the value \(T = 7\) is obtained.

However, there is a simpler approach in this example. We can consider the simple experiment in which two dice are rolled. If we repeat the experiment until either the sum \(T = 7\) or the sum \(T = 8\) is obtained, the effect is to restrict the outcome of the experiment to one of these two values. Hence, the problem can be restated as follows: Given that the outcome of the experiment is either \(T = 7\) or \(T = 8\), determine the probability \(p\) that the outcome is actually \(T = 7\).

If we let \(A\) be the event that \(T = 7\) and let \(B\) be the event that the value of \(T\) is either 7 or 8, then \(A \cap B = A\) and

\[ p = \Pr(A \mid B) = \frac{\Pr(A \cap B)}{\Pr(B)} = \frac{\Pr(A)}{\Pr(B)}. \]

From the probabilities for two dice given in Example 1.14, \(\Pr(A) = 6/36\) and \(\Pr(B) = (6/36) + (5/36) = 11/36\). Hence, \(p = 6/11\).
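The restriction argument in Example 2.5 can be checked against a direct simulation of repeated rolls. This is a sketch assuming fair dice rolled independently; `seven_before_eight` is a hypothetical helper, and the seed and number of games are arbitrary.

```python
import random
from fractions import Fraction


def seven_before_eight(rng):
    """Roll two dice until the sum is 7 or 8; return True if 7 came first."""
    while True:
        t = rng.randint(1, 6) + rng.randint(1, 6)
        if t == 7:
            return True
        if t == 8:
            return False


rng = random.Random(1)
n_games = 50_000
estimate = sum(seven_before_eight(rng) for _ in range(n_games)) / n_games

# Restricted-experiment answer: Pr(T = 7) / Pr(T = 7 or T = 8)
exact = Fraction(6, 36) / (Fraction(6, 36) + Fraction(5, 36))
print(exact, round(estimate, 3))  # 6/11 and an estimate near 0.545
```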

2.1.1 The Multiplication Rule for Conditional Probabilities

In some experiments, certain conditional probabilities are relatively easy to assign directly. In these experiments, it is then possible to compute the probability that both of two events occur by applying the following result, which follows directly from Equation 2.1 and the analogous definition of \(\Pr(B \mid A)\).

Theorem 2.1 (Theorem 2.1.1: Multiplication Rule for Conditional Probabilities) Let \(A\) and \(B\) be events. If \(\Pr(B) > 0\), then

\[ \Pr(A \cap B) = \Pr(B)\Pr(A \mid B). \]

If \(\Pr(A) > 0\), then

\[ \Pr(A \cap B) = \Pr(A)\Pr(B \mid A). \]

Example 2.6 (Example 2.1.6: Selecting Two Balls) Suppose that two balls are to be selected at random, without replacement, from a box containing \(r\) red balls and \(b\) blue balls. We shall determine the probability \(p\) that the first ball will be red and the second ball will be blue.

Let \(A\) be the event that the first ball is red, and let \(B\) be the event that the second ball is blue. Obviously, \(\Pr(A) = r/(r + b)\). Furthermore, if the event \(A\) has occurred, then one red ball has been removed from the box on the first draw. Therefore, the probability of obtaining a blue ball on the second draw will be

\[ \Pr(B \mid A) = \frac{b}{r + b - 1}. \]

It follows that

\[ \Pr(A \cap B) = \frac{r}{r + b}\cdot \frac{b}{r + b - 1}. \]
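The multiplication rule can be checked against brute-force counting over all ordered pairs of distinct balls. The function names below are illustrative, and small values of \(r\) and \(b\) keep the enumeration cheap.

```python
from fractions import Fraction
from itertools import permutations


def pr_red_then_blue(r, b):
    """Multiplication rule: Pr(A ∩ B) = Pr(A) Pr(B | A)."""
    return Fraction(r, r + b) * Fraction(b, r + b - 1)


def pr_red_then_blue_by_counting(r, b):
    """Brute force: every ordered pair of distinct balls is equally likely."""
    balls = ["red"] * r + ["blue"] * b
    pairs = list(permutations(range(r + b), 2))
    hits = sum(1 for i, j in pairs if balls[i] == "red" and balls[j] == "blue")
    return Fraction(hits, len(pairs))


print(pr_red_then_blue(3, 2), pr_red_then_blue_by_counting(3, 2))  # 3/10 3/10
```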

The principle that has just been applied can be extended to any finite number of events, as stated in the following theorem.

Theorem 2.2 (Theorem 2.1.2: Multiplication Rule for Conditional Probabilities) Suppose that \(A_1, A_2, \ldots, A_n\) are events such that \(\Pr(A_1 \cap A_2 \cap \cdots \cap A_{n-1}) > 0\). Then

\[ \begin{align*} &\Pr(A_1 \cap A_2 \cap \cdots \cap A_n) \\ &=\Pr(A_1)\Pr(A_2 \mid A_1)\Pr(A_3 \mid A_1 \cap A_2)\cdots \Pr(A_n \mid A_1 \cap A_2 \cap \cdots \cap A_{n-1}). \end{align*} \tag{2.2}\]

Proof. The product of probabilities on the right side of Equation 2.2 is equal to

\[ \Pr(A_1)\cdot \frac{\Pr(A_1 \cap A_2)}{\Pr(A_1)} \cdot \frac{\Pr(A_1 \cap A_2 \cap A_3)}{\Pr(A_1 \cap A_2)}\cdots \frac{\Pr(A_1 \cap A_2 \cap \cdots \cap A_n)}{\Pr(A_1 \cap A_2 \cap \cdots \cap A_{n-1})}. \]

Since \(\Pr(A_1 \cap A_2 \cap \cdots \cap A_{n-1}) > 0\), each of the denominators in this product must be positive. All of the terms in the product cancel each other except the final numerator \(\Pr(A_1 \cap A_2 \cap \cdots \cap A_n)\), which is the left side of Equation 2.2.

Example 2.7 (Example 2.1.7: Selecting Four Balls) Suppose that four balls are selected one at a time, without replacement, from a box containing \(r\) red balls and \(b\) blue balls (\(r \geq 2\), \(b \geq 2\)). We shall determine the probability of obtaining the sequence of outcomes red, blue, red, blue.

If we let \(R_j\) denote the event that a red ball is obtained on the \(j\)th draw and let \(B_j\) denote the event that a blue ball is obtained on the \(j\)th draw (\(j = 1, \ldots, 4\)), then

\[ \begin{align*} \Pr(R_1 \cap B_2 \cap R_3 \cap B_4) &= \Pr(R_1)\Pr(B_2 \mid R_1)\Pr(R_3 \mid R_1 \cap B_2)\Pr(B_4 \mid R_1 \cap B_2 \cap R_3) \\ &= \frac{r}{r+b} \cdot \frac{b}{r + b - 1} \cdot \frac{r-1}{r + b - 2} \cdot \frac{b - 1}{r + b - 3}. \end{align*} \]
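Theorem 2.2 suggests a simple procedure for any sequence of colors drawn without replacement: multiply, one draw at a time, the conditional probability of the next color given what has already been removed. The sketch below (hypothetical function names; colors written as `"R"` and `"B"`) does this and compares the result with brute-force enumeration of ordered draws.

```python
from fractions import Fraction
from itertools import permutations


def pr_color_sequence(r, b, sequence):
    """Multiplication rule (Theorem 2.2) for draws without replacement.

    `sequence` is a string of "R" (red) and "B" (blue). Each factor is the
    conditional probability of the next color given the colors already drawn.
    """
    red, blue = r, b
    prob = Fraction(1)
    for color in sequence:
        available = red if color == "R" else blue
        if available == 0:
            return Fraction(0)  # this color cannot be drawn any more
        prob *= Fraction(available, red + blue)
        if color == "R":
            red -= 1
        else:
            blue -= 1
    return prob


def pr_color_sequence_by_counting(r, b, sequence):
    """Brute force: all ordered draws of len(sequence) distinct balls are equally likely."""
    balls = "R" * r + "B" * b
    draws = list(permutations(range(r + b), len(sequence)))
    hits = sum(1 for d in draws if "".join(balls[i] for i in d) == sequence)
    return Fraction(hits, len(draws))


print(pr_color_sequence(3, 3, "RBRB"), pr_color_sequence_by_counting(3, 3, "RBRB"))  # 1/10 1/10
```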

Note: Conditional Probabilities Behave Just Like Probabilities. In all of the situations that we shall encounter in this text, every result that we can prove has a conditional version given an event \(B\) with \(\Pr(B) > 0\). Just replace all probabilities by conditional probabilities given \(B\) and replace all conditional probabilities given other events \(C\) by conditional probabilities given \(C \cap B\). For example, Theorem 1.14 says that \(\Pr(A^c) = 1 − \Pr(A)\). It is easy to prove that \(\Pr(A^c \mid B) = 1 − \Pr(A \mid B)\) if \(\Pr(B) > 0\). (See Exercises 2.11 and 2.12 in this section.) Another example is Theorem 2.3, which is a conditional version of the multiplication rule Theorem 2.2. Although a proof is given for Theorem 2.3, we shall not provide proofs of all such conditional theorems, because their proofs are generally very similar to the proofs of the unconditional versions.

Theorem 2.3 (Theorem 2.1.3) Suppose that \(A_1, A_2, \ldots, A_n\), \(B\) are events such that \(\Pr(B) > 0\) and \(\Pr(A_1 \cap A_2 \cap \cdots \cap A_{n-1} \mid B) > 0\). Then

\[ \begin{align*} \Pr(A_1 \cap A_2 \cap \cdots \cap A_n \mid B) = &\Pr(A_1 \mid B)\Pr(A_2 \mid A_1 \cap B)\cdots \\ &\cdot \Pr(A_n \mid A_1 \cap A_2 \cap \cdots \cap A_{n-1} \cap B). \end{align*} \tag{2.3}\]

Proof. The product of probabilities on the right side of Equation 2.3 is equal to

\[ \frac{\Pr(A_1 \cap B)}{\Pr(B)} \cdot \frac{\Pr(A_1 \cap A_2 \cap B)}{\Pr(A_1 \cap B)} \cdots \frac{\Pr(A_1 \cap A_2 \cap \cdots \cap A_n \cap B)}{\Pr(A_1 \cap A_2 \cap \cdots \cap A_{n-1} \cap B)}. \]

Since \(\Pr(A_1 \cap A_2 \cap \cdots \cap A_{n-1} \mid B) > 0\), each of the denominators in this product must be positive. All of the terms in the product cancel each other except the first denominator and the final numerator to yield \(\Pr(A_1 \cap A_2 \cap \cdots \cap A_n \cap B) / \Pr(B)\), which is the left side of Equation 2.3.

2.1.2 Conditional Probability and Partitions

Theorem 1.11 shows how to calculate the probability of an event by partitioning the sample space into two events \(B\) and \(B^c\). This result easily generalizes to larger partitions, and when combined with Theorem 2.1 it leads to a very powerful tool for calculating probabilities.

Definition 2.2 (Definition 2.1.2: Partition) Let \(S\) denote the sample space of some experiment, and consider \(k\) events \(B_1, \ldots, B_k\) in \(S\) such that \(B_1, \ldots, B_k\) are disjoint and \(\bigcup_{i=1}^kB_i = S\). It is said that these events form a partition of \(S\).

Typically, the events that make up a partition are chosen so that an important source of uncertainty in the problem is reduced if we learn which event has occurred.

Example 2.8 (Example 2.1.8: Selecting Bolts) Two boxes contain long bolts and short bolts. Suppose that one box contains 60 long bolts and 40 short bolts, and that the other box contains 10 long bolts and 20 short bolts. Suppose also that one box is selected at random and a bolt is then selected at random from that box. We would like to determine the probability that this bolt is long.

Partitions can facilitate the calculation of probabilities of certain events.

Theorem 2.4 (Theorem 2.1.4: Law of Total Probability) Suppose that the events \(B_1, \ldots, B_k\) form a partition of the space \(S\) and \(\Pr(B_j) > 0\) for \(j = 1, \ldots, k\). Then, for every event \(A\) in \(S\),

\[ \Pr(A) = \sum_{j=1}^k \Pr(B_j)\Pr(A \mid B_j). \tag{2.4}\]

Proof. The events \(B_1 \cap A, B_2 \cap A, \ldots, B_k \cap A\) will form a partition of \(A\), as illustrated in Figure 2.2. Hence, we can write

\[ A = (B_1 \cap A) \cup (B_2 \cap A) \cup \cdots \cup (B_k \cap A). \]

Furthermore, since the \(k\) events on the right side of this equation are disjoint,

\[ \Pr(A) = \sum_{j=1}^k \Pr(B_j \cap A). \]

Finally, if \(\Pr(B_j) > 0\) for \(j = 1, \ldots, k\), then \(\Pr(B_j \cap A) = \Pr(B_j)\Pr(A \mid B_j)\) and it follows that Equation 2.4 holds.

Example 2.9 (Example 2.1.9: Selecting Bolts) In Example 2.8, let \(B_1\) be the event that the first box (the one with 60 long and 40 short bolts) is selected, let \(B_2\) be the event that the second box (the one with 10 long and 20 short bolts) is selected, and let \(A\) be the event that a long bolt is selected. Then

\[ \Pr(A) = \Pr(B_1) \Pr(A \mid B_1) + \Pr(B_2) \Pr(A \mid B_2). \]

Since a box is selected at random, we know that \(\Pr(B_1) = \Pr(B_2) = 1/2\). Furthermore, the probability of selecting a long bolt from the first box is \(\Pr(A \mid B_1) = 60/100 = 3/5\), and the probability of selecting a long bolt from the second box is \(\Pr(A \mid B_2) = 10/30 = 1/3\). Hence,

\[ \Pr(A) = \frac{1}{2}\cdot \frac{3}{5} + \frac{1}{2} \cdot \frac{1}{3} = \frac{7}{15}. \]
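The law of total probability is a weighted sum, which the following minimal sketch writes out directly. The function `total_probability` is our own name; it expects exact `Fraction` values so that the check that the partition probabilities sum to 1 is reliable.

```python
from fractions import Fraction


def total_probability(priors, likelihoods):
    """Return Pr(A) = sum_j Pr(B_j) * Pr(A | B_j).

    priors[j] is Pr(B_j) for events B_1, ..., B_k forming a partition, and
    likelihoods[j] is Pr(A | B_j). Exact Fractions are expected.
    """
    if len(priors) != len(likelihoods):
        raise ValueError("need one conditional probability per partition event")
    if sum(priors) != 1:
        raise ValueError("the partition probabilities must sum to 1")
    return sum(p * q for p, q in zip(priors, likelihoods))


# Example 2.9: each box is chosen with probability 1/2.
priors = [Fraction(1, 2), Fraction(1, 2)]
likelihoods = [Fraction(60, 100), Fraction(10, 30)]  # Pr(long bolt | box j)
print(total_probability(priors, likelihoods))  # 7/15
```

Because Example 2.11 uses the same partition probabilities and conditional probabilities, the same call gives its answer as well.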

Example 2.10 (Example 2.1.10: Achieving a High Score) Suppose that a person plays a game in which his score must be one of the 50 numbers \(1, 2, \ldots, 50\) and that each of these 50 numbers is equally likely to be his score. The first time he plays the game, his score is \(X\). He then continues to play the game until he obtains another score \(Y\) such that \(Y \geq X\). We will assume that, conditional on previous plays, the 50 scores remain equally likely on all subsequent plays. We shall determine the probability of the event \(A\) that \(Y = 50\).

For each \(i = 1, \ldots, 50\), let \(B_i\) be the event that \(X = i\). Conditional on \(B_i\), the value of \(Y\) is equally likely to be any one of the numbers \(i, i + 1, \ldots, 50\). Since each of these \((51− i)\) possible values for \(Y\) is equally likely, it follows that

\[ \Pr(A \mid B_i) = \Pr(Y = 50 \mid B_i) = \frac{1}{51-i}. \]

Furthermore, since the probability of each of the 50 values of \(X\) is \(1/50\), it follows that \(\Pr(B_i) = 1/50\) for all \(i\) and

\[ \Pr(A) = \sum_{i=1}^{50}\frac{1}{50}\cdot\frac{1}{51-i} = \frac{1}{50}\left(1 + \frac{1}{2} + \frac{1}{3} + \cdots + \frac{1}{50}\right) = 0.0900. \]
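The sum in Example 2.10 is easy to evaluate exactly, and a simulation of the game itself offers an independent check of the conditional reasoning. The simulation assumes, as the example does, that every play is uniform on \(1, \ldots, 50\) regardless of previous plays; `play` and the seed are illustrative.

```python
import random
from fractions import Fraction

# Exact value: Pr(B_i) = 1/50 and Pr(A | B_i) = 1/(51 - i) for i = 1, ..., 50.
pr_A = sum(Fraction(1, 50) * Fraction(1, 51 - i) for i in range(1, 51))


def play(rng):
    """One game: score X, then keep playing until a score Y >= X; return Y."""
    x = rng.randint(1, 50)
    while True:
        y = rng.randint(1, 50)
        if y >= x:
            return y


rng = random.Random(3)
n_games = 100_000
estimate = sum(play(rng) == 50 for _ in range(n_games)) / n_games
print(float(pr_A), estimate)  # about 0.0900, and an estimate close to it
```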

Note: Conditional Version of Law of Total Probability. The law of total probability has an analog conditional on another event \(C\), namely,

\[ \Pr(A \mid C) = \sum_{j=1}^k \Pr(B_j \mid C)\Pr(A \mid B_j \cap C). \tag{2.5}\]

The reader can prove this in Exercise 2.17.

Augmented Experiment: In some experiments, it may not be clear from the initial description of the experiment that a partition exists that will facilitate the calculation of probabilities. However, there are many such experiments in which such a partition exists if we imagine that the experiment has some additional structure. Consider the following modification of Examples 2.8 and 2.9.

Example 2.11 (Example 2.1.11: Selecting Bolts) There is one box of bolts that contains some long and some short bolts. A manager is unable to open the box at present, so she asks her employees what the composition of the box is. One employee says that it contains 60 long bolts and 40 short bolts. Another says that it contains 10 long bolts and 20 short bolts. Unable to reconcile these opinions, the manager decides that each of the employees is correct with probability \(1/2\). Let \(B_1\) be the event that the box contains 60 long and 40 short bolts, and let \(B_2\) be the event that the box contains 10 long and 20 short bolts. The probability that the first bolt selected is long is now calculated precisely as in Example 2.9.

In Example 2.11, there is only one box of bolts, but we believe that it has one of two possible compositions. We let the events \(B_1\) and \(B_2\) determine the possible compositions. This type of situation is very common in experiments.

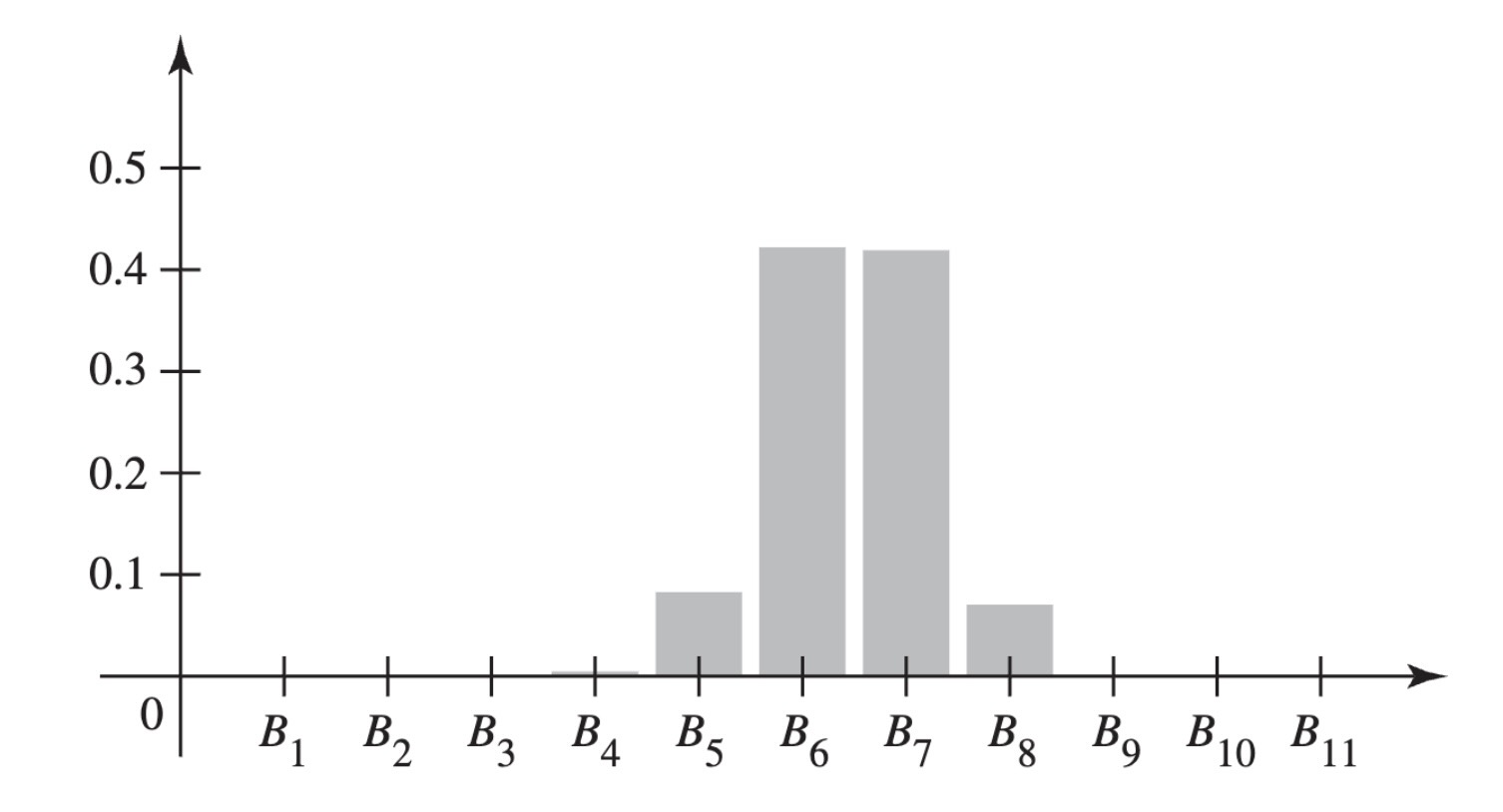

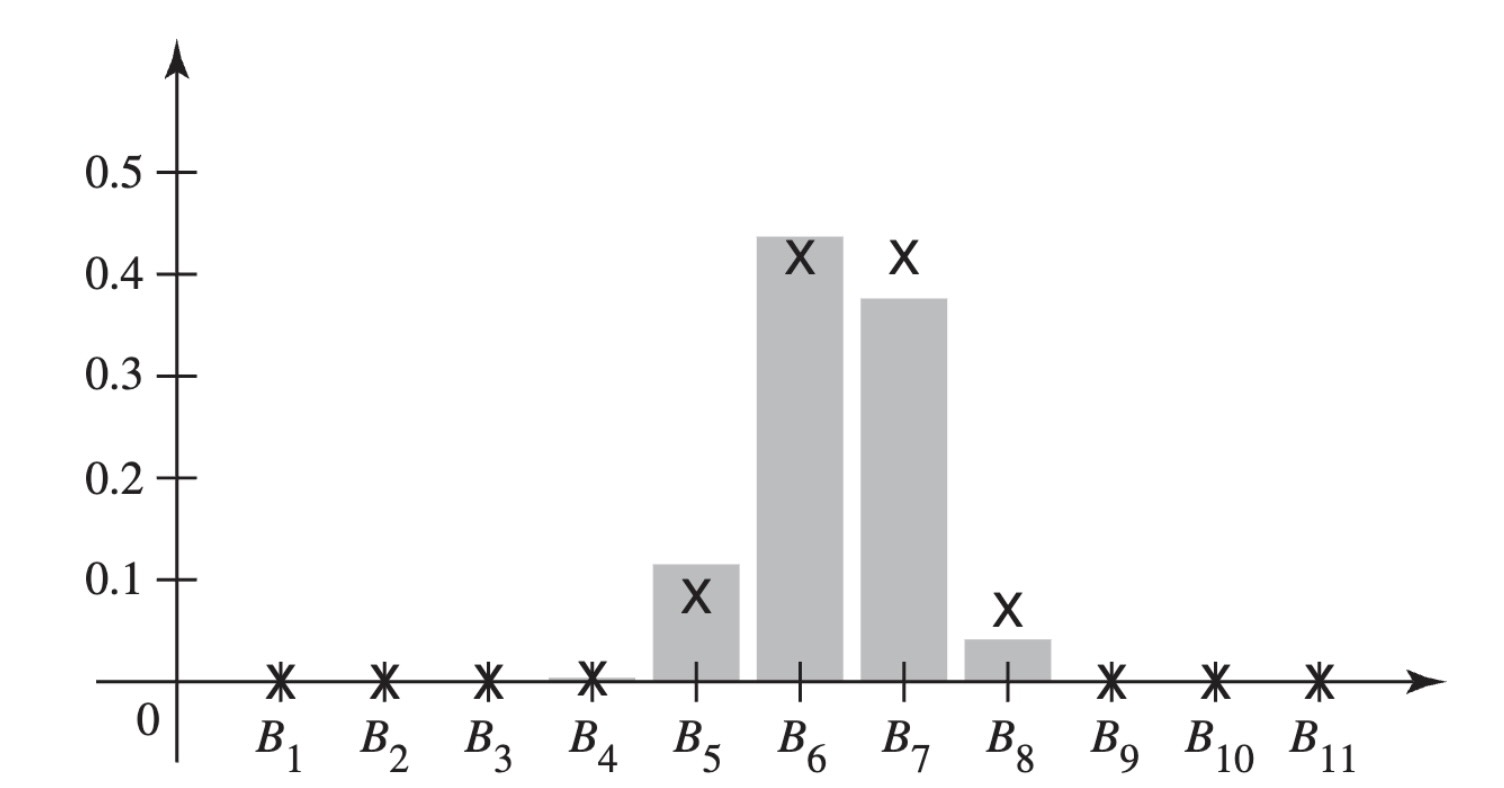

Example 2.12 (Example 2.1.12: A Clinical Trial) Consider a clinical trial such as the study of treatments for depression in Example 2.4. As in many such trials, each patient has two possible outcomes, in this case relapse and no relapse. We shall refer to relapse as “failure” and no relapse as “success.” For now, we shall consider only patients in the imipramine treatment group. If we knew the effectiveness of imipramine, that is, the proportion \(p\) of successes among all patients who might receive the treatment, then we might model the patients in our study as having probability \(p\) of success. Unfortunately, we do not know \(p\) at the start of the trial. In analogy to the box of bolts with unknown composition in Example 2.11, we can imagine that the collection of all available patients (from which the 40 imipramine patients in this trial were selected) has two or more possible compositions. We can imagine that the composition of the collection of patients determines the proportion that will be successes. For simplicity, in this example, we imagine that there are 11 different possible compositions of the collection of patients. In particular, we assume that the proportions of success for the 11 possible compositions are \(0, 1/10, \ldots, 9/10, 1\). (We shall be able to handle more realistic models for \(p\) in Chapter 3.) For example, if we knew that our patients were drawn from a collection with the proportion \(3/10\) of successes, we would be comfortable saying that the patients in our sample each have success probability \(p = 3/10\). The value of \(p\) is an important source of uncertainty in this problem, and we shall partition the sample space by the possible values of \(p\). For \(j = 1, \ldots, 11\), let \(B_j\) be the event that our sample was drawn from a collection with proportion \((j − 1)/10\) of successes. We can also identify \(B_j\) as the event \(\{p = (j − 1)/10\}\).

Now, let \(E_1\) be the event that the first patient in the imipramine group has a success. We defined each event \(B_j\) so that \(\Pr(E_1 \mid B_j) = (j − 1)/10\). Suppose that, prior to starting the trial, we believe that \(\Pr(B_j) = 1/11\) for each \(j\). It follows that

\[ \Pr(E_1) = \sum_{j=1}^{11}\frac{1}{11}\frac{j-1}{10} = \frac{55}{110} = \frac{1}{2}, \tag{2.6}\]

where the second equality uses the fact that \(\sum_{j=1}^n j = n(n+1)/2\).
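Equation 2.6 is the law of total probability over the 11-event partition. A minimal sketch with exact fractions (the variable names are our own):

```python
from fractions import Fraction

# Partition: B_j = {p = (j - 1)/10}, j = 1, ..., 11, each with prior 1/11.
values_of_p = [Fraction(j - 1, 10) for j in range(1, 12)]
prior = Fraction(1, 11)

# Pr(E_1) = sum_j Pr(B_j) Pr(E_1 | B_j), with Pr(E_1 | B_j) = (j - 1)/10.
pr_E1 = sum(prior * p for p in values_of_p)
print(pr_E1)  # 1/2
```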

The events \(B_1, B_2, \ldots, B_{11}\) in Example 2.12 can be thought of in much the same way as the two events \(B_1\) and \(B_2\) that determine the mixture of long and short bolts in Example 2.11. There is only one box of bolts, but there is uncertainty about its composition. Similarly in Example 2.12, there is only one group of patients, but we believe that it has one of 11 possible compositions determined by the events \(B_1, B_2, \ldots, B_{11}\). For these to be events, they must be subsets of the sample space for the experiment in question. That will be the case in Example 2.12 if we imagine that the experiment consists not only of observing the numbers of successes and failures among the patients but also of potentially observing enough additional patients to be able to compute \(p\), possibly at some time very far in the future. Similarly, in Example 2.11, the two events \(B_1\) and \(B_2\) are subsets of the sample space if we imagine that the experiment consists not only of observing one sample bolt but also of potentially observing the entire composition of the box.

Throughout the remainder of this text, we shall implicitly assume that experiments are augmented to include outcomes that determine the values of quantities such as \(p\). We shall not require that we ever get to observe the complete outcome of the experiment so as to tell us precisely what \(p\) is, but merely that there is an experiment that includes all of the events of interest to us, including those that determine quantities like \(p\).

Definition 2.3 (Definition 2.1.3: Augmented Experiment) If desired, any experiment can be augmented to include the potential or hypothetical observation of as much additional information as we would find useful to help us calculate any probabilities that we desire.

Definition 2.3 is worded somewhat vaguely because it is intended to cover a wide variety of cases. Here is an explicit application to Example 2.12.

Example 2.13 (Example 2.1.13: A Clinical Trial) In Example 2.12, we could explicitly assume that there exists an infinite sequence of patients who could be treated with imipramine even though we will observe only finitely many of them. We could let the sample space consist of infinite sequences of the two symbols \(S\) and \(F\) such as \((S, S, F, S, F, F, F, \ldots)\). Here \(S\) in coordinate \(i\) means that the \(i\)th patient is a success, and \(F\) stands for failure. So, the event \(E_1\) in Example 2.12 is the event that the first coordinate is \(S\). The example sequence above is then in the event \(E_1\). To accommodate our interpretation of \(p\) as the proportion of successes, we can assume that, for every such sequence, the proportion of \(S\)’s among the first \(n\) coordinates gets close to one of the numbers \(0, 1/10, \ldots, 9/10, 1\) as \(n\) increases. In this way, \(p\) is explicitly the limit of the proportion of successes we would observe if we could find a way to observe indefinitely. In Example 2.12, \(B_2\) is the event consisting of all the outcomes in which the limit of the proportion of \(S\)’s equals \(1/10\), \(B_3\) is the set of outcomes in which the limit is \(2/10\), etc. Also, we observe only the first 40 coordinates of the infinite sequence, but we still behave as if \(p\) exists and could be determined if only we could observe forever.
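The augmented experiment of Example 2.13 can be mimicked by first choosing \(p\) and then generating a long run of patients. This sketch goes beyond the text in one respect, labeled as an assumption: given \(p\), patients are generated as independent successes with probability \(p\). The prior on \(p\) is the uniform one from Example 2.12, and `augmented_outcome` is a hypothetical helper. The proportion of \(S\)'s in a long run settles near the chosen \(p\), and across many outcomes the first coordinate is \(S\) about half the time, in line with Equation 2.6.

```python
import random


def augmented_outcome(n_patients, rng):
    """Return (p, first n_patients coordinates) of one augmented outcome.

    Assumptions for this sketch: p is uniform on {0, 1/10, ..., 1}, as in
    Example 2.12, and given p each patient is a success ("S") independently
    with probability p; otherwise a failure ("F").
    """
    p = rng.randint(0, 10) / 10
    sequence = ["S" if rng.random() < p else "F" for _ in range(n_patients)]
    return p, sequence


rng = random.Random(2)

# One long run: the proportion of S's should be close to the p that generated it.
p, seq = augmented_outcome(100_000, rng)
proportion = seq.count("S") / len(seq)
print(p, proportion)

# Many outcomes, looking only at the first coordinate (the event E_1).
first_is_success = [augmented_outcome(1, rng)[1][0] == "S" for _ in range(50_000)]
print(sum(first_is_success) / len(first_is_success))  # close to 1/2
```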

In the remainder of the text, there will be many experiments that we assume are augmented. In such cases, we will mention which quantities (such as \(p\) in Example 2.13) would be determined by the augmented part of the experiment even if we do not explicitly mention that the experiment is augmented.

2.1.3 The Game of Craps

We shall conclude this section by discussing a popular gambling game called craps. One version of this game is played as follows: A player rolls two dice, and the sum of the two numbers that appear is observed. If the sum on the first roll is 7 or 11, the player wins the game immediately. If the sum on the first roll is 2, 3, or 12, the player loses the game immediately. If the sum on the first roll is 4, 5, 6, 8, 9, or 10, then the two dice are rolled again and again until the sum is either 7 or the original value. If the original value is obtained a second time before 7 is obtained, then the player wins. If the sum 7 is obtained before the original value is obtained a second time, then the player loses.

We shall now compute the probability \(\Pr(W)\), where \(W\) is the event that the player will win. Let the sample space \(S\) consist of all possible sequences of sums from the rolls of dice that might occur in a game. For example, some of the elements of \(S\) are \((4, 7)\), \((11)\), \((4, 3, 4)\), \((12)\), \((10, 8, 2, 12, 6, 7)\), etc. We see that \((11) \in W\) but \((4, 7) \in W^c\), etc. We begin by noticing that whether or not an outcome is in \(W\) depends in a crucial way on the first roll. For this reason, it makes sense to partition \(W\) according to the sum on the first roll. Let \(B_i\) be the event that the first roll is \(i\) for \(i = 2, \ldots, 12\).

Theorem 2.4 tells us that \(\Pr(W) = \sum_{i=2}^{12}\Pr(B_i)\Pr(W \mid B_i)\). Since \(\Pr(B_i)\) for each \(i\) was computed in Example 1.14, we need to determine \(\Pr(W \mid B_i)\) for each \(i\). We begin with \(i = 2\). Because the player loses if the first roll is 2, we have \(\Pr(W \mid B_2) = 0\). Similarly, \(\Pr(W \mid B_3) = 0 = \Pr(W \mid B_{12})\). Also, \(\Pr(W \mid B_7) = 1\) because the player wins if the first roll is 7. Similarly, \(\Pr(W \mid B_{11}) = 1\).

For each first roll \(i \in \{4, 5, 6, 8, 9, 10\}\), \(\Pr(W \mid B_i)\) is the probability that, in a sequence of dice rolls, the sum \(i\) will be obtained before the sum 7 is obtained. As described in Example 2.5, this probability is the same as the probability of obtaining the sum \(i\) when the sum must be either \(i\) or 7. Hence,

\[ \Pr(W \mid B_i) = \frac{\Pr(B_i)}{\Pr(B_i \cup B_7)}. \]

We compute the necessary values here:

\[ \begin{align*} \Pr(W \mid B_4) &= \frac{\frac{3}{36}}{\frac{3}{36} + \frac{6}{36}} = \frac{1}{3}, & \Pr(W \mid B_5) &= \frac{\frac{4}{36}}{\frac{4}{36} + \frac{6}{36}} = \frac{2}{5}, \\ \Pr(W \mid B_6) &= \frac{\frac{5}{36}}{\frac{5}{36} + \frac{6}{36}} = \frac{5}{11}, & \Pr(W \mid B_8) &= \frac{\frac{5}{36}}{\frac{5}{36} + \frac{6}{36}} = \frac{5}{11}, \\ \Pr(W \mid B_9) &= \frac{\frac{4}{36}}{\frac{4}{36} + \frac{6}{36}} = \frac{2}{5}, & \Pr(W \mid B_{10}) &= \frac{\frac{3}{36}}{\frac{3}{36} + \frac{6}{36}} = \frac{1}{3}. \end{align*} \]

Finally, we compute the sum \(\sum_{i=2}^{12}\Pr(B_i)\Pr(W \mid B_i)\):

\[ \begin{align*} \Pr(W) &= \sum_{i=2}^{12}\Pr(B_i)\Pr(W \mid B_i) = 0 + 0 + \frac{3}{36}\frac{1}{3} + \frac{4}{36}\frac{2}{5} + \frac{5}{36}\frac{5}{11} + \frac{6}{36} \\ &+ \frac{5}{36}\frac{5}{11} + \frac{4}{36}\frac{2}{5} + \frac{3}{36}\frac{1}{3} + \frac{2}{36} + 0 = \frac{2928}{5940} = 0.493. \end{align*} \]

Thus, the probability of winning in the game of craps is slightly less than \(1/2\).
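The whole craps calculation fits in a few lines: a table of \(\Pr(B_i)\), the conditional win probabilities \(\Pr(W \mid B_i)\), and the law of total probability. This is a sketch with our own names (`pr_sum`, `pr_win_given_first`); the expression \((6 - |i - 7|)/36\) is simply a compact way to write the two-dice probabilities from Example 1.14.

```python
from fractions import Fraction

# Pr(B_i): probability that the sum of two fair dice equals i.
pr_sum = {i: Fraction(6 - abs(i - 7), 36) for i in range(2, 13)}


def pr_win_given_first(i):
    """Pr(W | B_i) for the version of craps described above."""
    if i in (7, 11):
        return Fraction(1)
    if i in (2, 3, 12):
        return Fraction(0)
    # Point i: win if i reappears before 7 (the restriction argument of Example 2.5).
    return pr_sum[i] / (pr_sum[i] + pr_sum[7])


# Law of total probability over the partition B_2, ..., B_12.
pr_win = sum(pr_sum[i] * pr_win_given_first(i) for i in range(2, 13))
print(pr_win, float(pr_win))  # 244/495, about 0.493
```

Note that \(244/495\) is \(2928/5940\) reduced to lowest terms.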

2.1.4 Summary

The revised probability of an event \(A\) after learning that event \(B\) (with \(\Pr(B) > 0\)) has occurred is the conditional probability of \(A\) given \(B\), denoted by \(\Pr(A \mid B)\) and computed as \(\Pr(A \cap B) / \Pr(B)\). Often it is easy to assess a conditional probability, such as \(\Pr(A \mid B)\), directly. In such a case, we can use the multiplication rule for conditional probabilities to compute \(\Pr(A \cap B) = \Pr(B)\Pr(A \mid B)\). All probability results have versions conditional on an event \(B\) with \(\Pr(B) > 0\): Just change all probabilities so that they are conditional on \(B\) in addition to anything else they were already conditional on. For example, the multiplication rule for conditional probabilities becomes \(\Pr(A_1 \cap A_2 \mid B) = \Pr(A_1 \mid B)\Pr(A_2 \mid A_1 \cap B)\). A partition is a collection of disjoint events whose union is the whole sample space. To be most useful, a partition is chosen so that an important source of uncertainty is reduced if we learn which one of the partition events occurs. If the conditional probability of an event \(A\) is available given each event in a partition, the law of total probability tells how to combine these conditional probabilities to get \(\Pr(A)\).

2.1.5 Exercises

Exercise 2.1 (Exercise 2.1.1) If \(A \subset B\) with \(\Pr(B) > 0\), what is the value of \(\Pr(A \mid B)\)?

Exercise 2.2 (Exercise 2.1.2) If \(A\) and \(B\) are disjoint events and \(\Pr(B) > 0\), what is the value of \(\Pr(A \mid B)\)?

Exercise 2.3 (Exercise 2.1.3) If \(S\) is the sample space of an experiment and \(A\) is any event in that space, what is the value of \(\Pr(A \mid S)\)?

Exercise 2.4 (Exercise 2.1.4) Each time a shopper purchases a tube of toothpaste, he chooses either brand \(A\) or brand \(B\). Suppose that for each purchase after the first, the probability is \(1/3\) that he will choose the same brand that he chose on his preceding purchase and the probability is \(2/3\) that he will switch brands. If he is equally likely to choose either brand \(A\) or brand \(B\) on his first purchase, what is the probability that both his first and second purchases will be brand \(A\) and both his third and fourth purchases will be brand \(B\)?

Exercise 2.5 (Exercise 2.1.5) A box contains \(r\) red balls and \(b\) blue balls. One ball is selected at random and its color is observed. The ball is then returned to the box and \(k\) additional balls of the same color are also put into the box. A second ball is then selected at random, its color is observed, and it is returned to the box together with \(k\) additional balls of the same color. Each time another ball is selected, the process is repeated. If four balls are selected, what is the probability that the first three balls will be red and the fourth ball will be blue?

Exercise 2.6 (Exercise 2.1.6) A box contains three cards. One card is red on both sides, one card is green on both sides, and one card is red on one side and green on the other. One card is selected from the box at random, and the color on one side is observed. If this side is green, what is the probability that the other side of the card is also green?

Exercise 2.7 (Exercise 2.1.7) Consider again the conditions of Exercise 1.83 of Section 1.10. If a family selected at random from the city subscribes to newspaper \(A\), what is the probability that the family also subscribes to newspaper \(B\)?

Exercise 2.8 (Exercise 2.1.8) Consider again the conditions of Exercise 1.83 of Section 1.10. If a family selected at random from the city subscribes to at least one of the three newspapers \(A\), \(B\), and \(C\), what is the probability that the family subscribes to newspaper \(A\)?

Exercise 2.9 (Exercise 2.1.9) Suppose that a box contains one blue card and four red cards, which are labeled \(A\), \(B\), \(C\), and \(D\). Suppose also that two of these five cards are selected at random, without replacement.

- If it is known that card \(A\) has been selected, what is the probability that both cards are red?

- If it is known that at least one red card has been selected, what is the probability that both cards are red?

Exercise 2.10 (Exercise 2.1.10) Consider the following version of the game of craps: The player rolls two dice. If the sum on the first roll is 7 or 11, the player wins the game immediately. If the sum on the first roll is 2, 3, or 12, the player loses the game immediately. However, if the sum on the first roll is 4, 5, 6, 8, 9, or 10, then the two dice are rolled again and again until the sum is either 7 or 11 or the original value. If the original value is obtained a second time before either 7 or 11 is obtained, then the player wins. If either 7 or 11 is obtained before the original value is obtained a second time, then the player loses. Determine the probability that the player will win this game.

Exercise 2.11 (Exercise 2.1.11) For any two events \(A\) and \(B\) with \(\Pr(B) > 0\), prove that \(\Pr(A^c \mid B) = 1 − \Pr(A \mid B)\).

Exercise 2.12 (Exercise 2.1.12) For any three events \(A\), \(B\), and \(D\), such that \(\Pr(D) > 0\), prove that \(\Pr(A \cup B \mid D) = \Pr(A \mid D) + \Pr(B \mid D) − \Pr(A \cap B \mid D)\).

Exercise 2.13 (Exercise 2.1.13) A box contains three coins with a head on each side, four coins with a tail on each side, and two fair coins. If one of these nine coins is selected at random and tossed once, what is the probability that a head will be obtained?

Exercise 2.14 (Exercise 2.1.14) A machine produces defective parts with three different probabilities depending on its state of repair. If the machine is in good working order, it produces defective parts with probability 0.02. If it is wearing down, it produces defective parts with probability 0.1. If it needs maintenance, it produces defective parts with probability 0.3. The probability that the machine is in good working order is 0.8, the probability that it is wearing down is 0.1, and the probability that it needs maintenance is 0.1. Compute the probability that a randomly selected part will be defective.

Exercise 2.15 (Exercise 2.1.15) The percentages of voters classed as Liberals in three different election districts are divided as follows: in the first district, 21 percent; in the second district, 45 percent; and in the third district, 75 percent. If a district is selected at random and a voter is selected at random from that district, what is the probability that she will be a Liberal?

Exercise 2.16 (Exercise 2.1.16) Consider again the shopper described in Exercise 2.4. On each purchase, the probability that he will choose the same brand of toothpaste that he chose on his preceding purchase is \(1/3\), and the probability that he will switch brands is \(2/3\). Suppose that on his first purchase the probability that he will choose brand \(A\) is \(1/4\) and the probability that he will choose brand \(B\) is \(3/4\). What is the probability that his second purchase will be brand \(B\)?

Exercise 2.17 (Exercise 2.1.17) Prove the conditional version of the law of total probability (Equation 2.5).

2.2 Independent Events

If learning that \(B\) has occurred does not change the probability of \(A\), then we say that \(A\) and \(B\) are independent. There are many cases in which events \(A\) and \(B\) are not independent, but they would be independent if we learned that some other event \(C\) had occurred. In this case, \(A\) and \(B\) are conditionally independent given \(C\).

Example 2.14 (Example 2.2.1: Tossing Coins) Suppose that a fair coin is tossed twice. The experiment has four outcomes, \(HH\), \(HT\), \(TH\), and \(TT\), that tell us how the coin landed on each of the two tosses. We can assume that this sample space is simple so that each outcome has probability \(1/4\). Suppose that we are interested in the second toss. In particular, we want to calculate the probability of the event \(A = \{H\text{ on second toss}\}\). We see that \(A = \{HH, TH\}\), so that \(\Pr(A) = 2/4 = 1/2\). If we learn that the first coin landed \(T\), we might wish to compute the conditional probability \(\Pr(A \mid B)\) where \(B = \{T\text{ on first toss}\}\). Using the definition of conditional probability, we easily compute

\[ \Pr(A \mid B) = \frac{\Pr(A \cap B)}{\Pr(B)} = \frac{1/4}{1/2} = \frac{1}{2}, \]

because \(A \cap B = \{TH\}\) has probability \(1/4\). We see that \(\Pr(A \mid B) = \Pr(A)\); hence, we don’t change the probability of \(A\) even after we learn that \(B\) has occurred.
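The calculation in Example 2.14 can be checked by enumerating the simple sample space directly. The sketch below represents each outcome as a two-letter string and each event as a set of outcomes; the helper `prob` is an illustrative name, not part of the text.

```python
from fractions import Fraction
from itertools import product

# Simple sample space for two tosses of a fair coin: HH, HT, TH, TT, each with probability 1/4.
outcomes = ["".join(t) for t in product("HT", repeat=2)]

def prob(event):
    """Probability of an event (a set of outcomes) in the simple sample space."""
    return Fraction(len(event), len(outcomes))

A = {w for w in outcomes if w[1] == "H"}  # H on second toss
B = {w for w in outcomes if w[0] == "T"}  # T on first toss

pr_A_given_B = prob(A & B) / prob(B)  # definition of conditional probability
print(prob(A), pr_A_given_B)  # 1/2 1/2
```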

2.2.1 Definition of Independence

The conditional probability of the event \(A\) given that the event \(B\) has occurred is the revised probability of \(A\) after we learn that \(B\) has occurred. It might be the case, however, that no revision is necessary to the probability of \(A\) even after we learn that \(B\) occurs. This is precisely what happened in Example 2.14. In this case, we say that \(A\) and \(B\) are independent events. As another example, if we toss a coin and then roll a die, we could let \(A\) be the event that the die shows 3 and let \(B\) be the event that the coin lands with heads up. If the tossing of the coin is done in isolation from the rolling of the die, we might be quite comfortable assigning \(\Pr(A \mid B) = \Pr(A) = 1/6\). Here, too, \(A\) and \(B\) are independent events.

In general, if \(\Pr(B) > 0\), the equation \(\Pr(A \mid B) = \Pr(A)\) can be rewritten as \(\Pr(A \cap B) / \Pr(B) = \Pr(A)\). If we multiply both sides of this last equation by \(\Pr(B)\), we obtain the equation \(\Pr(A \cap B) = \Pr(A)\Pr(B)\). In order to avoid the condition \(\Pr(B) > 0\), the mathematical definition of the independence of two events is stated as follows:

Definition 2.4 (Definition 2.2.1: Independent Events) Two events \(A\) and \(B\) are independent if

\[ \Pr(A \cap B) = \Pr(A)\Pr(B). \]

Suppose that \(\Pr(A) > 0\) and \(\Pr(B) > 0\). Then it follows easily from the definitions of independence and conditional probability that \(A\) and \(B\) are independent if and only if \(\Pr(A \mid B) = \Pr(A)\) and \(\Pr(B \mid A) = \Pr(B)\).

2.2.2 Independence of Two Events

If two events \(A\) and \(B\) are considered to be independent because the events are physically unrelated, and if the probabilities \(\Pr(A)\) and \(\Pr(B)\) are known, then the definition can be used to assign a value to \(\Pr(A \cap B)\).

Example 2.15 (Example 2.2.2: Machine Operation) Suppose that two machines 1 and 2 in a factory are operated independently of each other. Let \(A\) be the event that machine 1 will become inoperative during a given 8-hour period, let \(B\) be the event that machine 2 will become inoperative during the same period, and suppose that \(\Pr(A) = 1/3\) and \(\Pr(B) = 1/4\). We shall determine the probability that at least one of the machines will become inoperative during the given period.

The probability \(\Pr(A \cap B)\) that both machines will become inoperative during the period is

\[ \Pr(A \cap B) = \Pr(A)\Pr(B) = \left(\frac{1}{3}\right)\left(\frac{1}{4}\right) = \frac{1}{12}. \]

Therefore, the probability \(\Pr(A \cup B)\) that at least one of the machines will become inoperative during the period is

\[ \begin{align*} \Pr(A \cup B) &= \Pr(A) + \Pr(B) − \Pr(A \cap B) \\ &= \frac{1}{3} + \frac{1}{4} - \frac{1}{12} = \frac{1}{2}. \end{align*} \]
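The arithmetic of Example 2.15 can be reproduced with exact fractions. As a cross-check, the sketch also computes \(1 - \Pr(A^c)\Pr(B^c)\), which relies on the complements of independent events being independent, a fact established later in this section.

```python
from fractions import Fraction

pr_A = Fraction(1, 3)  # machine 1 becomes inoperative
pr_B = Fraction(1, 4)  # machine 2 becomes inoperative

pr_both = pr_A * pr_B                     # independence: Pr(A and B) = Pr(A)Pr(B)
pr_at_least_one = pr_A + pr_B - pr_both   # addition rule for two events
# Cross-check through complements: both machines keep running with prob (1 - Pr(A))(1 - Pr(B)).
pr_via_complement = 1 - (1 - pr_A) * (1 - pr_B)
print(pr_both, pr_at_least_one, pr_via_complement)  # 1/12 1/2 1/2
```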

The next example shows that two events \(A\) and \(B\), which are physically related, can, nevertheless, satisfy the definition of independence.

Example 2.16 (Example 2.2.3: Rolling a Die) Suppose that a balanced die is rolled. Let \(A\) be the event that an even number is obtained, and let \(B\) be the event that one of the numbers 1, 2, 3, or 4 is obtained. We shall show that the events \(A\) and \(B\) are independent.

In this example, \(\Pr(A) = 1/2\) and \(\Pr(B) = 2/3\). Furthermore, since \(A \cap B\) is the event that either the number 2 or the number 4 is obtained, \(\Pr(A \cap B) = 1/3\). Hence, \(\Pr(A \cap B) = \Pr(A)\Pr(B)\). It follows that the events \(A\) and \(B\) are independent events, even though the occurrence of each event depends on the same roll of a die.
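Because the die is balanced, every probability in Example 2.16 is a count of faces divided by 6, so the independence claim can be verified by counting. The helper `prob` below is an illustrative name.

```python
from fractions import Fraction

sample_space = range(1, 7)  # balanced die: each face has probability 1/6

def prob(event):
    """Probability of a set of faces of a balanced die."""
    return Fraction(len(event), 6)

A = {x for x in sample_space if x % 2 == 0}  # an even number is obtained
B = {1, 2, 3, 4}                             # one of 1, 2, 3, 4 is obtained

print(prob(A), prob(B), prob(A & B))     # 1/2 2/3 1/3
print(prob(A & B) == prob(A) * prob(B))  # True
```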

The independence of the events \(A\) and \(B\) in Example 2.16 can also be interpreted as follows: Suppose that a person must bet on whether the number obtained on the die will be even or odd, that is, on whether or not the event \(A\) will occur. Since three of the possible outcomes of the roll are even and the other three are odd, the person will typically have no preference between betting on an even number and betting on an odd number.

Suppose also that after the die has been rolled, but before the person has learned the outcome and before she has decided whether to bet on an even outcome or on an odd outcome, she is informed that the actual outcome was one of the numbers 1, 2, 3, or 4, i.e., that the event \(B\) has occurred. The person now knows that the outcome was 1, 2, 3, or 4. However, since two of these numbers are even and two are odd, the person will typically still have no preference between betting on an even number and betting on an odd number. In other words, the information that the event \(B\) has occurred is of no help to the person who is trying to decide whether or not the event \(A\) has occurred.

Independence of Complements: In the foregoing discussion of independent events, we stated that if \(A\) and \(B\) are independent, then the occurrence or nonoccurrence of \(A\) should not be related to the occurrence or nonoccurrence of \(B\). Hence, if \(A\) and \(B\) satisfy the mathematical definition of independent events, then it should also be true that \(A\) and \(B^c\) are independent events, that \(A^c\) and \(B\) are independent events, and that \(A^c\) and \(B^c\) are independent events. One of these results is established in the next theorem.

Theorem 2.5 (Theorem 2.2.1) If two events \(A\) and \(B\) are independent, then the events \(A\) and \(B^c\) are also independent.

Proof. Theorem 1.17 says that

\[ \Pr(A \cap B^c) = \Pr(A) − \Pr(A \cap B). \]

Furthermore, since \(A\) and \(B\) are independent events, \(\Pr(A \cap B) = \Pr(A)\Pr(B)\). It now follows that

\[ \begin{align*} \Pr(A \cap B^c) &= \Pr(A) − \Pr(A)\Pr(B) = \Pr(A)[1 − \Pr(B)] \\ &= \Pr(A)\Pr(B^c). \end{align*} \]

Therefore, the events \(A\) and \(B^c\) are independent.

The proof of the analogous result for the events \(A^c\) and \(B\) is similar, and the proof for the events \(A^c\) and \(B^c\) is required in Exercise 2.19 at the end of this section.
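As a numerical illustration (not a proof), the independent events of Example 2.16 can be used to check all four combinations of events and complements. The helper names `prob` and `independent` are illustrative.

```python
from fractions import Fraction

S = set(range(1, 7))  # outcomes of one roll of a balanced die

def prob(event):
    return Fraction(len(event), len(S))

def independent(E, F):
    """True when Pr(E and F) = Pr(E)Pr(F)."""
    return prob(E & F) == prob(E) * prob(F)

A = {2, 4, 6}     # even number (Example 2.16)
B = {1, 2, 3, 4}  # one of 1, 2, 3, 4
Ac, Bc = S - A, S - B

print([independent(E, F) for E, F in [(A, B), (A, Bc), (Ac, B), (Ac, Bc)]])
# [True, True, True, True]
```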

2.2.3 Independence of Several Events

The definition of independent events can be extended to any number of events, \(A_1, \ldots, A_k\). Intuitively, if learning that some of these events do or do not occur does not change our probabilities for any events that depend only on the remaining events, we would say that all \(k\) events are independent. The mathematical definition is the following analog to Definition 2.4.

Definition 2.5 (Definition 2.2.2: (Mutually) Independent Events) The \(k\) events \(A_1, \ldots, A_k\) are independent (or mutually independent) if, for every subset \(A_{i_1}, \ldots, A_{i_j}\) of \(j\) of these events (\(j = 2, 3, \ldots, k\)),

\[ \Pr(A_{i_1} \cap \cdots \cap A_{i_j}) = \Pr(A_{i_1}) \cdots \Pr(A_{i_j}). \]

As an example, in order for three events \(A\), \(B\), and \(C\) to be independent, the following four relations must be satisfied:

\[ \begin{align*} \Pr(A \cap B) = \Pr(A)\Pr(B), \\ \Pr(A \cap C) = \Pr(A)\Pr(C), \\ \Pr(B \cap C) = \Pr(B)\Pr(C), \end{align*} \tag{2.7}\]

and

\[ \Pr(A \cap B \cap C) = \Pr(A)\Pr(B)\Pr(C). \tag{2.8}\]

It is possible that Equation 2.8 will be satisfied, but one or more of the three relations in Equation 2.7 will not be satisfied. On the other hand, as is shown in the next example, it is also possible that each of the three relations in Equation 2.7 will be satisfied but Equation 2.8 will not be satisfied.

Example 2.17 (Example 2.2.4: Pairwise Independence) Suppose that a fair coin is tossed twice so that the sample space \(S = \{HH, HT, TH, TT\}\) is simple. Define the following three events:

\[ \begin{align*} A &= \{H\text{ on first toss}\} = \{HH, HT\}, \\ B &= \{H\text{ on second toss}\} = \{HH, TH\},\text{ and} \\ C &= \{\text{Both tosses the same}\} = \{HH, TT\}. \end{align*} \]

Then \(A \cap B = A \cap C = B \cap C = A \cap B \cap C = \{HH\}\). Hence,

\[ \Pr(A) = \Pr(B) = \Pr(C) = 1/2 \]

and

\[ \Pr(A \cap B) = \Pr(A \cap C) = \Pr(B \cap C) = \Pr(A \cap B \cap C) = 1/4. \]

It follows that each of the three relations of Equation 2.7 is satisfied but Equation 2.8 is not satisfied, since \(\Pr(A \cap B \cap C) = 1/4\) whereas \(\Pr(A)\Pr(B)\Pr(C) = 1/8\). These results can be summarized by saying that the events \(A\), \(B\), and \(C\) are pairwise independent, but the three events are not mutually independent.
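Example 2.17 can be checked by enumeration: the sketch tests every pair of events against Equation 2.7 and the triple against Equation 2.8. Representing events as sets of outcome strings is an illustrative choice.

```python
from fractions import Fraction
from itertools import combinations, product

S = ["".join(t) for t in product("HT", repeat=2)]  # simple sample space

def prob(event):
    return Fraction(len(event), len(S))

events = {
    "A": {w for w in S if w[0] == "H"},   # H on first toss
    "B": {w for w in S if w[1] == "H"},   # H on second toss
    "C": {w for w in S if w[0] == w[1]},  # both tosses the same
}

# Relations (2.7): every pair of events multiplies.
pairwise = all(
    prob(events[x] & events[y]) == prob(events[x]) * prob(events[y])
    for x, y in combinations(events, 2)
)
# Relation (2.8): the triple intersection.
triple = events["A"] & events["B"] & events["C"]
mutual = prob(triple) == prob(events["A"]) * prob(events["B"]) * prob(events["C"])
print(pairwise, mutual, prob(triple))  # True False 1/4
```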

We shall now present some examples that will illustrate the power and scope of the concept of independence in the solution of probability problems.

Example 2.18 (Example 2.2.5: Inspecting Items) Suppose that a machine produces a defective item with probability \(p\) (\(0 < p < 1\)) and produces a nondefective item with probability \(1 − p\). Suppose further that six items produced by the machine are selected at random and inspected, and that the results (defective or nondefective) for these six items are independent. We shall determine the probability that exactly two of the six items are defective.

It can be assumed that the sample space \(S\) contains all possible arrangements of six items, each one of which might be either defective or nondefective. For \(j = 1, \ldots, 6\), we shall let \(D_j\) denote the event that the \(j\)th item in the sample is defective so that \(D_j^c\) is the event that this item is nondefective. Since the outcomes for the six different items are independent, the probability of obtaining any particular sequence of defective and nondefective items will simply be the product of the individual probabilities for the items. For example,

\[ \begin{align*} \Pr(D_1^c \cap D_2 \cap D_3^c \cap D_4^c \cap D_5 \cap D_6^c) &= \Pr(D_1^c)\Pr(D_2)\Pr(D_3^c)\Pr(D_4^c)\Pr(D_5)\Pr(D_6^c) \\ &= (1-p)p(1-p)(1-p)p(1-p) = p^2(1-p)^4. \end{align*} \]

It can be seen that the probability of any other particular sequence in \(S\) containing two defective items and four nondefective items will also be \(p^2(1− p)^4\). Hence, the probability that there will be exactly two defectives in the sample of six items can be found by multiplying the probability \(p^2(1− p)^4\) of any particular sequence containing two defectives by the possible number of such sequences. Since there are \(\binom{6}{2}\) distinct arrangements of two defective items and four nondefective items, the probability of obtaining exactly two defectives is \(\binom{6}{2}p^2(1-p)^4\).
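The counting argument can be checked by brute force: enumerate all \(2^6\) defect patterns, multiply the independent per-item probabilities, and add up the patterns with exactly two defectives. The value \(p = 1/10\) is an illustrative assumption, and exact fractions make the comparison with \(\binom{6}{2}p^2(1-p)^4\) exact.

```python
from fractions import Fraction
from itertools import product
from math import comb

def prob_exactly(k, n, p):
    """Sum p^(#defective) (1-p)^(#nondefective) over every sequence with exactly k defectives."""
    total = Fraction(0)
    for seq in product((True, False), repeat=n):  # True marks a defective item
        d = sum(seq)
        if d == k:
            total += p**d * (1 - p)**(n - d)
    return total

p = Fraction(1, 10)  # illustrative defect probability (assumption)
brute = prob_exactly(2, 6, p)
formula = comb(6, 2) * p**2 * (1 - p)**4
print(brute == formula, formula)  # True 19683/200000
```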

Example 2.19 (Example 2.2.6: Obtaining a Defective Item) For the conditions of Example 2.18, we shall now determine the probability that at least one of the six items in the sample will be defective.

Since the outcomes for the different items are independent, the probability that all six items will be nondefective is \((1−p)^6\). Therefore, the probability that at least one item will be defective is \(1 − (1 − p)^6\).
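The complement argument can be confirmed the same way: summing the probabilities of every sequence that contains at least one defective gives the same value as \(1 - (1-p)^6\). Again \(p = 1/10\) is only an illustrative assumption.

```python
from fractions import Fraction
from itertools import product

def prob_at_least_one(n, p):
    """Brute force: add up the probabilities of all sequences that contain a defective."""
    total = Fraction(0)
    for seq in product((True, False), repeat=n):  # True marks a defective item
        d = sum(seq)
        if d >= 1:
            total += p**d * (1 - p)**(n - d)
    return total

p = Fraction(1, 10)  # illustrative value (assumption)
print(prob_at_least_one(6, p) == 1 - (1 - p)**6)  # True
```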

Example 2.20 (Example 2.2.7: Tossing a Coin Until a Head Appears) Suppose that a fair coin is tossed until a head appears for the first time, and assume that the outcomes of the tosses are independent. We shall determine the probability \(p_n\) that exactly \(n\) tosses will be required.

The desired probability is equal to the probability of obtaining \(n − 1\) tails in succession and then obtaining a head on the next toss. Since the outcomes of the tosses are independent, the probability of this particular sequence of \(n\) outcomes is \(p_n = (1/2)^n\).

The probability that a head will be obtained sooner or later (or, equivalently, that tails will not be obtained forever) is

\[ \sum_{n=1}^{\infty}p_n = \frac{1}{2} + \frac{1}{4} + \frac{1}{8} + \cdots = 1. \]

Since the sum of the probabilities \(p_n\) is 1, it follows that the probability of obtaining an infinite sequence of tails without ever obtaining a head must be 0.
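The partial sums make the limit concrete: \(p_1 + \cdots + p_N = 1 - (1/2)^N\), and the leftover \((1/2)^N\) is exactly the probability of \(N\) tails in a row, which shrinks to 0. The short sketch below prints a few partial sums with exact fractions.

```python
from fractions import Fraction

def p_n(n):
    """Probability that the first head appears on toss n: n - 1 tails, then a head."""
    return Fraction(1, 2) ** n

# Partial sums p_1 + ... + p_N equal 1 - (1/2)^N, so they approach 1.
for N in (1, 2, 5, 10, 20):
    partial = sum(p_n(n) for n in range(1, N + 1))
    print(N, partial, 1 - partial)
```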

Example 2.21 (Example 2.2.8: Inspecting Items One at a Time) Consider again a machine that produces a defective item with probability \(p\) and produces a nondefective item with probability \(1 − p\). Suppose that items produced by the machine are selected at random and inspected one at a time until exactly five defective items have been obtained. We shall determine the probability \(p_n\) that exactly \(n\) items (\(n \geq 5\)) must be selected to obtain the five defectives.

The fifth defective item will be the \(n\)th item that is inspected if and only if there are exactly four defectives among the first \(n − 1\) items and then the \(n\)th item is defective. By reasoning similar to that given in Example 2.18, it can be shown that the probability of obtaining exactly four defectives and \(n − 5\) nondefectives among the first \(n − 1\) items is \(\binom{n-1}{4}p^4(1-p)^{n-5}\). The probability that the \(n\)th item will be defective is \(p\). Since the first event refers to outcomes for only the first \(n − 1\) items and the second event refers to the outcome for only the \(n\)th item, these two events are independent. Therefore, the probability that both events will occur is equal to the product of their probabilities. It follows that

\[ p_n = \binom{n-1}{4}p^5(1-p)^{n-5}. \]
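The formula for \(p_n\) can be checked against a direct enumeration: keep exactly those defect patterns of length \(n\) whose last item is defective and whose first \(n-1\) items contain four defectives. The sketch keeps the number of required defectives as a parameter `r` (with `r = 5` as in the example) and uses the illustrative value \(p = 1/3\); both choices are assumptions made for the check.

```python
from fractions import Fraction
from itertools import product
from math import comb

def p_n_formula(n, p, r=5):
    """Pr(the r-th defective is the n-th item) = C(n-1, r-1) p^r (1-p)^(n-r)."""
    return comb(n - 1, r - 1) * p**r * (1 - p)**(n - r)

def p_n_brute(n, p, r=5):
    """Enumerate all defect patterns of length n; keep those whose r-th defective is item n."""
    total = Fraction(0)
    for seq in product((True, False), repeat=n):  # True marks a defective item
        if seq[-1] and sum(seq[:-1]) == r - 1:
            d = sum(seq)
            total += p**d * (1 - p)**(n - d)
    return total

p = Fraction(1, 3)  # illustrative value (assumption)
print(all(p_n_formula(n, p) == p_n_brute(n, p) for n in range(5, 12)))  # True
```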

Example 2.22 (Example 2.2.9: People v. Collins.) Finkelstein and Levin (1990) describe a criminal case whose verdict was overturned by the Supreme Court of California in part due to a probability calculation involving both conditional probability and independence. The case, People v. Collins, 68 Cal. 2d 319, 438 P.2d 33 (1968), involved a purse snatching in which witnesses claimed to see a young woman with blond hair in a ponytail fleeing from the scene in a yellow car driven by a black man with a beard. A couple meeting the description was arrested a few days after the crime, but no physical evidence was found. A mathematician calculated the probability that a randomly selected couple would possess the described characteristics as about \(8.3 \times 10^{−8}\), or 1 in 12 million. Faced with such overwhelming odds and no physical evidence, the jury decided that the defendants must have been the only such couple and convicted them. The Supreme Court thought that a more useful probability should have been calculated. Based on the testimony of the witnesses, there was a couple that met the above description. Given that there was already one couple who met the description, what is the conditional probability that there was also a second couple such as the defendants?

Let \(p\) be the probability that a randomly selected couple from a population of \(n\) couples has certain characteristics. Let \(A\) be the event that at least one couple in the population has the characteristics, and let \(B\) be the event that at least two couples have the characteristics. What we seek is \(\Pr(B \mid A)\). Since \(B \subset A\), it follows that

\[ \Pr(B \mid A) = \frac{\Pr(B \cap A)}{\Pr(A)} = \frac{\Pr(B)}{\Pr(A)}. \]

We shall calculate \(\Pr(B)\) and \(\Pr(A)\) by breaking each event into more manageable pieces. Suppose that we number the \(n\) couples in the population from 1 to \(n\). Let \(A_i\) be the event that couple number \(i\) has the characteristics in question for \(i = 1, \ldots, n\), and let \(C\) be the event that exactly one couple has the characteristics. Then

\[ \begin{align*} A &= (A_1^c \cap A_2^c \cap \cdots \cap A_n^c)^c, \\ C &= (A_1 \cap A_2^c \cap \cdots \cap A_n^c) \cup (A_1^c \cap A_2 \cap A_3^c \cap \cdots \cap A_n^c) \cup \cdots \cup (A_1^c \cap \cdots \cap A_{n-1}^c \cap A_n), \\ B &= A \cap C^c. \end{align*} \]

Assuming that the \(n\) couples are mutually independent, \(\Pr(A^c) = (1 − p)^n\), and \(\Pr(A) = 1− (1 − p)^n\). The \(n\) events whose union is \(C\) are disjoint and each one has probability \(p(1− p)^{n−1}\), so \(\Pr(C) = np(1 − p)^{n−1}\). Since \(A = B \cup C\) with \(B\) and \(C\) disjoint, we have

\[ \Pr(B) = \Pr(A) − \Pr(C) = 1− (1 − p)^n − np(1− p)^{n−1}. \]

So,

\[ \Pr(B \mid A) = \frac{1 - (1-p)^n - np(1-p)^{n-1}}{1 - (1-p)^n}. \tag{2.9}\]

The Supreme Court of California reasoned that, since the crime occurred in a heavily populated area, \(n\) would be in the millions. For example, with \(p = 8.3 \times 10^{-8}\) and \(n = 8,000,000\), the value of Equation 2.9 is approximately 0.2955. Such a probability suggests that there is a reasonable chance that there was another couple meeting the same description as the witnesses provided. Of course, the court did not know how large \(n\) was, but the fact that Equation 2.9 could easily be so large was grounds enough to rule that reasonable doubt remained as to the guilt of the defendants.
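Equation 2.9 is easy to evaluate numerically, but \((1-p)^n\) with a tiny \(p\) and a huge \(n\) is best computed as \(\exp(n \log(1-p))\) using `math.log1p`, which keeps precision when \(p\) is near 0. The sketch below evaluates Equation 2.9 for the values \(p = 8.3 \times 10^{-8}\) and \(n = 8,000,000\) quoted in the text; the function name is illustrative.

```python
import math

def prob_second_couple(p, n):
    """Equation 2.9: Pr(at least two couples | at least one couple).

    log1p/exp keep (1 - p)^n accurate when p is tiny and n is huge.
    """
    none = math.exp(n * math.log1p(-p))                       # Pr(A^c) = (1 - p)^n
    exactly_one = n * p * math.exp((n - 1) * math.log1p(-p))  # Pr(C) = n p (1 - p)^(n-1)
    return (1 - none - exactly_one) / (1 - none)

print(round(prob_second_couple(8.3e-8, 8_000_000), 4))  # 0.2955
```

Trying a few other values of `n` shows how quickly the conditional probability grows with the population size, which is the point the court made.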

Independence and Conditional Probability: Two events \(A\) and \(B\) with positive probability are independent if and only if \(\Pr(A \mid B) = \Pr(A)\). Similar results hold for larger collections of independent events. The following theorem, for example, is straightforward to prove based on the definition of independence.

Theorem 2.6 (Theorem 2.2.2) Let \(A_1, \ldots, A_k\) be events such that \(\Pr(A_1 \cap \cdots \cap A_k) > 0\). Then \(A_1, \ldots, A_k\) are independent if and only if, for every two disjoint subsets \(\{i_1, \ldots, i_m\}\) and \(\{j_1, \ldots, j_\ell\}\) of \(\{1, \ldots, k\}\), we have

\[ \Pr(A_{i_1} \cap \cdots \cap A_{i_m} \mid A_{j_1} \cap \cdots \cap A_{j_\ell}) = \Pr(A_{i_1} \cap \cdots \cap A_{i_m}). \]

Theorem 2.6 says that \(k\) events are independent if and only if learning that some of the events occur does not change the probability that any combination of the other events occurs.

The Meaning of Independence: We have given a mathematical definition of independent events in Definition 2.4. We have also given some interpretations for what it means for events to be independent. The most instructive interpretation is the one based on conditional probability. If learning that \(B\) occurs does not change the probability of \(A\), then \(A\) and \(B\) are independent. In simple examples such as tossing what we believe to be a fair coin, we would generally not expect to change our minds about what is likely to happen on later flips after we observe earlier flips; hence, we declare the events that concern different flips to be independent. However, consider a situation similar to Example 2.18 in which items produced by a machine are inspected to see whether or not they are defective. In Example 2.18, we declared that the different items were independent and that each item had probability \(p\) of being defective. This might make sense if we were confident that we knew how well the machine was performing. But if we were unsure of how the machine was performing, we could easily imagine changing our mind about the probability that the 10th item is defective depending on how many of the first nine items are defective. To be specific, suppose that we begin by thinking that the probability is 0.08 that an item will be defective. If we observe one or zero defective items in the first nine, we might not make much revision to the probability that the 10th item is defective. On the other hand, if we observe eight or nine defectives in the first nine items, we might be uncomfortable keeping the probability at 0.08 that the 10th item will be defective.

In summary, when deciding whether to model events as independent, try to answer the following question: “If I were to learn that some of these events occurred, would I change the probabilities of any of the others?” If we feel that we already know everything that we could learn from these events about how likely the others should be, we can safely model them as independent. If, on the other hand, we feel that learning some of these events could change our minds about how likely some of the others are, then we should be more careful about determining the conditional probabilities and not model the events as independent.

Mutually Exclusive Events and Mutually Independent Events: Two similar-sounding definitions have appeared earlier in this text. Definition 1.11 defines mutually exclusive events, and Definition 2.5 defines mutually independent events. It is almost never the case that the same set of events satisfies both definitions. The reason is that if events are disjoint (mutually exclusive), then learning that one occurs means that the others definitely did not occur. Hence, learning that one occurs would change the probabilities for all the others to 0, unless the others already had probability 0. Indeed, this suggests the only condition in which the two definitions would both apply to the same collection of events. The proof of the following result is left to Exercise 2.41 in this section.

Theorem 2.7 (Theorem 2.2.3) Let \(n > 1\) and let \(A_1, \ldots, A_n\) be events that are mutually exclusive. The events are also mutually independent if and only if all the events except possibly one of them have probability 0.

2.2.4 Conditionally Independent Events

Conditional probability and independence combine into one of the most versatile models of data collection. The idea is that, in many circumstances, we are unwilling to say that certain events are independent because we believe that learning some of them will provide information about how likely the others are to occur. But if we knew the frequency with which such events would occur, we might then be willing to assume that they are independent. This model can be illustrated using one of the examples from earlier in this section.

Example 2.23 (Example 2.2.10: Inspecting Items) Consider again the situation in Example 2.18. This time, however, suppose that we believe that we would change our minds about the probabilities of later items being defective were we to learn that certain numbers of early items were defective. Suppose that we think of the number \(p\) from Example 2.18 as the proportion of defective items that we would expect to see if we were to inspect a very large sample of items. If we knew this proportion \(p\), and if we were to sample only a few, say, six or 10 items now, we might feel confident maintaining that the probability of a later item being defective remains \(p\) even after we inspect some of the earlier items. On the other hand, if we are not sure what would be the proportion of defective items in a large sample, we might not feel confident keeping the probability the same as we continue to inspect.

To be precise, suppose that we treat the proportion \(p\) of defective items as unknown and that we are dealing with an augmented experiment as described in Definition 2.3. For simplicity, suppose that \(p\) can take one of two values, either 0.01 or 0.4, the first corresponding to normal operation and the second corresponding to a need for maintenance. Let \(B_1\) be the event that \(p = 0.01\), and let \(B_2\) be the event that \(p = 0.4\). If we knew that \(B_1\) had occurred, then we would proceed under the assumption that the events \(D_1, D_2, \ldots\) were independent with \(\Pr(D_i \mid B_1) = 0.01\) for all \(i\). For example, we could do the same calculations as in Examples 2.18 and 2.21 with \(p = 0.01\). Let \(A\) be the event that we observe exactly two defectives in a random sample of six items. Then \(\Pr(A \mid B_1) = \binom{6}{2}(0.01)^2(0.99)^4 = 1.44 \times 10^{-3}\). Similarly, if we knew that \(B_2\) had occurred, then we would assume that \(D_1, D_2, \ldots\) were independent with \(\Pr(D_i \mid B_2) = 0.4\). In this case, \(\Pr(A \mid B_2) = \binom{6}{2}(0.4)^2(0.6)^4 = 0.311\).
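The two conditional probabilities in Example 2.23 can be reproduced directly. To illustrate why conditional independence given \(B_1\) and given \(B_2\) does not make the \(D_i\) independent, the sketch also assumes a hypothetical prior \(\Pr(B_1) = 0.9\), \(\Pr(B_2) = 0.1\) (these numbers are not from the text) and compares \(\Pr(D_1 \cap D_2)\) with \(\Pr(D_1)\Pr(D_2)\), using the law of total probability for each.

```python
from fractions import Fraction
from math import comb

def pr_two_of_six(p):
    """Pr(exactly two defectives among six items) when items are independent with defect prob p."""
    return comb(6, 2) * p**2 * (1 - p)**4

p_B1, p_B2 = Fraction(1, 100), Fraction(2, 5)  # p = 0.01 or p = 0.4
print(float(pr_two_of_six(p_B1)), float(pr_two_of_six(p_B2)))

# Hypothetical prior (assumption, not from the text): Pr(B1) = 0.9, Pr(B2) = 0.1.
prior_B1, prior_B2 = Fraction(9, 10), Fraction(1, 10)
pr_D1 = prior_B1 * p_B1 + prior_B2 * p_B2               # law of total probability
pr_D1_and_D2 = prior_B1 * p_B1**2 + prior_B2 * p_B2**2  # conditionally independent given each B_i
print(pr_D1_and_D2 == pr_D1**2)  # False: D1 and D2 are not independent without conditioning
print(float(pr_D1), float(pr_D1_and_D2 / pr_D1))  # Pr(D2) versus Pr(D2 | D1)
```

Under this hypothetical prior, observing one defective raises the probability that the next item is defective, because it makes \(B_2\) (the machine needing maintenance) more plausible.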

In Example 2.23, there is no reason that \(p\) must be required to assume at most two different values. We could easily allow \(p\) to take a third value or a fourth value, etc. Indeed, in Chapter 3 we shall learn how to handle the case in which every number between 0 and 1 is a possible value of \(p\). The point of the simple example is to illustrate the concept of assuming that events are independent conditional on another event, such as \(B_1\) or \(B_2\) in the example.

The formal concept illustrated in Example 2.23 is the following:

Definition 2.6 (Definition 2.2.3: Conditional Independence) We say that events \(A_1, \ldots, A_k\) are conditionally independent given \(B\) if, for every subcollection \(A_{i_1}, \ldots, A_{i_j}\) of \(j\) of these events (\(j = 2, 3, \ldots, k\)),

\[ \Pr\left( A_{i_1} \cap \cdots \cap A_{i_j} \mid B \right) = \Pr(A_{i_1} \mid B)\cdots \Pr(A_{i_j} \mid B). \]

Definition 2.6 is identical to Definition 2.5 for independent events with the modification that all probabilities in the definition are now conditional on \(B\). As a note, even if we assume that events \(A_1, \ldots, A_k\) are conditionally independent given \(B\), it is not necessary that they be conditionally independent given \(B^c\). In Example 2.23, the events \(D_1, D_2, \ldots\) were conditionally independent given both \(B_1\) and \(B_2 = B_1^c\), which is the typical situation. Exercise 2.57 in Section 2.3 is an example in which events are conditionally independent given one event \(B\) but are not conditionally independent given the complement \(B^c\).

Recall that two events \(A_1\) and \(A_2\) (with \(\Pr(A_1) > 0\)) are independent if and only if \(\Pr(A_2 \mid A_1) = \Pr(A_2)\). A similar result holds for conditionally independent events.

Theorem 2.8 (Theorem 2.2.4) Suppose that \(A_1\), \(A_2\), and \(B\) are events such that \(\Pr(A_1 \cap B) > 0\). Then \(A_1\) and \(A_2\) are conditionally independent given \(B\) if and only if \(\Pr(A_2 \mid A_1 \cap B) = \Pr(A_2 \mid B)\).

This is another example of the claim we made earlier that every result we can prove has an analog conditional on an event \(B\). The reader can prove this theorem in Exercise 2.39.

2.2.5 The Collector’s Problem

Suppose that \(n\) balls are thrown in a random manner into \(r\) boxes (\(r \leq n\)). We shall assume that the \(n\) throws are independent and that each of the \(r\) boxes is equally likely to receive any given ball. The problem is to determine the probability \(p\) that every box will receive at least one ball. This problem can be reformulated in terms of a collector’s problem as follows: Suppose that each package of bubble gum contains the picture of a baseball player, that the pictures of \(r\) different players are used, that the picture of each player is equally likely to be placed in any given package of gum, and that pictures are placed in different packages independently of each other. The problem now is to determine the probability \(p\) that a person who buys \(n\) packages of gum (\(n \geq r\)) will obtain a complete set of \(r\) different pictures.

For \(i = 1, \ldots, r\), let \(A_i\) denote the event that the picture of player \(i\) is missing from all \(n\) packages. Then \(\bigcup_{i=1}^r A_i\) is the event that the picture of at least one player is missing. We shall find \(\Pr(\bigcup_{i=1}^r A_i)\) by applying Equation 1.10.

Since the picture of each of the \(r\) players is equally likely to be placed in any particular package, the probability that the picture of player \(i\) will not be obtained in any particular package is \((r - 1)/r\). Since the packages are filled independently, the probability that the picture of player \(i\) will not be obtained in any of the \(n\) packages is \([(r - 1)/r]^n\). Hence,

\[ \Pr(A_i) = \left(\frac{r-1}{r}\right)^n \; \text{ for }i = 1, \ldots, r. \]

Now consider any two players \(i\) and \(j\). The probability that neither the picture of player \(i\) nor the picture of player \(j\) will be obtained in any particular package is \((r - 2)/r\). Therefore, the probability that neither picture will be obtained in any of the \(n\) packages is \([(r - 2)/r]^n\). Thus,

\[ \Pr(A_i \cap A_j) = \left(\frac{r-2}{r}\right)^n. \]

If we next consider any three players \(i\), \(j\), and \(k\), we find that

\[ \Pr(A_i \cap A_j \cap A_k) = \left(\frac{r - 3}{r}\right)^n. \]

By continuing in this way, we finally arrive at the probability \(\Pr(A_1 \cap A_2 \cap \cdots \cap A_r)\) that the pictures of all \(r\) players are missing from the \(n\) packages. Of course, this probability is 0. Therefore, by Equation 1.10 of Section 1.10,

\[ \begin{align*} \Pr\left( \bigcup_{i=1}^r A_i \right) &= r \left(\frac{r-1}{r}\right)^n - \binom{r}{2}\left(\frac{r-2}{r}\right)^n + \cdots + (-1)^r\binom{r}{r-1}\left(\frac{1}{r}\right)^n \\ &= \sum_{j=1}^{r-1}(-1)^{j+1}\binom{r}{j}\left(1 - \frac{j}{r}\right)^n. \end{align*} \]

Since the probability \(p\) of obtaining a complete set of \(r\) different pictures is equal to \(1 - \Pr(\bigcup_{i=1}^r A_i)\), it follows from the foregoing derivation that \(p\) can be written in the form

\[ p = \sum_{j=0}^{r-1}(-1)^j \binom{r}{j}\left(1 - \frac{j}{r}\right)^n. \]
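For small \(n\) and \(r\), the final formula for \(p\) can be checked against brute force by listing all \(r^n\) equally likely ways to place the \(n\) balls. The sketch below (function names are illustrative) evaluates the inclusion-exclusion sum exactly with Python's `fractions.Fraction`, counts the complete assignments directly, and also confirms the pattern \(\Pr(A_1 \cap \cdots \cap A_k) = [(r-k)/r]^n\) for the probability that \(k\) particular boxes are all empty.

```python
from fractions import Fraction
from itertools import product
from math import comb


def p_complete_formula(n, r):
    """p = sum_{j=0}^{r-1} (-1)^j C(r, j) (1 - j/r)^n, computed exactly."""
    return sum((-1) ** j * comb(r, j) * Fraction(r - j, r) ** n for j in range(r))


def p_complete_enumerate(n, r):
    """Brute force: fraction of the r**n equally likely assignments of
    n balls to r boxes in which every box receives at least one ball."""
    good = sum(1 for boxes in product(range(r), repeat=n) if len(set(boxes)) == r)
    return Fraction(good, r ** n)


def pr_k_missing(n, r, k):
    """Brute force Pr(A_1 ∩ ... ∩ A_k): boxes 0, ..., k-1 all stay empty."""
    good = sum(1 for boxes in product(range(r), repeat=n) if all(b >= k for b in boxes))
    return Fraction(good, r ** n)


# Compare the formula with direct enumeration for all 1 <= r <= n <= 6.
for n in range(1, 7):
    for r in range(1, n + 1):
        assert p_complete_formula(n, r) == p_complete_enumerate(n, r)
        for k in range(r + 1):
            assert pr_k_missing(n, r, k) == Fraction(r - k, r) ** n
```

For example, `p_complete_formula(6, 3)` returns `Fraction(20, 27)`, matching the 540 complete assignments out of \(3^6 = 729\).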


2.2.6 Summary

A collection of events is independent if and only if learning that some of them occur does not change the probabilities that any combination of the rest of them occurs. Equivalently, a collection of events is independent if and only if the probability of the intersection of every subcollection is the product of the individual probabilities. The concept of independence has a version conditional on another event. A collection of events is independent conditional on \(B\) if and only if the conditional probability of the intersection of every subcollection given \(B\) is the product of the individual conditional probabilities given \(B\). Equivalently, a collection of events is conditionally independent given \(B\) if and only if learning that some of them (and \(B\)) occur does not change the conditional probabilities given \(B\) that any combination of the rest of them occurs. The full power of conditional independence will become more apparent after we introduce Bayes’ theorem in the next section.

2.2.7 Exercises

Exercise 2.18 (Exercise 2.2.1) If \(A\) and \(B\) are independent events and \(\Pr(B) < 1\), what is the value of \(\Pr(A^c \mid B^c)\)?

Exercise 2.19 (Exercise 2.2.2) Assuming that \(A\) and \(B\) are independent events, prove that the events \(A^c\) and \(B^c\) are also independent.

Exercise 2.20 (Exercise 2.2.3) Suppose that \(A\) is an event such that \(\Pr(A) = 0\) and that \(B\) is any other event. Prove that \(A\) and \(B\) are independent events.

Exercise 2.21 (Exercise 2.2.4) Suppose that a person rolls two balanced dice three times in succession. Determine the probability that on each of the three rolls, the sum of the two numbers that appear will be 7.

Exercise 2.22 (Exercise 2.2.5) Suppose that the probability that the control system used in a spaceship will malfunction on a given flight is 0.001. Suppose further that a duplicate, but completely independent, control system is also installed in the spaceship to take control in case the first system malfunctions. Determine the probability that the spaceship will be under the control of either the original system or the duplicate system on a given flight.

Exercise 2.23 (Exercise 2.2.6) Suppose that 10,000 tickets are sold in one lottery and 5000 tickets are sold in another lottery. If a person owns 100 tickets in each lottery, what is the probability that she will win at least one first prize?

Exercise 2.24 (Exercise 2.2.7) Two students \(A\) and \(B\) are both registered for a certain course. Assume that student \(A\) attends class 80 percent of the time, student \(B\) attends class 60 percent of the time, and the absences of the two students are independent.

- What is the probability that at least one of the two students will be in class on a given day?

- If at least one of the two students is in class on a given day, what is the probability that \(A\) is in class that day?

Exercise 2.25 (Exercise 2.2.8) If three balanced dice are rolled, what is the probability that all three numbers will be the same?

Exercise 2.26 (Exercise 2.2.9) Consider an experiment in which a fair coin is tossed until a head is obtained for the first time. If this experiment is performed three times, what is the probability that exactly the same number of tosses will be required for each of the three performances?

Exercise 2.27 (Exercise 2.2.10) The probability that any child in a certain family will have blue eyes is \(1/4\), and this feature is inherited independently by different children in the family. If there are five children in the family and it is known that at least one of these children has blue eyes, what is the probability that at least three of the children have blue eyes?

Exercise 2.28 (Exercise 2.2.11) Consider the family with five children described in Exercise 2.27.

- If it is known that the youngest child in the family has blue eyes, what is the probability that at least three of the children have blue eyes?

- Explain why the answer in part (a) is different from the answer in Exercise 2.27.

Exercise 2.29 (Exercise 2.2.12) Suppose that \(A\), \(B\), and \(C\) are three independent events such that \(\Pr(A) = 1/4\), \(\Pr(B) = 1/3\), and \(\Pr(C) = 1/2\).

- Determine the probability that none of these three events will occur.

- Determine the probability that exactly one of these three events will occur.

Exercise 2.30 (Exercise 2.2.13) Suppose that the probability that any particle emitted by a radioactive material will penetrate a certain shield is 0.01. If 10 particles are emitted, what is the probability that exactly one of the particles will penetrate the shield?

Exercise 2.31 (Exercise 2.2.14) Consider again the conditions of Exercise 2.30. If 10 particles are emitted, what is the probability that at least one of the particles will penetrate the shield?

Exercise 2.32 (Exercise 2.2.15) Consider again the conditions of Exercise 2.30. How many particles must be emitted in order for the probability to be at least 0.8 that at least one particle will penetrate the shield?

Exercise 2.33 (Exercise 2.2.16) In the World Series of baseball, two teams \(A\) and \(B\) play a sequence of games against each other, and the first team that wins a total of four games becomes the winner of the World Series. If the probability that team \(A\) will win any particular game against team \(B\) is \(1/3\), what is the probability that team \(A\) will win the World Series?

Exercise 2.34 (Exercise 2.2.17) Two boys \(A\) and \(B\) throw a ball at a target. Suppose that the probability that boy \(A\) will hit the target on any throw is \(1/3\) and the probability that boy \(B\) will hit the target on any throw is \(1/4\). Suppose also that boy \(A\) throws first and the two boys take turns throwing. Determine the probability that the target will be hit for the first time on the third throw of boy \(A\).

Exercise 2.35 (Exercise 2.2.18) For the conditions of Exercise 2.34, determine the probability that boy \(A\) will hit the target before boy \(B\) does.

Exercise 2.36 (Exercise 2.2.19) A box contains 20 red balls, 30 white balls, and 50 blue balls. Suppose that 10 balls are selected at random one at a time, with replacement; that is, each selected ball is replaced in the box before the next selection is made. Determine the probability that at least one color will be missing from the 10 selected balls.

Exercise 2.37 (Exercise 2.2.20) Suppose that \(A_1, \ldots, A_k\) form a sequence of \(k\) independent events. Let \(B_1, \ldots, B_k\) be another sequence of \(k\) events such that for each value of \(j\) (\(j = 1, \ldots, k\)), either \(B_j = A_j\) or \(B_j = A_j^c\). Prove that \(B_1, \ldots, B_k\) are also independent events. Hint: Use an induction argument based on the number of events \(B_j\) for which \(B_j = A_j^c\).

Exercise 2.38 (Exercise 2.2.21) Prove Theorem 2.6. Hint: The “only if” direction is direct from Definition 2.5. For the “if” direction, use induction on the value of \(j\) in the definition of independence. Let \(m = j − 1\) and let \(\ell = 1\) with \(j_1 = i_j\).

Exercise 2.39 (Exercise 2.2.22) Prove Theorem 2.8.

Exercise 2.40 (Exercise 2.2.23) A programmer is about to attempt to compile a series of 11 similar programs. Let \(A_i\) be the event that the \(i\)th program compiles successfully for \(i = 1, \ldots, 11\). When the programming task is easy, the programmer expects that 80 percent of programs should compile. When the programming task is difficult, she expects that only 40 percent of the programs will compile. Let \(B\) be the event that the programming task was easy. The programmer believes that the events \(A_1, \ldots, A_{11}\) are conditionally independent given \(B\) and given \(B^c\).

- Compute the probability that exactly 8 out of 11 programs will compile given \(B\).

- Compute the probability that exactly 8 out of 11 programs will compile given \(B^c\).

Exercise 2.41 (Exercise 2.2.24) Prove Theorem 2.7.

2.3 Bayes’ Theorem

Suppose that we are interested in which of several disjoint events \(B_1, \ldots, B_k\) will occur and that we will get to observe some other event \(A\). If \(\Pr(A \mid B_i)\) is available for each \(i\), then Bayes’ theorem is a useful formula for computing the conditional probabilities of the \(B_i\) events given \(A\).

We begin with a typical example.

Example 2.24 (Example 2.3.1: Test for a Disease) Suppose that you are walking down the street and notice that the Department of Public Health is giving a free medical test for a certain disease. The test is 90 percent reliable in the following sense: If a person has the disease, there is a probability of 0.9 that the test will give a positive response; whereas, if a person does not have the disease, there is a probability of only 0.1 that the test will give a positive response.

Data indicate that your chances of having the disease are only 1 in 10,000. However, since the test costs you nothing, and is fast and harmless, you decide to stop and take the test. A few days later you learn that you had a positive response to the test. Now, what is the probability that you have the disease?

The last question in Example 2.24 is a prototype of the question for which Bayes’ theorem was designed. We have at least two disjoint events (“you have the disease” and “you do not have the disease”) about which we are uncertain, and we learn a piece of information (the result of the test) that tells us something about the uncertain events. Then we need to know how to revise the probabilities of the events in the light of the information we learned.

We now present the general structure in which Bayes’ theorem operates before returning to the example.

2.3.1 Statement, Proof, and Examples of Bayes’ Theorem

Example 2.25 (Example 2.3.2: Selecting Bolts) Consider again the situation in Example 2.8, in which a bolt is selected at random from one of two boxes. Suppose that we cannot tell, without making a further effort, from which of the two boxes the bolt is being selected. For example, the boxes may be identical in appearance, or somebody else may actually select the box, but we only get to see the bolt. Prior to selecting the bolt, it was equally likely that each of the two boxes would be selected. However, if we learn that event \(A\) has occurred, that is, a long bolt was selected, we can compute the conditional probabilities of the two boxes given \(A\). To remind the reader, \(B_1\) is the event that the box containing 60 long bolts and 40 short bolts is selected, while \(B_2\) is the event that the box containing 10 long bolts and 20 short bolts is selected. In Example 2.9, we computed \(\Pr(A) = 7/15\), \(\Pr(A \mid B_1) = 3/5\), \(\Pr(A \mid B_2) = 1/3\), and \(\Pr(B_1) = \Pr(B_2) = 1/2\). So, for example,

\[ \Pr(B_1 \mid A) = \frac{\Pr(A \cap B_1)}{\Pr(A)} = \frac{\Pr(B_1)\Pr(A \mid B_1)}{\Pr(A)} = \frac{\frac{1}{2}\cdot \frac{3}{5}}{\frac{7}{15}} = \frac{9}{14}. \]

Since the first box has a higher proportion of long bolts than the second box, it seems reasonable that the probability of \(B_1\) should rise after we learn that a long bolt was selected. It must be that \(\Pr(B_2 \mid A) = 5/14\) since one or the other box had to be selected.
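The computation in Example 2.25 follows a pattern that works for any partition: multiply each prior probability \(\Pr(B_i)\) by \(\Pr(A \mid B_i)\) to get \(\Pr(A \cap B_i)\), add these products to get \(\Pr(A)\), and divide each product by \(\Pr(A)\). The sketch below carries this out with exact fractions; the function name `posterior` is an illustrative choice.

```python
from fractions import Fraction


def posterior(priors, likelihoods):
    """Given Pr(B_i) and Pr(A | B_i) for a partition B_1, ..., B_k,
    return the list of Pr(B_i | A) = Pr(B_i) Pr(A | B_i) / Pr(A)."""
    joint = [p * lik for p, lik in zip(priors, likelihoods)]  # Pr(A ∩ B_i)
    pr_a = sum(joint)  # Pr(A), by the law of total probability
    return [j / pr_a for j in joint]


priors = [Fraction(1, 2), Fraction(1, 2)]       # Pr(B_1), Pr(B_2)
likelihoods = [Fraction(3, 5), Fraction(1, 3)]  # Pr(A | B_1), Pr(A | B_2)
print(posterior(priors, likelihoods))  # [Fraction(9, 14), Fraction(5, 14)]
```

Exact fractions avoid rounding, so the output can be compared directly with the values \(9/14\) and \(5/14\) found above, and the intermediate sum reproduces \(\Pr(A) = 7/15\).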

In Example 2.25, we started with uncertainty about which of two boxes would be chosen, and then we observed a long bolt drawn from the chosen box. Because the two boxes have different chances of having a long bolt drawn, the observation of a long bolt changed the probabilities of each of the two boxes having been chosen. Calculating how these probabilities change is the purpose of Bayes’ theorem.

Theorem 2.9 (Theorem 2.3.1: Bayes’ Theorem) Let the events \(B_1, \ldots, B_k\) form a partition of the space \(S\) such that \(\Pr(B_j) > 0\) for \(j = 1, \ldots, k\), and let \(A\) be an event such that \(\Pr(A) > 0\). Then, for \(i = 1, \ldots, k\),