Week 10: ETL Pipeline Orchestration with Airflow

DSAN 6000: Big Data and Cloud Computing

Fall 2025

Monday, November 3, 2025

Pipelines for Triggered Execution

…HEY! WAKE UP! NEW DATA JUST CAME IN!

You before this week:

- Sprint to your .ipynb and/or Spark cluster
- Load, clean, process new data, manually, step-by-step
- Email update to boss

You after this week:

- Asset-aware pipeline automatically triggered

- Airflow orchestrates loading, cleaning, processing

- EmailOperator sends update

Pipelines vs. Pipeline Orchestration

From Astronomer Academy’s Airflow 101

Key Airflow-Specific Buzzwords

(Underlined words link to Airflow docs “Core Concepts” section)

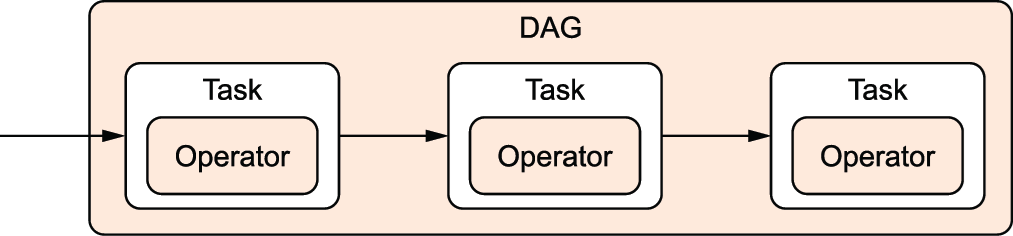

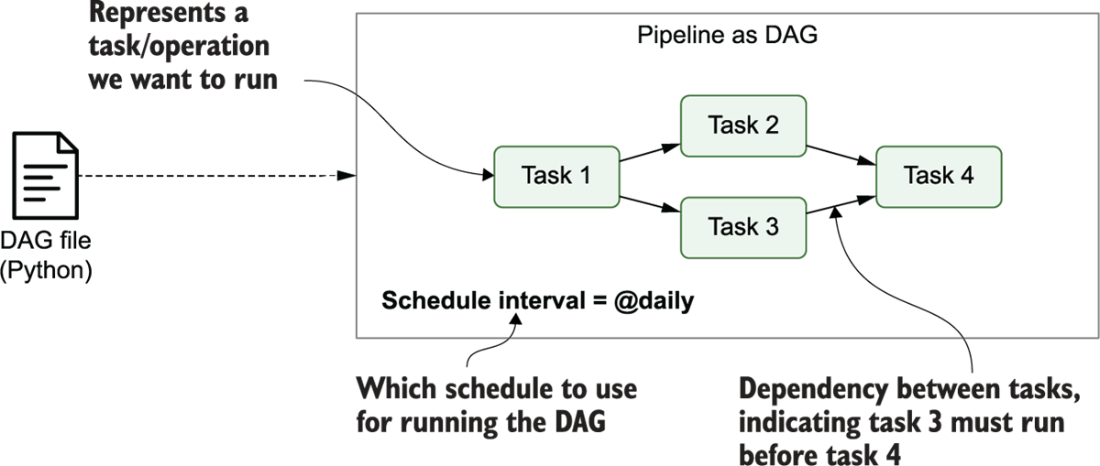

Directed Acyclic Graph (DAG): Your pipeline as a whole! DAGs consist of multiple tasks, which you “string together” using the control flow operators >> and <<

- [Ex] second_task, third_task can’t start until first_task completes:
- [Ex] fourth_task can’t start until third_task completes:

What kinds of tasks can we create? Brings us to another concept…
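The two dependency rules above can be modeled in a few lines of plain Python. ToyTask below is a hypothetical class, not Airflow's implementation: it only mimics how >> and << record upstream tasks, then uses the standard library's graphlib (Python 3.9+) to derive one valid run order. In a real DAG file, the same two dependency lines would connect actual task objects.

```python
from graphlib import TopologicalSorter  # Python 3.9+


class ToyTask:
    """Toy stand-in for an Airflow task: it only records who must run first."""

    def __init__(self, task_id: str):
        self.task_id = task_id
        self.upstream = set()  # task_ids that must finish before this one

    def __rshift__(self, other):
        # self >> other: every task on the right waits for self
        for task in (other if isinstance(other, list) else [other]):
            task.upstream.add(self.task_id)
        return other  # returning the right side lets a >> b >> c chain

    def __lshift__(self, other):
        # self << other: self waits for every task on the right
        for task in (other if isinstance(other, list) else [other]):
            self.upstream.add(task.task_id)
        return other


first_task, second_task, third_task, fourth_task = (
    ToyTask(name) for name in ("first_task", "second_task", "third_task", "fourth_task")
)

first_task >> [second_task, third_task]  # second_task, third_task wait for first_task
fourth_task << third_task                # fourth_task waits for third_task

# graphlib expects {node: set of predecessors}
graph = {t.task_id: t.upstream for t in (first_task, second_task, third_task, fourth_task)}
order = list(TopologicalSorter(graph).static_order())
print(order)  # one valid execution order; first_task always comes first
```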

Operators: What Kind of task?

- “Core” Operators: BashOperator and PythonOperator
- Plus hundreds of “community provider” Operators: HttpOperator, S3FileTransformOperator, SQLExecuteQueryOperator, EmailOperator, SlackAPIOperator

+ Jinja templating for managing how data “passes” from one step to the next:
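Airflow fills in templated operator arguments with Jinja at run time, using a context of run-specific values such as {{ ds }} (the logical date as YYYY-MM-DD) and {{ ds_nodash }}. The sketch below swaps Jinja for a tiny regex-based stand-in so it runs with only the standard library; the render helper, the clean.py script and its flags are hypothetical.

```python
import re
from datetime import datetime, timezone

# Toy stand-in for Jinja: replaces "{{ name }}" with values from a context dict.
# Real Airflow uses the jinja2 library, which supports much more (filters, macros, ...).
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, context: dict) -> str:
    # Look up each placeholder's name in the context; unknown names raise KeyError
    return PLACEHOLDER.sub(lambda m: str(context[m.group(1)]), template)


logical_date = datetime(2025, 11, 3, tzinfo=timezone.utc)
context = {
    "ds": logical_date.strftime("%Y-%m-%d"),       # like Airflow's {{ ds }}
    "ds_nodash": logical_date.strftime("%Y%m%d"),  # like Airflow's {{ ds_nodash }}
}

# A hypothetical command, like one you might pass as BashOperator(bash_command=...)
command = render("python clean.py --input raw/{{ ds_nodash }}.csv --date {{ ds }}", context)
print(command)  # python clean.py --input raw/20251103.csv --date 2025-11-03
```

The payoff: the same DAG code processes a different day's file on every run, because the date is injected per run rather than hard-coded.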

Task vs. Operator: A Sanity-Preserving Distinction

From Operator docs:

When we talk about a Task, we mean the generic “unit of execution” of a DAG; when we talk about an Operator, we mean a [specific] pre-made Task template, whose logic is all done for you and that just needs some arguments.

Task:

Operator:
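To make the Task/Operator distinction concrete, here is a toy model: ToyOperator plays the role of a pre-made template, and each configured instance of it is a task. All class and function names are hypothetical; the argument names python_callable, op_kwargs and bash_command are borrowed from Airflow's PythonOperator and BashOperator only to show the parallel, and this is not Airflow's code.

```python
import subprocess


class ToyOperator:
    """Toy 'pre-made task template': subclasses hold the logic, users pass arguments."""

    def __init__(self, task_id: str):
        self.task_id = task_id

    def execute(self):
        raise NotImplementedError


class ToyPythonOperator(ToyOperator):
    # Same spirit as PythonOperator(python_callable=..., op_kwargs=...)
    def __init__(self, task_id, python_callable, op_kwargs=None):
        super().__init__(task_id)
        self.python_callable = python_callable
        self.op_kwargs = op_kwargs or {}

    def execute(self):
        return self.python_callable(**self.op_kwargs)


class ToyBashOperator(ToyOperator):
    # Same spirit as BashOperator(bash_command=...); runs through the system shell
    def __init__(self, task_id, bash_command):
        super().__init__(task_id)
        self.bash_command = bash_command

    def execute(self):
        done = subprocess.run(
            self.bash_command, shell=True, capture_output=True, text=True, check=True
        )
        return done.stdout.strip()


def describe(path):
    # Hypothetical task logic: the only part a user writes
    return f"would clean {path}"


# Each configured instance of a template is a task
tasks = [
    ToyPythonOperator("clean", python_callable=describe, op_kwargs={"path": "raw.csv"}),
    ToyBashOperator("greet", bash_command="echo hello"),
]
results = {t.task_id: t.execute() for t in tasks}
print(results)  # {'clean': 'would clean raw.csv', 'greet': 'hello'}
```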

Concepts ⇝ Code

start-airflow.sh

- Full Airflow “ecosystem” is running once you’ve started each piece via the above (bash) commands!
- Let’s look at each in turn…
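A launcher in the spirit of start-airflow.sh could look like the Python sketch below. It assumes the Airflow 3.x CLI subcommands db migrate, api-server, scheduler and dag-processor (one per component on the next slides); verify them with airflow --help for your installed version. By default it only prints the commands; pass --run to actually start them.

```python
import shlex
import subprocess
import sys

# Assumed Airflow 3.x CLI commands, one per component (check `airflow --help`)
COMPONENTS = {
    "metastore": ["airflow", "db", "migrate"],      # one-off: create/upgrade the metadata DB
    "api-server": ["airflow", "api-server"],        # web UI (+ API)
    "scheduler": ["airflow", "scheduler"],          # decides what runs, hands it to the executor
    "dag-processor": ["airflow", "dag-processor"],  # parses your DAG files
}


def launch(dry_run: bool = True):
    procs = []
    for name, argv in COMPONENTS.items():
        print(f"[{name}] {shlex.join(argv)}")
        if dry_run:
            continue
        if name == "metastore":
            subprocess.run(argv, check=True)  # must finish before the services start
        else:
            procs.append(subprocess.Popen(argv))  # long-running background services
    return procs


if __name__ == "__main__":
    launch(dry_run="--run" not in sys.argv)
```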

db migrate: The Airflow Metastore

start-airflow.sh

- General info used by DAGs: variables, connections, XComs

- Data about DAG and task runs (generated by scheduler)

- Logs / error information

- Not modified directly/manually! It is managed by Airflow; to modify it, use the API Server →

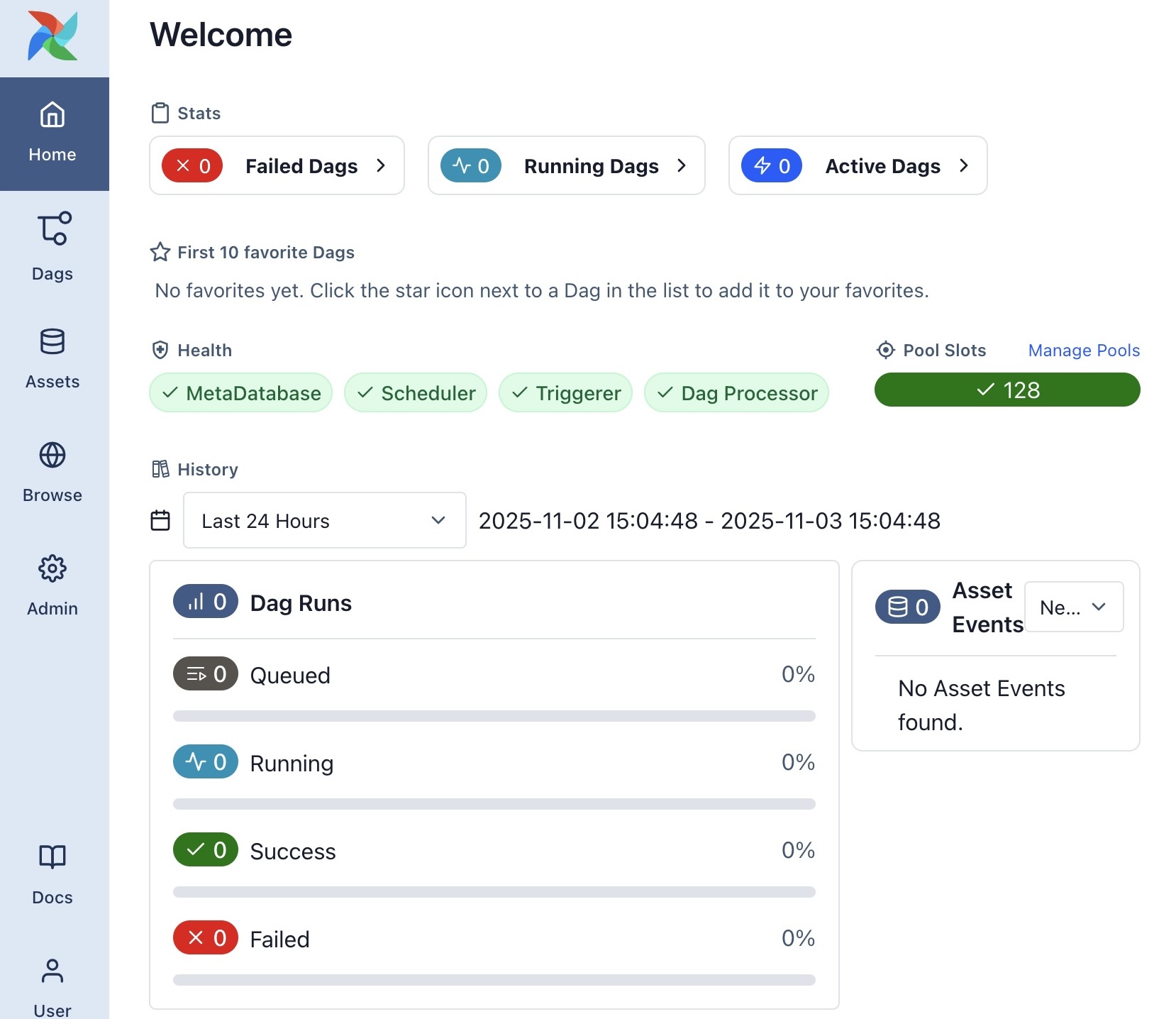
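As a rough picture of what the metastore keeps, the sketch below builds a toy in-memory SQLite database with a variable table and an XCom table. The schema, the xcom_push/xcom_pull helpers and the stored values are simplified and hypothetical; in real Airflow, tasks use ti.xcom_push / ti.xcom_pull (or simply return a value) and never touch the metastore's tables directly.

```python
import json
import sqlite3

# Toy, in-memory model of two things the metastore holds. Airflow owns the real
# schema (created by `airflow db migrate`); don't write to it by hand.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE variable (name TEXT PRIMARY KEY, val TEXT);
    CREATE TABLE xcom (
        dag_id TEXT, run_id TEXT, task_id TEXT, xcom_key TEXT, value_json TEXT,
        PRIMARY KEY (dag_id, run_id, task_id, xcom_key)
    );
    """
)


def xcom_push(dag_id, run_id, task_id, value, key="return_value"):
    # Stored in the database itself, which is why XComs are meant for small values
    conn.execute(
        "INSERT OR REPLACE INTO xcom VALUES (?, ?, ?, ?, ?)",
        (dag_id, run_id, task_id, key, json.dumps(value)),
    )


def xcom_pull(dag_id, run_id, task_id, key="return_value"):
    row = conn.execute(
        "SELECT value_json FROM xcom"
        " WHERE dag_id = ? AND run_id = ? AND task_id = ? AND xcom_key = ?",
        (dag_id, run_id, task_id, key),
    ).fetchone()
    return None if row is None else json.loads(row[0])


# Hypothetical values, for illustration only
conn.execute("INSERT INTO variable VALUES (?, ?)", ("raw_bucket", "s3://example-bucket/raw"))
xcom_push("etl", "manual__2025-11-03", "extract", {"rows": 1200})
print(xcom_pull("etl", "manual__2025-11-03", "extract"))  # {'rows': 1200}
```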

API Server

start-airflow.sh

Web UI for managing Airflow (much more on this later!)

Default Login Info

By default, db migrate generates an admin password in ~/simple_auth_manager_passwords.json.generated

Scheduler → Executor

start-airflow.sh

- Default: LocalExecutor
- For scaling: EcsExecutor (AWS ECS), KubernetesExecutor
- See also: SparkSubmitOperator

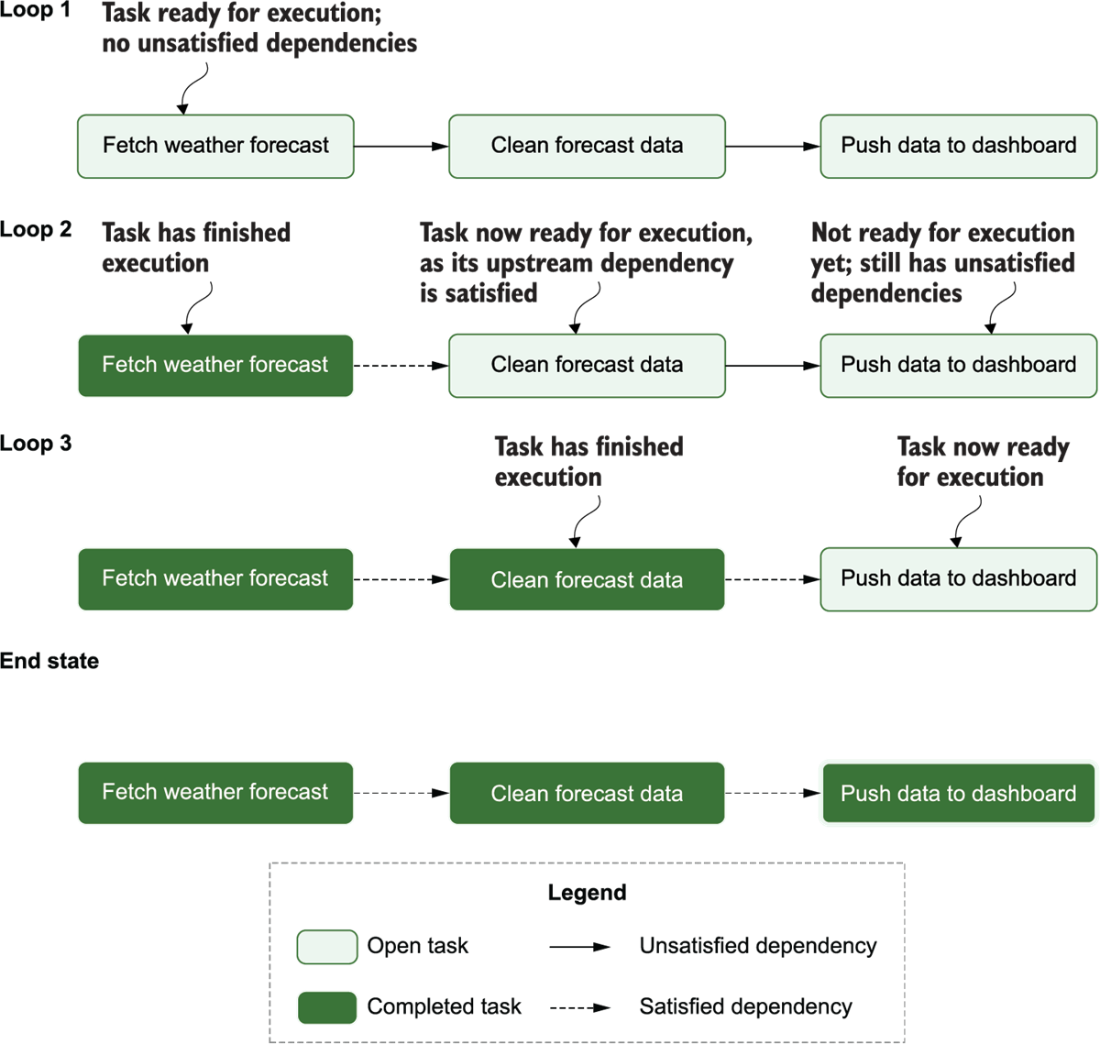
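The Scheduler → Executor split can be sketched as two roles in one loop: the “scheduler” part picks every task whose upstream tasks have finished, and the “executor” part runs those tasks in parallel. This is a toy using a thread pool and a hypothetical four-task DAG; Airflow's LocalExecutor runs tasks in separate local processes, and the real scheduler queues each task as soon as its upstreams finish rather than in lockstep waves.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical four-task DAG: task -> set of upstream tasks
UPSTREAM = {
    "extract": set(),
    "clean": {"extract"},
    "profile": {"extract"},
    "load": {"clean"},
}


def run_task(task_id):
    # Placeholder for real work (a BashOperator, PythonOperator, ...)
    return f"{task_id} done"


def schedule_and_execute(upstream, max_workers=4):
    done, waves = set(), []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while len(done) < len(upstream):
            # "Scheduler" role: every not-yet-run task whose upstreams all finished
            ready = sorted(t for t, deps in upstream.items() if t not in done and deps <= done)
            if not ready:
                raise ValueError("cycle detected: not a DAG")
            # "Executor" role: run the ready tasks in parallel and wait for all of them
            list(pool.map(run_task, ready))
            done.update(ready)
            waves.append(ready)
    return waves


print(schedule_and_execute(UPSTREAM))
# [['extract'], ['clean', 'profile'], ['load']]
```

Swapping LocalExecutor for KubernetesExecutor or EcsExecutor changes only *where* the “executor” step runs each task, not how the scheduler decides what is ready.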

DAG Processor

start-airflow.sh

From Ruiter et al. (2026)

The schedule Argument

(Airflow uses pendulum under the hood, rather than datetime!)

dag_scheduling.py
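A timezone-aware start_date plus a schedule determines which time windows a DAG covers. The sketch below uses only the standard library (datetime with timezone.utc standing in for pendulum) to list the day-long windows that have fully elapsed under an @daily schedule. The commented DAG header shows roughly how this looks in a real file, assuming the Airflow 3.x airflow.sdk import path; the dag_id is hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Roughly what a real DAG file would contain (Airflow 3.x, assumed):
#   import pendulum
#   from airflow.sdk import DAG
#   with DAG(dag_id="etl", schedule="@daily",
#            start_date=pendulum.datetime(2025, 11, 1, tz="UTC"), catchup=False): ...


def daily_intervals(start: datetime, now: datetime):
    """(window_start, window_end) for every full day between start and now."""
    if start.tzinfo is None or now.tzinfo is None:
        # This sketch insists on aware datetimes, which pendulum gives you by default
        raise ValueError("use timezone-aware datetimes")
    intervals, cursor = [], start
    while cursor + timedelta(days=1) <= now:
        intervals.append((cursor, cursor + timedelta(days=1)))
        cursor += timedelta(days=1)
    return intervals


start = datetime(2025, 11, 1, tzinfo=timezone.utc)
now = datetime(2025, 11, 3, 9, 30, tzinfo=timezone.utc)
for begin, end in daily_intervals(start, now):
    print(begin.date(), "->", end.date())
# 2025-11-01 -> 2025-11-02
# 2025-11-02 -> 2025-11-03
```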

Cron: Full-on scheduling language (used by computers since 1975!)

crontab.sh

Cron Presets: None, "@once", "@continuous", "@hourly", "@daily", "@weekly"
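The five cron fields are minute, hour, day-of-month, month and day-of-week. The toy matcher below (not what Airflow uses internally) checks whether a datetime fires a given expression and expands three of the presets to their standard cron equivalents. It supports only *, N, A-B, */S, A-B/S and comma lists, and it ANDs the day-of-month and day-of-week fields, whereas real cron ORs them when both are restricted.

```python
from datetime import datetime

# Standard five-field equivalents of three fixed-interval presets
PRESETS = {
    "@hourly": "0 * * * *",
    "@daily": "0 0 * * *",
    "@weekly": "0 0 * * 0",
}
FIELD_MIN = [0, 0, 1, 1, 0]  # minute, hour, day-of-month, month, day-of-week


def field_matches(field: str, value: int, field_min: int) -> bool:
    for part in field.split(","):
        base, _, step = part.partition("/")
        step = int(step) if step else 1
        if base == "*":
            lo, hi = field_min, value  # steps count from the field's minimum
        elif "-" in base:
            lo, hi = map(int, base.split("-"))
        else:
            lo = hi = int(base)
        if lo <= value <= hi and (value - lo) % step == 0:
            return True
    return False


def cron_matches(expr: str, when: datetime) -> bool:
    fields = PRESETS.get(expr, expr).split()
    # Cron counts Sunday as 0; Python's weekday() counts Monday as 0
    values = [when.minute, when.hour, when.day, when.month, (when.weekday() + 1) % 7]
    return all(field_matches(f, v, m) for f, v, m in zip(fields, values, FIELD_MIN))


monday_midnight = datetime(2025, 11, 3, 0, 0)  # Monday, November 3, 2025
print(cron_matches("@daily", monday_midnight))   # True
print(cron_matches("@weekly", monday_midnight))  # False (weekly fires on Sundays)
print(cron_matches("*/15 9-17 * * 1-5", datetime(2025, 11, 3, 9, 45)))  # True
```

The last expression reads “every 15 minutes, 9:00 to 17:45, Monday through Friday”, the kind of schedule no single preset can express.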

External Service Integration

| Service | Command |
|---|---|
| AWS | pip install 'apache-airflow[amazon]' |
| Azure | pip install 'apache-airflow[microsoft-azure]' |
| Databricks | pip install 'apache-airflow[databricks]' |
| GitHub | pip install 'apache-airflow[github]' |
| Google Cloud | pip install 'apache-airflow[google]' |
| MongoDB | pip install 'apache-airflow[mongo]' |
| OpenAI | pip install 'apache-airflow[openai]' |
| Slack | pip install 'apache-airflow[slack]' |
| Spark | pip install 'apache-airflow[apache-spark]' |
| Tableau | pip install 'apache-airflow[tableau]' |

(And many more!)
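Each extra in the table pulls in a separate provider package named apache-airflow-providers-<name> (for example, apache-airflow[amazon] installs apache-airflow-providers-amazon). A quick standard-library way to see which providers are present in the current environment; the helper name is our own:

```python
from importlib.metadata import distributions


def installed_providers():
    """Sorted names of installed Airflow provider packages (empty if none)."""
    prefix = "apache-airflow-providers-"
    names = set()
    for dist in distributions():
        # Normalize, since some tools report names with underscores
        name = (dist.metadata.get("Name") or "").lower().replace("_", "-")
        if name.startswith(prefix):
            names.add(name)
    return sorted(names)


for name in installed_providers():
    print(name)
```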

Jinja Example

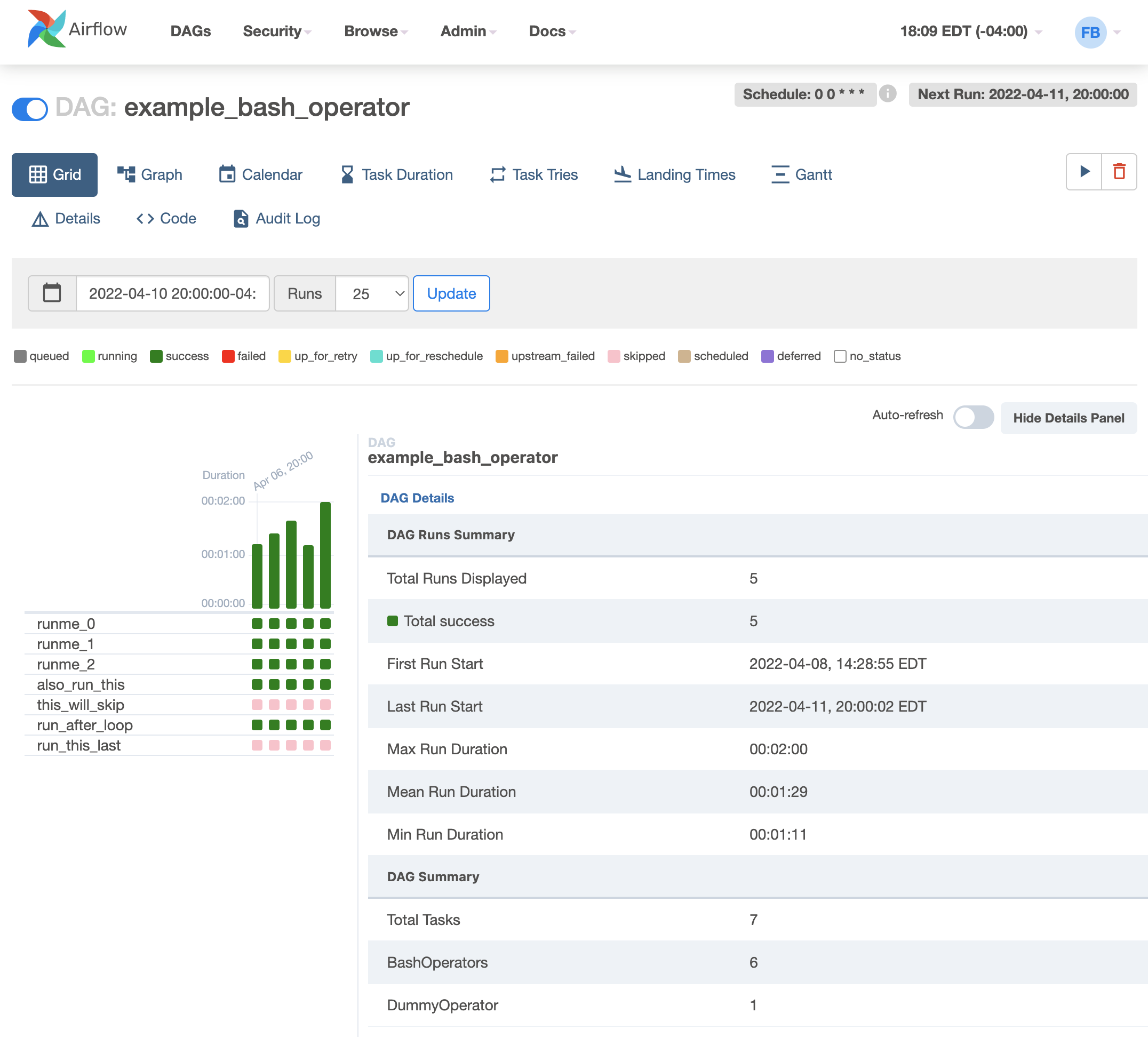

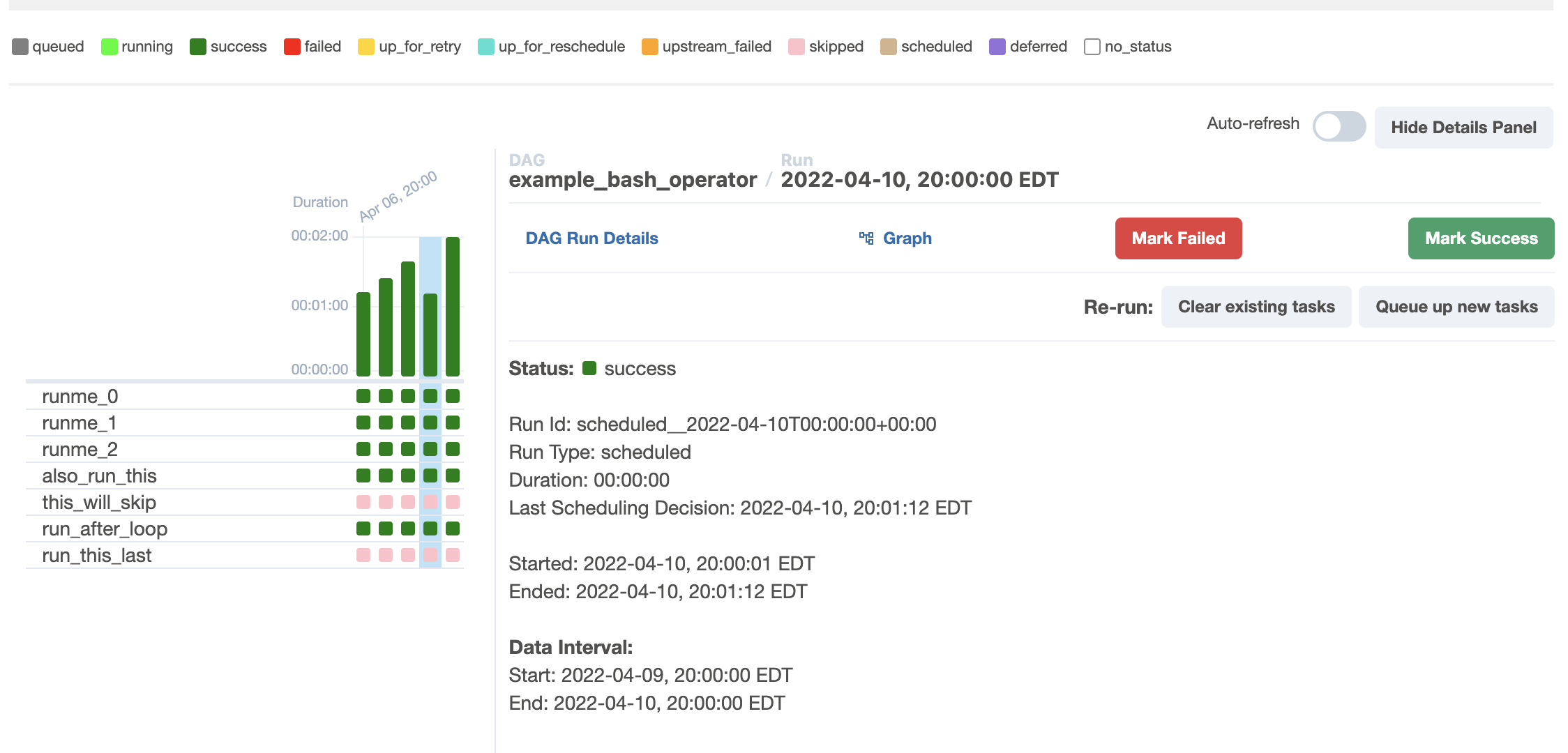

Airflow UI: Grid View

Airflow UI: Run Details

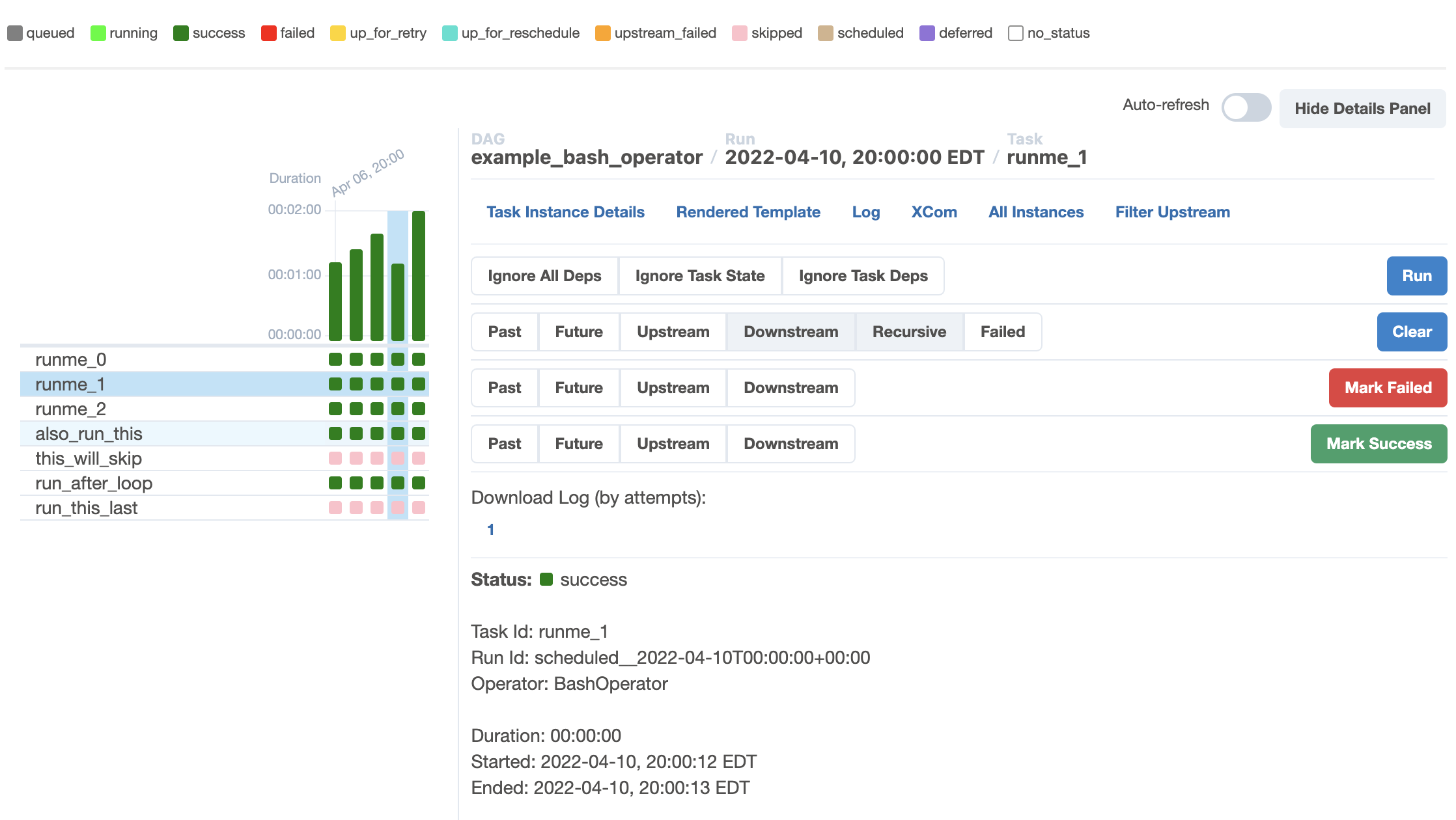

Airflow UI: Task Instance Details

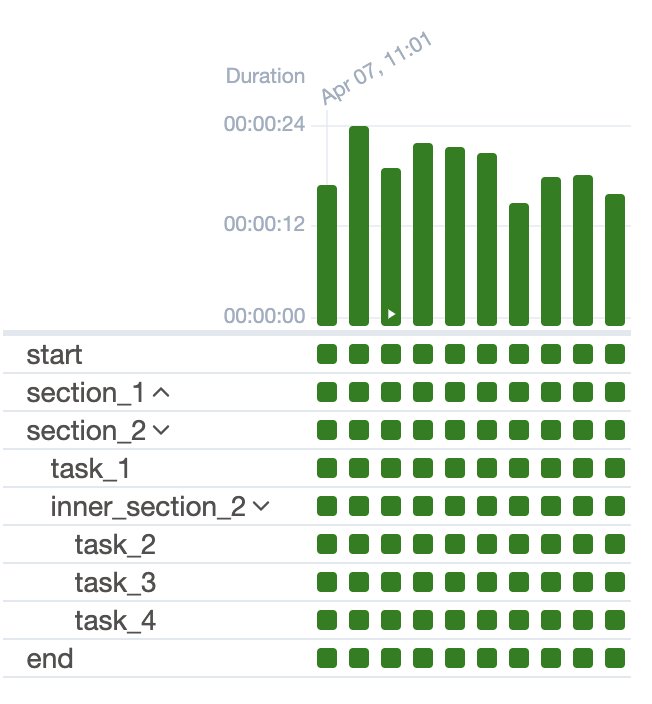

Tasks → Task Groups

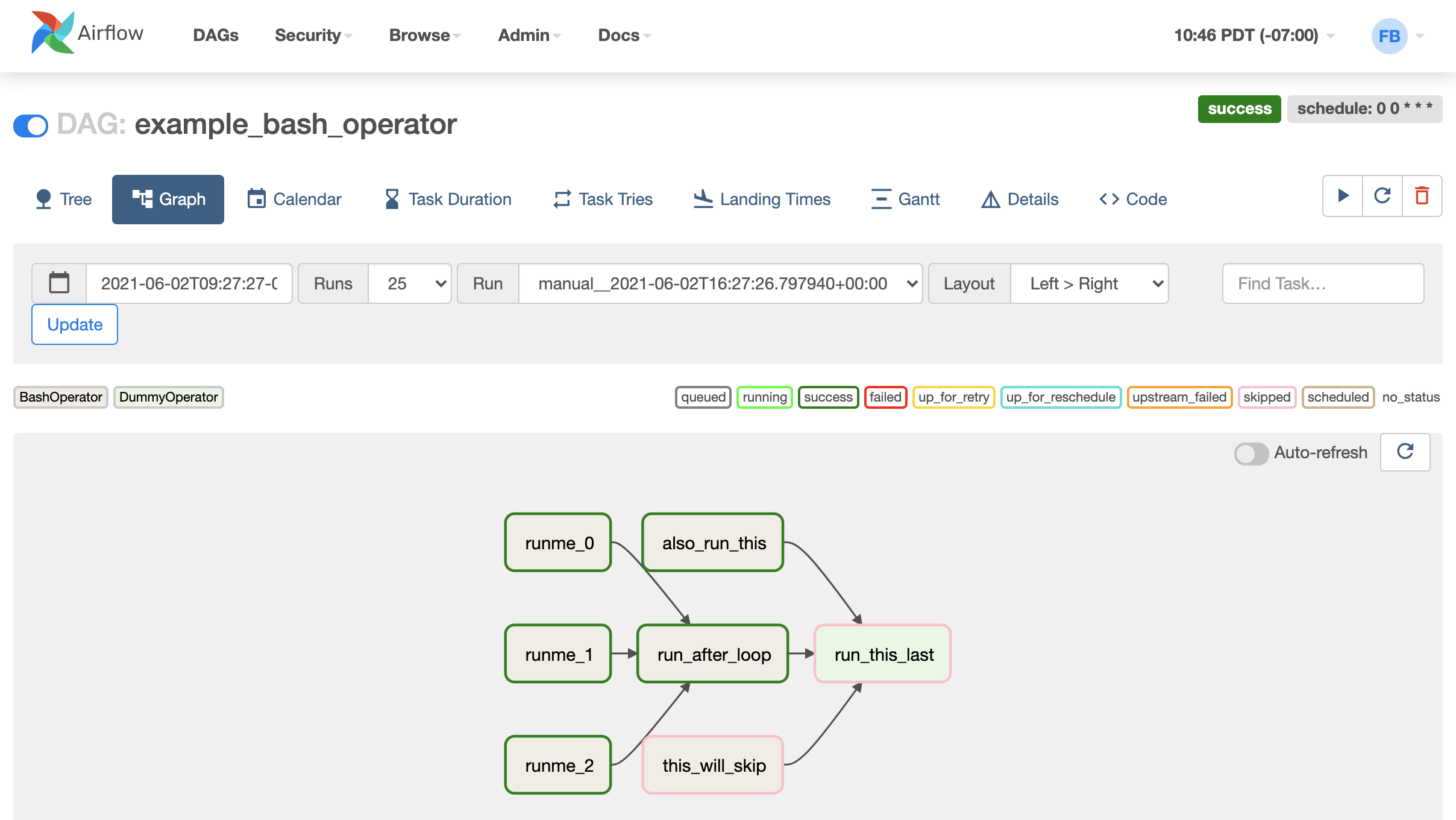

Graph View

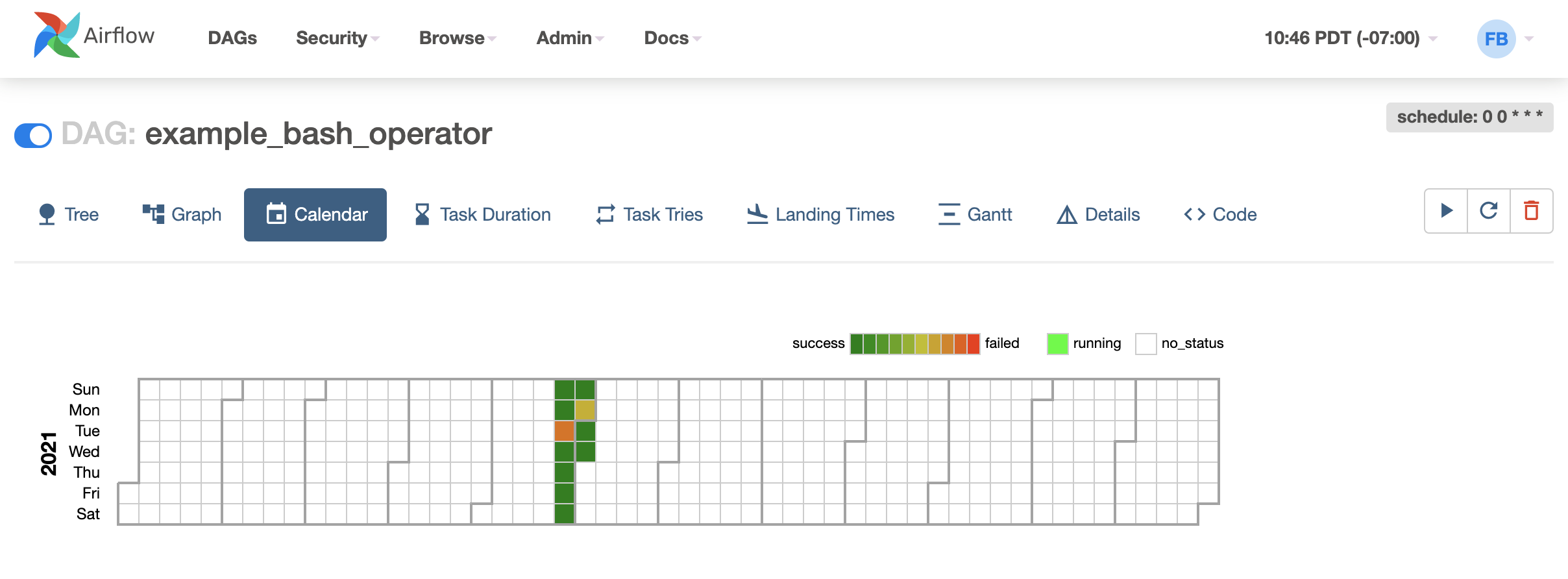

Calendar View

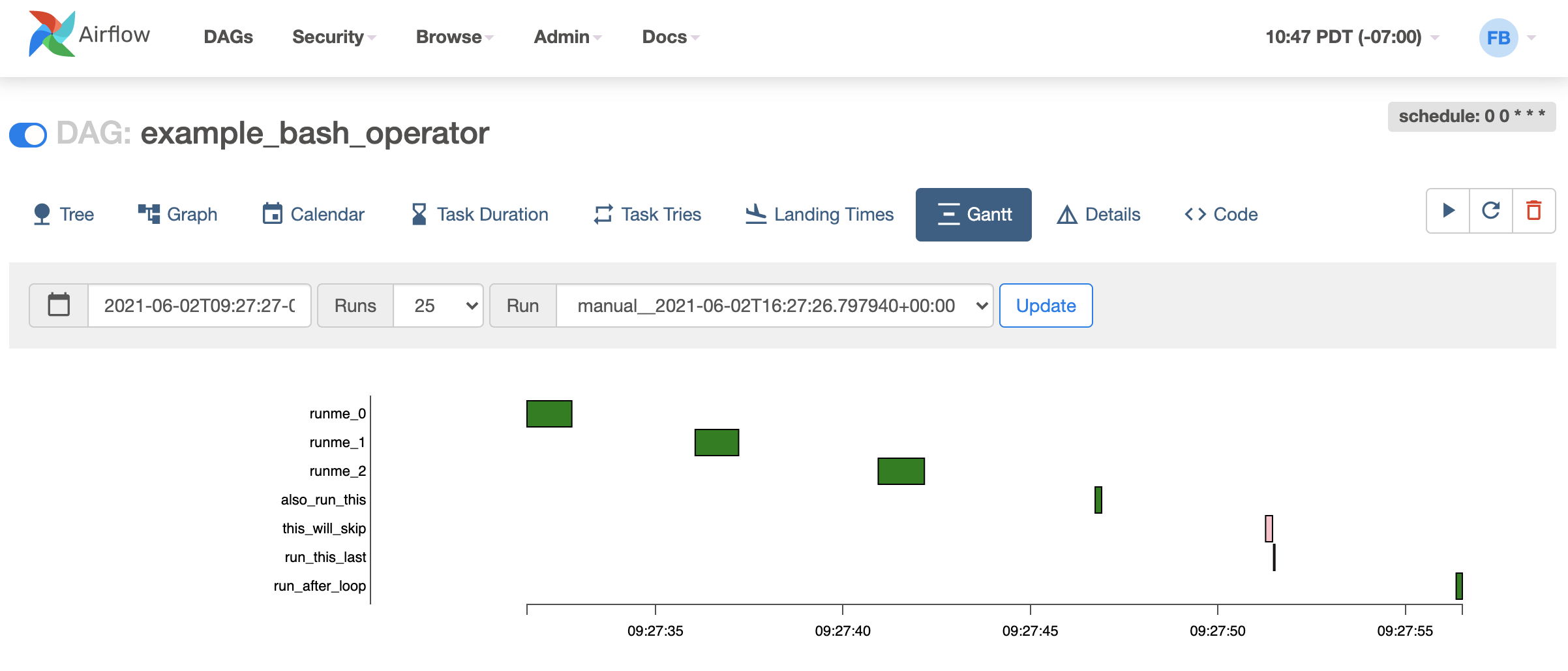

Gantt View

New Book: Up-To-Date (Airflow 3.0) Demos 🤓

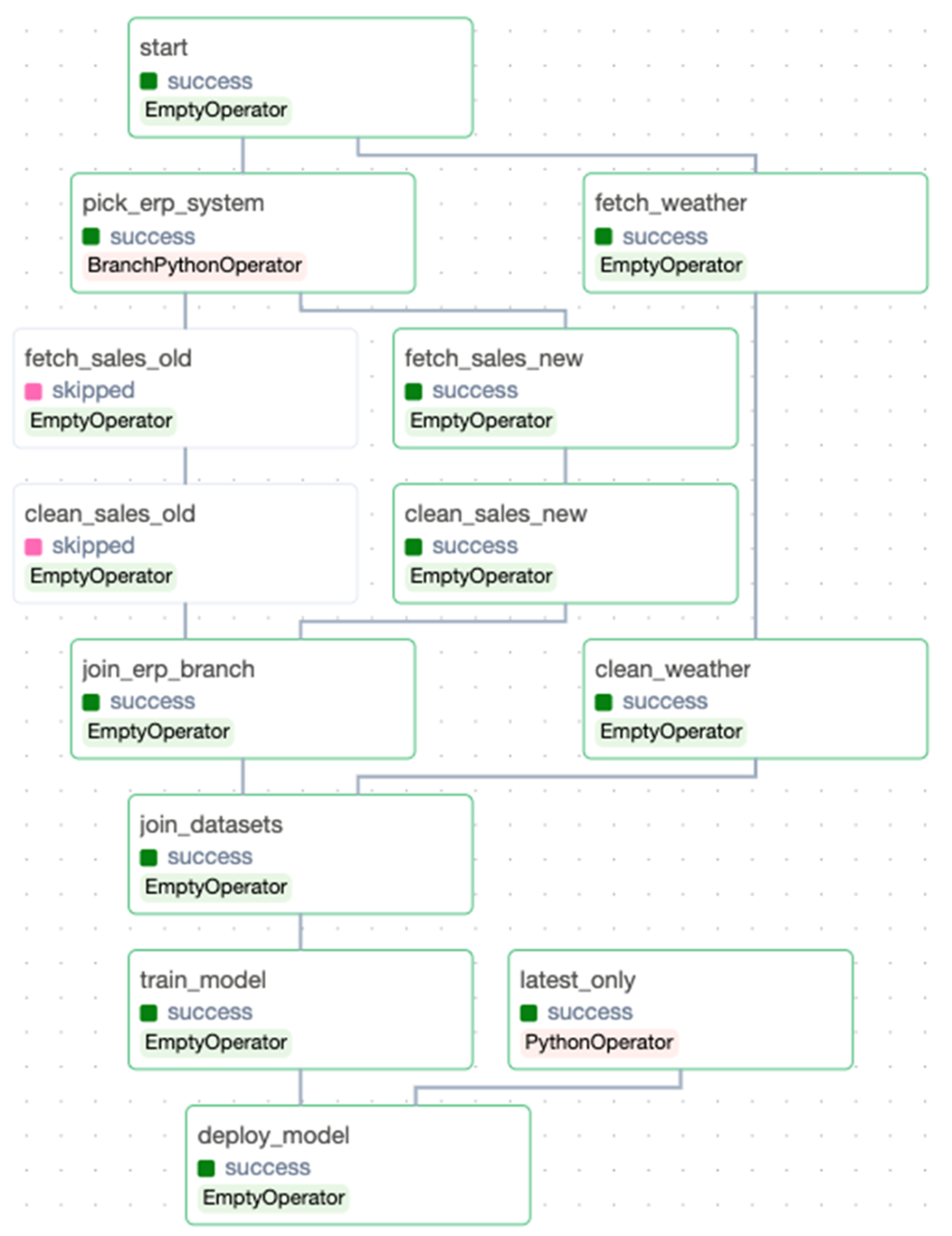

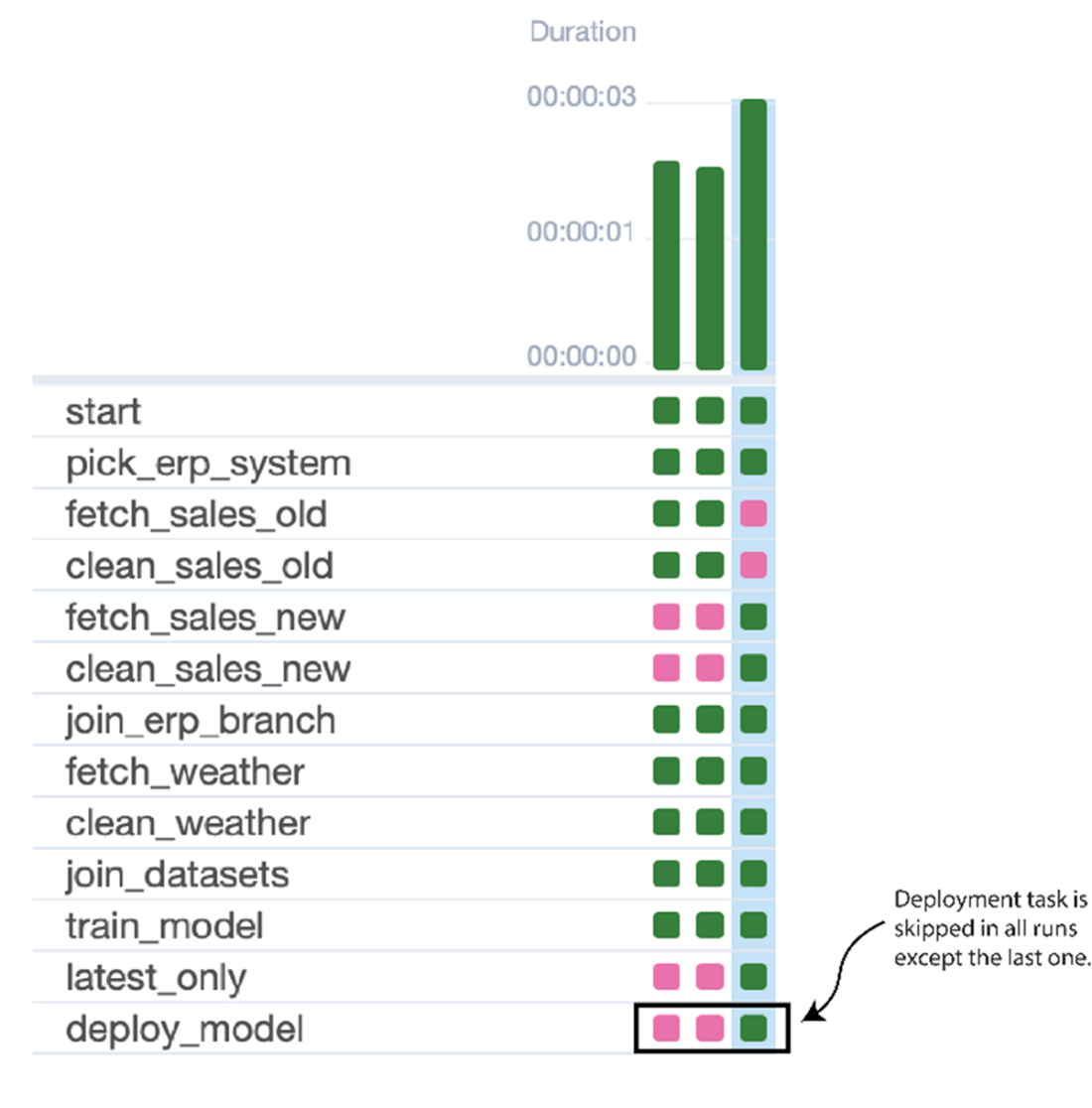

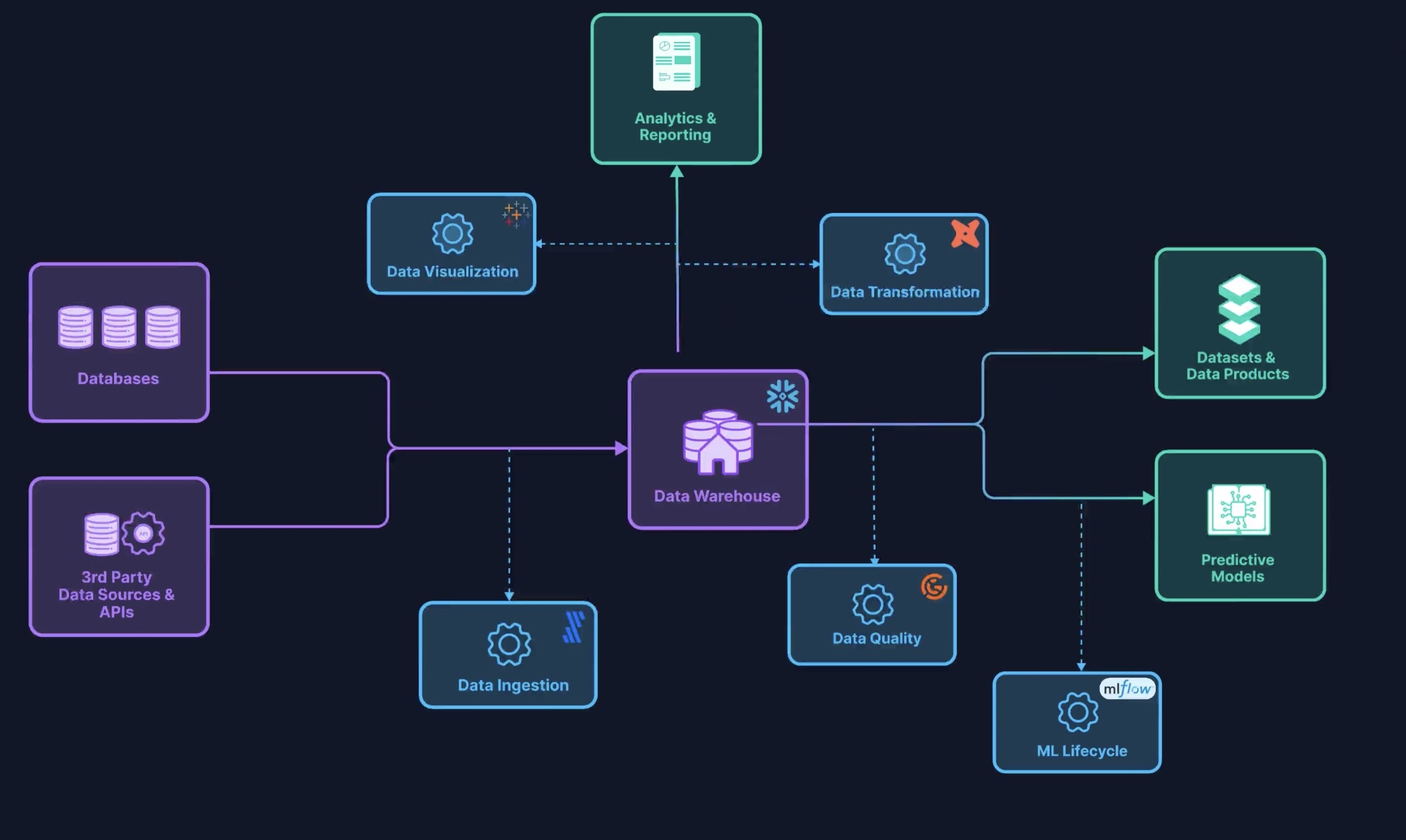

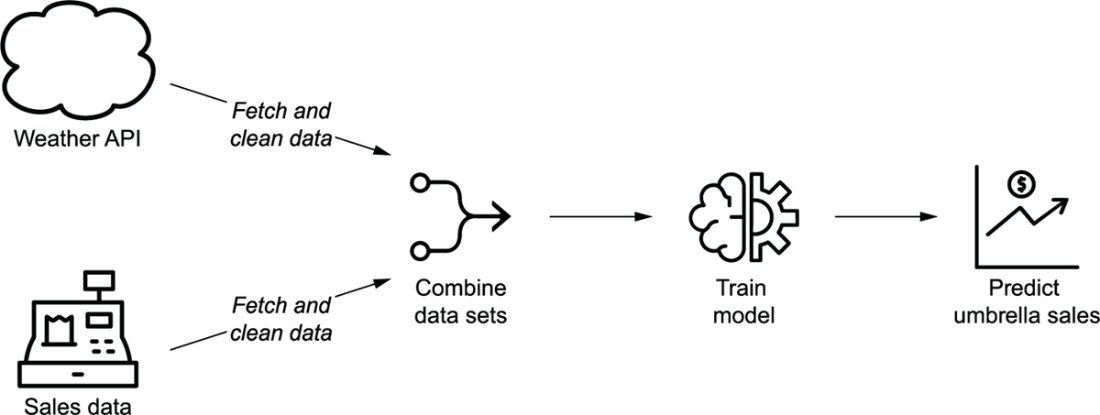

Conceptual Pipelines ⇝ DAGs

Main challenge: converting “intuitive” pipelines in our heads:

Into DAGs with concrete Tasks, dependencies, and triggers:

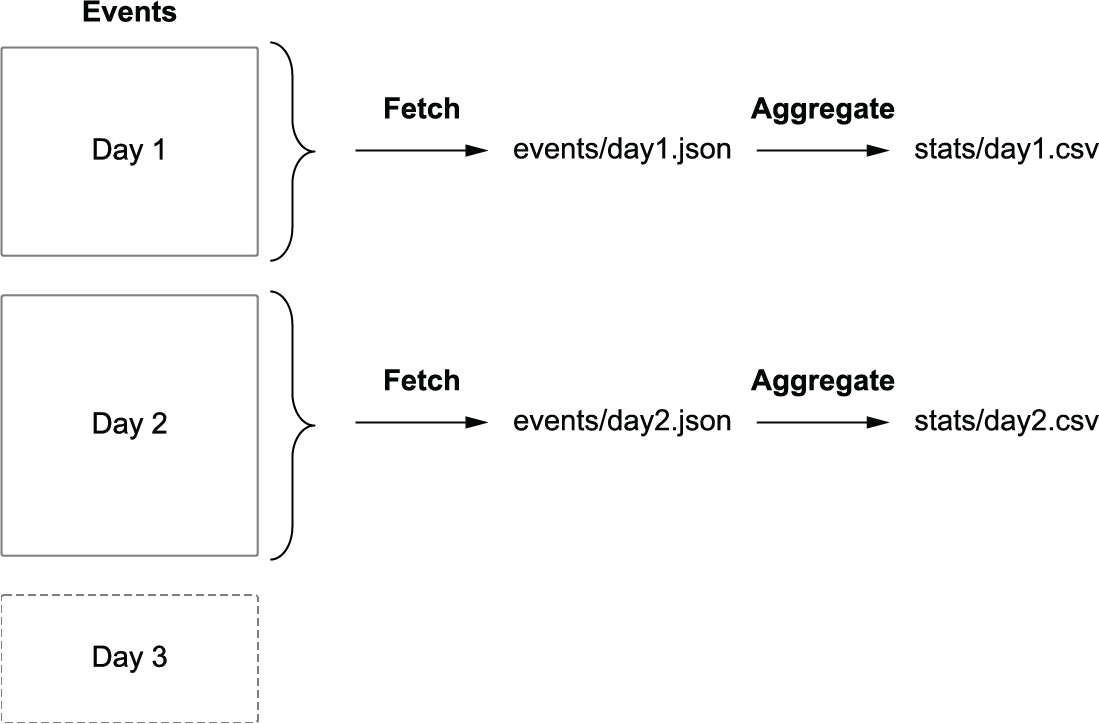

Implementation Detail 1: Backfilling

Implementation Detail 2: Train Model Only After Backfill

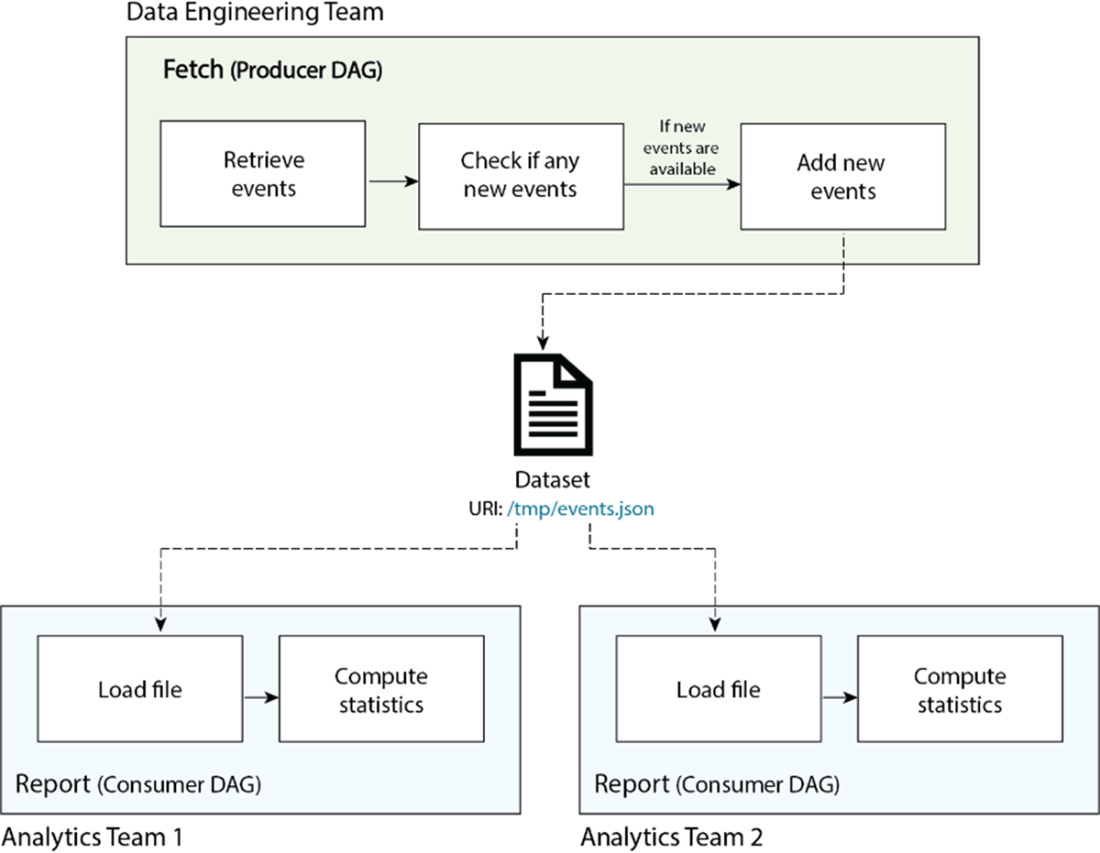
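Implementation Details 1 and 2 boil down to bookkeeping: find the past intervals that never ran (the backfill), and hold model training until none are left. The sketch below models this with plain dates; the function names and the set of completed days are hypothetical. In Airflow itself the backfill would be launched from the CLI or UI, and the gate could be expressed through task dependencies or asset triggers.

```python
from datetime import date, timedelta


def missing_days(start: date, end: date, completed: set) -> list:
    """Days in [start, end) with no successful run yet: the backfill to-do list."""
    days = [start + timedelta(days=i) for i in range((end - start).days)]
    return [d for d in days if d not in completed]


def ready_to_train(start: date, end: date, completed: set) -> bool:
    # Gate: only train once every day in the window has been processed
    return not missing_days(start, end, completed)


start, end = date(2025, 10, 27), date(2025, 11, 3)  # one week, end exclusive
completed = {date(2025, 10, 27), date(2025, 10, 28), date(2025, 11, 1)}  # hypothetical

todo = missing_days(start, end, completed)
print([d.isoformat() for d in todo])
# ['2025-10-29', '2025-10-30', '2025-10-31', '2025-11-02']
print(ready_to_train(start, end, completed))              # False
print(ready_to_train(start, end, completed | set(todo)))  # True
```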

Implementation Detail 3: Downstream Consumers

From Ruiter et al. (2026)
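Downstream consumers can be scheduled on data rather than on a clock: a producer marks an asset as updated, and every DAG scheduled on that asset is triggered. The toy registry below mimics that behavior, including the rule that a consumer scheduled on several assets waits until all of them have been updated since its last run. It is not Airflow's implementation; the comments show the assumed Airflow 3.x equivalents, and the asset URIs and DAG names are hypothetical.

```python
from collections import defaultdict

# Assumed Airflow 3.x equivalents (from airflow.sdk import Asset, dag, task):
#   producer task: @task(outlets=[Asset("s3://bucket/clean.parquet")])
#   consumer DAG:  @dag(schedule=[Asset("s3://bucket/clean.parquet")])


class ToyAssetRegistry:
    def __init__(self):
        self.consumers = defaultdict(list)  # asset -> [(consumer, needed assets, callback)]
        self.pending = defaultdict(set)     # consumer -> assets updated since its last run

    def register(self, name, assets, run):
        for uri in assets:
            self.consumers[uri].append((name, set(assets), run))

    def mark_updated(self, uri):
        triggered = []
        for name, needed, run in self.consumers[uri]:
            self.pending[name].add(uri)
            if self.pending[name] >= needed:  # all required assets updated?
                self.pending[name].clear()
                run()
                triggered.append(name)
        return triggered


registry = ToyAssetRegistry()
log = []
registry.register("train_model", ["clean_data"], lambda: log.append("train_model ran"))
registry.register("dashboard", ["clean_data", "model"], lambda: log.append("dashboard ran"))

first = registry.mark_updated("clean_data")
second = registry.mark_updated("model")
print(first)   # ['train_model']
print(second)  # ['dashboard']
```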

Lab Time!

References

DSAN 6000 Week 10: ETL Pipelines with Airflow