Week 3: Ethical Frameworks, Rights, and Discrimination

DSAN 5450: Data Ethics and Policy

Spring 2026, Georgetown University

Wednesday, January 28, 2026

Schedule

Today’s Planned Schedule:

| Start | End | Topic |
|---|---|---|
| 3:30pm | 4:00pm | More Toolkit → |
| 4:00pm | 4:20pm | Training Data = Human Labor → |
| 4:20pm | 5:00pm | Metaethics → |
| 5:00pm | 5:10pm | Break! |
| 5:10pm | 5:30pm | Individual \(\leadsto\) Social Ethics → |
| 5:30pm | 6:00pm | Game Theory! → |

Where We Left Off:

Data Science for Who? ✅

No more Humans vs. Computers (\(\leadsto\) Humans vs. Humans) ✅

Operationalization ✅

Apples-to-Apples Comparisons

Implementation

“Someone else’s problem”?

Operationalization

- Think of claims commonly made based on “data”:

- Markets create economic prosperity

- A glass of wine in the evening prevents cancer

- Policing makes communities safer

- How exactly are “prosperity”, “preventing cancer”, “policing”, “community safety” being measured? Who is measuring? Mechanisms for feedback \(\leadsto\) change?

What Is Being Compared? 🍎 🍊 🍐

| | 🍎 Apples 🍎 | 🍊 Oranges 🍊 | 🍐 Pears 🍐 |
|---|---|---|---|
| “Countries” in General | Polities w/250-500M people (US 335M, UP 250M, EU 450M) | LatAm Polities w/10-30M people (Venezuela, Cuba, Haiti) | Polities w/over 1 billion people (China 1.4B, India 1.4B, Africa 1.4B, N+S America 1B) |
| | Polities independent since 1776 (US) | Polities independent since 1990 (Namibia) | Non-self-governing polities (Puerto Rico, Palestine, New Caledonia) |
| “Self-Sufficiency” | Colonizing polities (US) | Polities colonized by them (Philippines) | Non-colonized polities (Ethiopia, Thailand) |
| | Polities w/infrastructure built up over 250 yrs via slave labor (US 🇺🇸) | Polities populated by former slaves (Liberia 🇱🇷) | Polities that paid reparations to descendants of [certain] enslaved groups (Germany) |
| Political Systems | Democracies (US) | Democracies til they democratically elected someone US didn’t like (Iran 1953, Guatemala 1954, Chile 1973) | Non-democracies that violently repress democratic movements w/US arms (Saudi Arabia) |
| | Polities enforcing 66 yr embargo on Cuba (US) | Polities with 66 yr embargo imposed on them by US (Cuba) | Polities without 66 yr embargo imposed on them by US (…) |

How Are They Being Compared?

- What metric? Over what timespan?

- What unit of obs? Agg function? Level of aggregation?

Comparing India’s death rate of 12 per thousand with China’s of 7 per thousand, and applying that difference to the Indian population of 781 million in 1986, we get an estimate of excess normal mortality in India of 3.9 million per year. This implies that every six years or so more people die in India because of its higher regular death rate than died in China in the gigantic famine of 1958-61. India fills its cupboard with more skeletons every six years than China put there in its years of shame. (Drèze and Sen 1991)
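The arithmetic behind the quoted estimate can be checked directly. This is a minimal sketch that uses only the three figures given in the passage (the variable names are ours, not Drèze and Sen's); it reproduces the 3.9 million per year figure and the six-year total that the authors set against the famine.

```python
# Figures quoted in the Drèze and Sen (1991) passage above.
INDIA_DEATHS_PER_1000 = 12
CHINA_DEATHS_PER_1000 = 7
INDIA_POPULATION_1986 = 781_000_000

# Excess deaths per year = rate difference (per thousand) x population / 1000.
rate_gap_per_1000 = INDIA_DEATHS_PER_1000 - CHINA_DEATHS_PER_1000
excess_per_year = rate_gap_per_1000 * INDIA_POPULATION_1986 // 1000
excess_over_six_years = 6 * excess_per_year

print(f"Excess deaths per year: {excess_per_year / 1e6:.1f} million")
print(f"Excess deaths over six years: {excess_over_six_years / 1e6:.1f} million")
```

Note how much the result depends on the operationalization choices from the previous slide: the metric (crude death rate), the unit of observation (whole country), and the timespan (a single year, 1986) are all baked into these three constants.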

Apple/Orange Criteria

…There is Still Hope! I Promise!

- Apples-to-apples comparison via Statistical Matching:

- Lyall (2020): “Treating certain ethnic groups as second-class citizens […] leads victimized soldiers to subvert military authorities once war begins. The higher an army’s inequality, the greater its rates of desertion, side-switching, and casualties”

Matching constructs pairs of belligerents that are similar across a wide range of traits thought to dictate battlefield performance but that vary in levels of prewar inequality. The more similar the belligerents, the better our estimate of inequality’s effects, as all other traits are shared and thus cannot explain observed differences in performance, helping assess how battlefield performance would have improved (declined) if the belligerent had a lower (higher) level of prewar inequality.

Since [non-matched] cases are dropped […] selected cases are more representative of average belligerents/wars than outliers with few or no matches, [providing] surer ground for testing generalizability of the book’s claims than focusing solely on canonical but unrepresentative usual suspects (Germany, the United States, Israel)
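To make the idea concrete, here is a rough sketch of one common matching approach: nearest-neighbor matching on standardized covariates, with a caliper that drops cases lacking a close match. It is not Lyall's actual procedure, and the six belligerents, the two covariates, and the caliper value are all hypothetical toy values invented for illustration.

```python
from math import sqrt
from statistics import mean, pstdev

# Hypothetical toy data, NOT from Lyall (2020). Each row is one belligerent.
# high_ineq = 1 means high prewar inequality ("treated"); 0 means low.
units = [
    {"name": "A", "high_ineq": 1, "rel_power": 0.66, "force": 50, "outcome": 0.10},
    {"name": "B", "high_ineq": 0, "rel_power": 0.65, "force": 55, "outcome": 0.45},
    {"name": "C", "high_ineq": 1, "rel_power": 0.30, "force": 20, "outcome": 0.20},
    {"name": "D", "high_ineq": 0, "rel_power": 0.32, "force": 22, "outcome": 0.50},
    {"name": "E", "high_ineq": 0, "rel_power": 0.90, "force": 400, "outcome": 1.50},
    {"name": "F", "high_ineq": 1, "rel_power": 0.10, "force": 900, "outcome": 0.05},
]
COVARIATES = ["rel_power", "force"]


def standardize(rows, keys):
    """Rescale each covariate to mean 0 and sd 1 so distances are comparable."""
    stats = {}
    for k in keys:
        values = [r[k] for r in rows]
        stats[k] = (mean(values), pstdev(values))  # assumes sd > 0
    return [{k: (r[k] - stats[k][0]) / stats[k][1] for k in keys} for r in rows]


def distance(a, b):
    """Euclidean distance between two standardized covariate profiles."""
    return sqrt(sum((a[k] - b[k]) ** 2 for k in COVARIATES))


def match_pairs(rows, caliper=1.0):
    """Pair each treated unit with its nearest control; drop it if none is close."""
    z = standardize(rows, COVARIATES)
    treated = [i for i, r in enumerate(rows) if r["high_ineq"] == 1]
    controls = [i for i, r in enumerate(rows) if r["high_ineq"] == 0]
    pairs = []
    for t in treated:
        best = min(controls, key=lambda c: distance(z[t], z[c]))
        if distance(z[t], z[best]) <= caliper:
            pairs.append((rows[t], rows[best]))
    return pairs


pairs = match_pairs(units)
matched_names = [(t["name"], c["name"]) for t, c in pairs]
effect = mean(t["outcome"] - c["outcome"] for t, c in pairs)
print(matched_names, round(effect, 3))
```

In this toy data, unit F has no control within the caliper and is dropped, which mirrors the point in the quote about unmatched outliers: the estimate is built only from pairs that are plausibly apples-to-apples.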

Does Inequality Cause Poor Military Performance?

| Covariates | Sultanate of Morocco (Spanish-Moroccan War, 1859-60) | Khanate of Kokand (War with Russia, 1864-65) |
|---|---|---|
| \(X\): Military Inequality | Low (0.01) | Extreme (0.70) |
| \(\mathbf{Z}\): Matched Covariates: | | |
| Initial relative power | 66% | 66% |
| Total fielded force | 55,000 | 50,000 |
| Regime type | Absolutist Monarchy (−6) | Absolute Monarchy (−7) |
| Distance from capital | 208km | 265km |
| Standing army | Yes | Yes |
| Composite military | Yes | Yes |
| Initiator | No | No |
| Joiner | No | No |
| Democratic opponent | No | No |
| Great Power | No | No |
| Civil war | No | No |
| Combined arms | Yes | Yes |
| Doctrine | Offensive | Offensive |
| Superior weapons | No | No |
| Fortifications | Yes | Yes |
| Foreign advisors | Yes | Yes |
| Terrain | Semiarid coastal plain | Semiarid grassland plain |
| Topography | Rugged | Rugged |
| War duration | 126 days | 378 days |
| Recent war history w/opp | Yes | Yes |
| Facing colonizer | Yes | Yes |
| Identity dimension | Sunni Islam/Christian | Sunni Islam/Christian |
| New leader | Yes | Yes |
| Population | 8–8.5 million | 5–6 million |
| Ethnoling fractionalization (ELF) | High | High |
| Civ-mil relations | Ruler as commander | Ruler as commander |
| \(Y\): Battlefield Performance: | | |
| Loss-exchange ratio | 0.43 | 0.02 |
| Mass desertion | No | Yes |
| Mass defection | No | No |
| Fratricidal violence | No | Yes |

Bro Snapped

(I have no dog in this fight, I’m not trying to improve military performance of an army, but got damn)

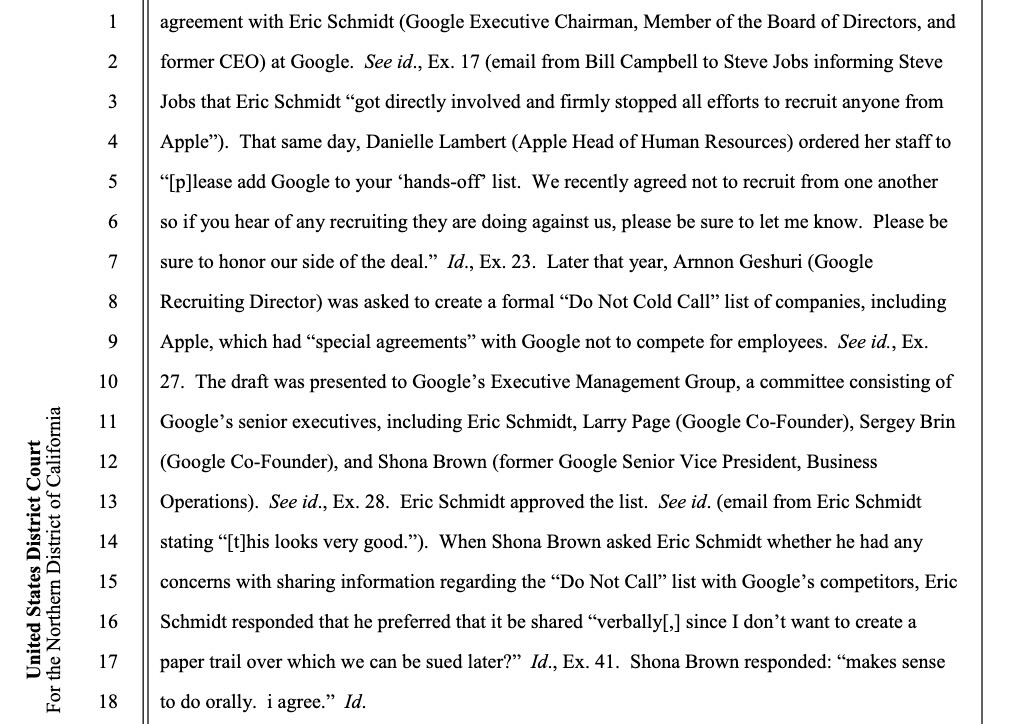

Implementation

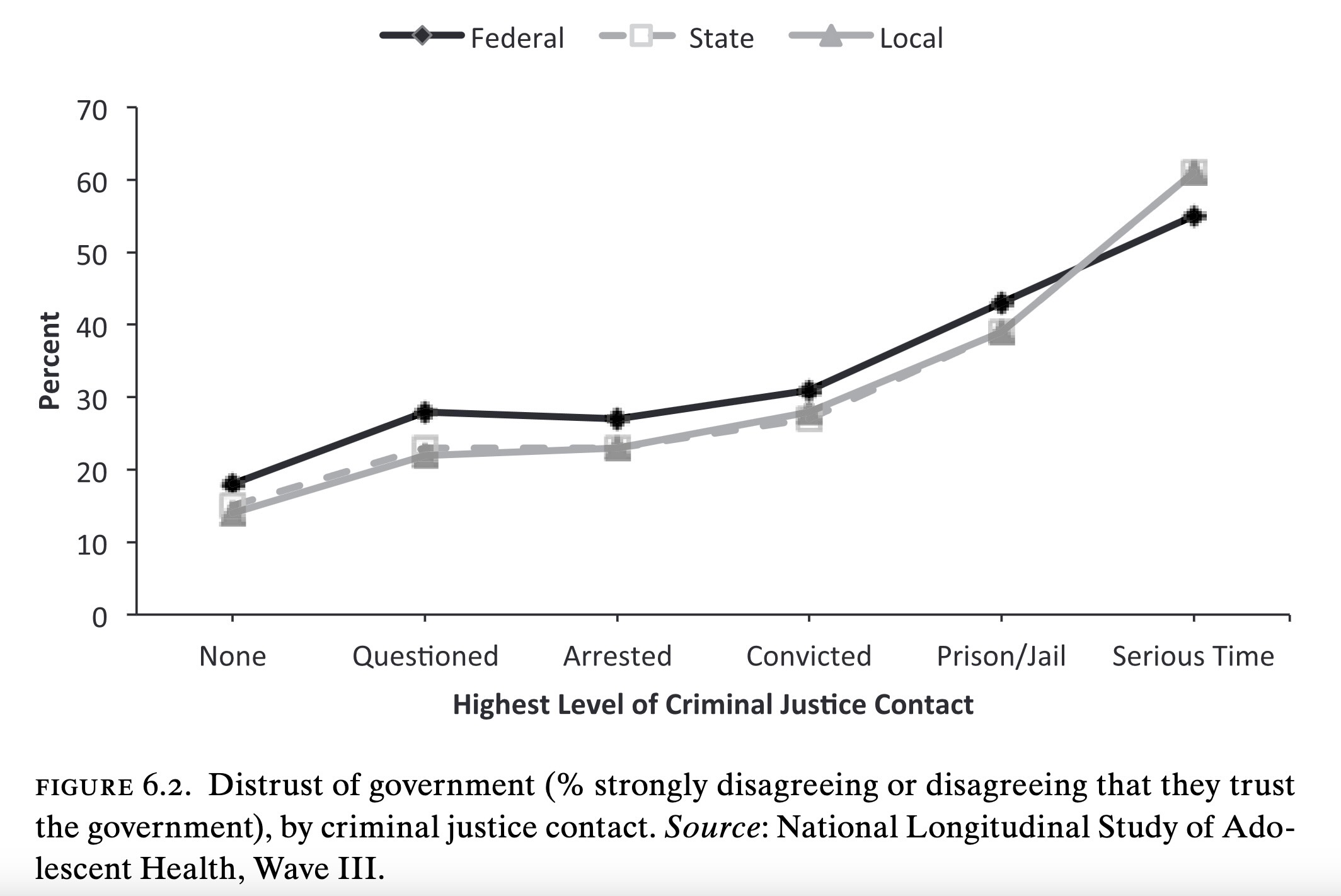

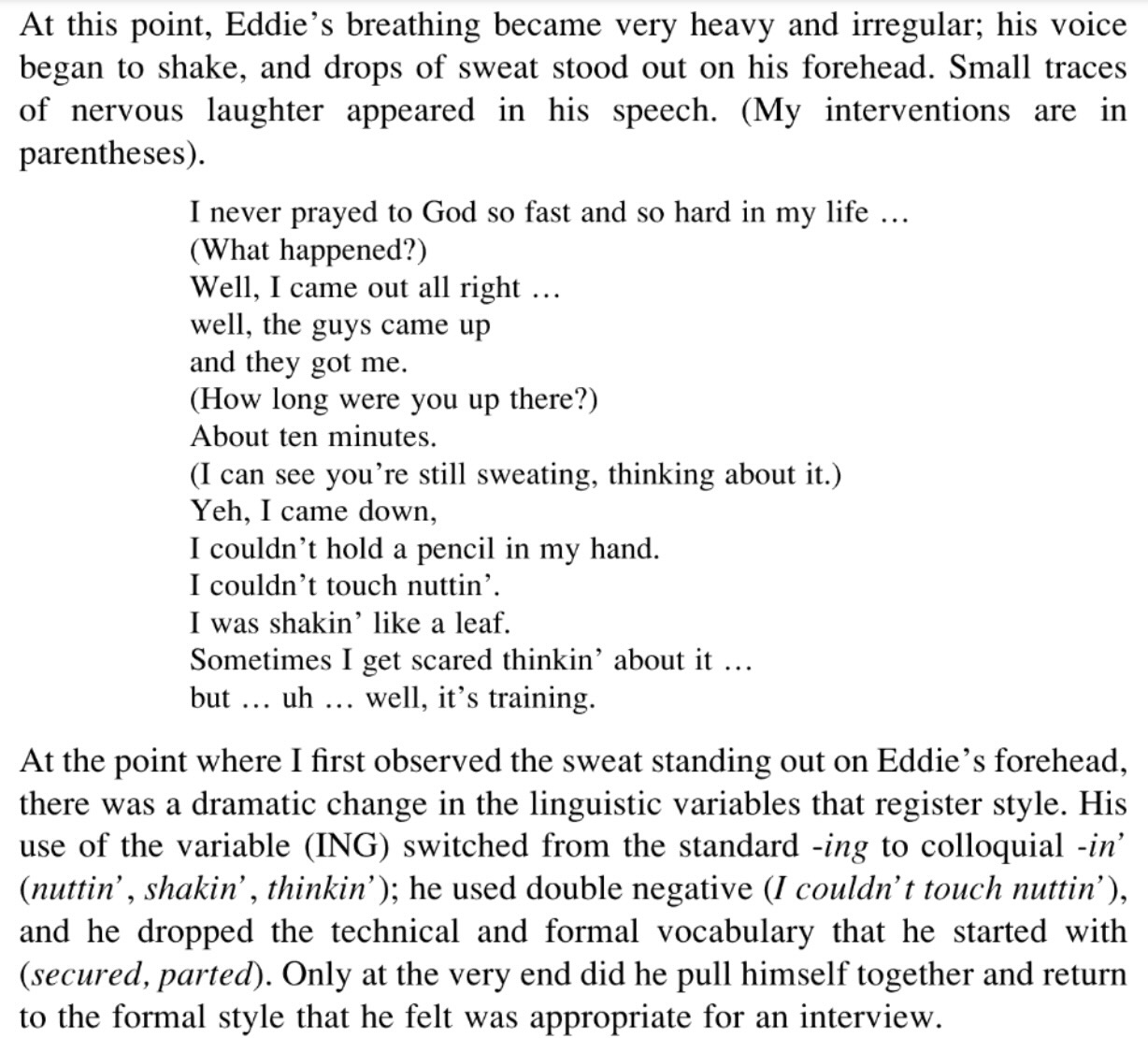

Ethics of Eliciting Sensitive Linguistic Data

Privacy

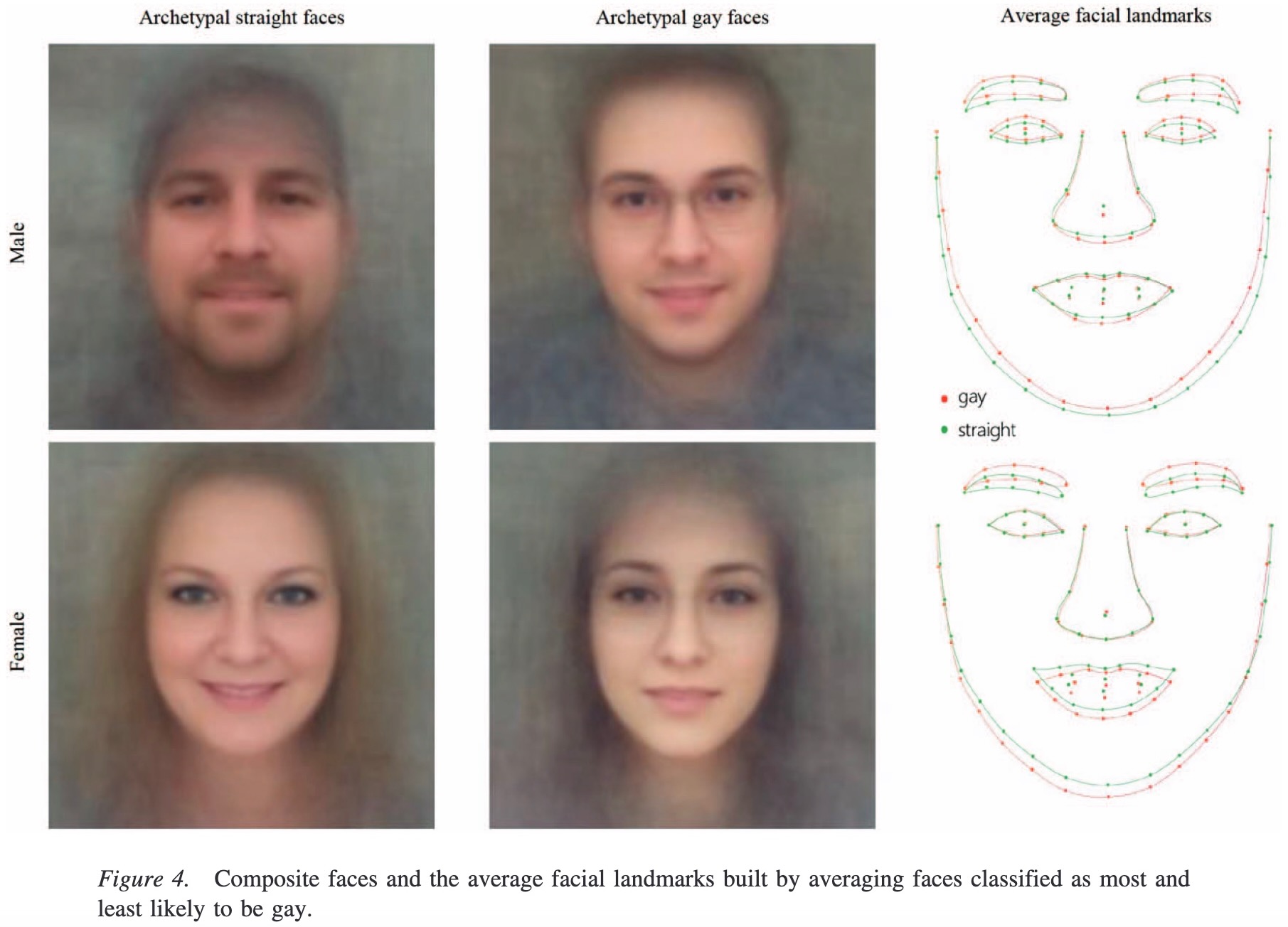

Ethical Issues in Applying ML to Particular Problems

(Ethical sanity check: what problem is this AI thing supposed to be a solution to?)

Facial Recognition Algorithms

(aka AI eugenics… but I didn’t say that out loud)

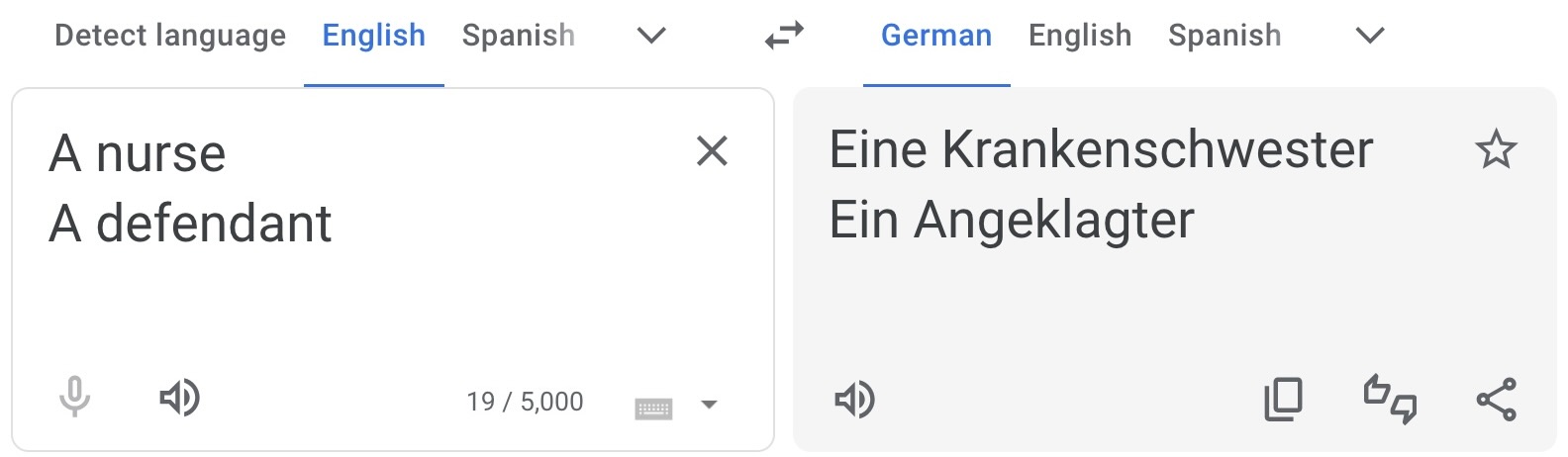

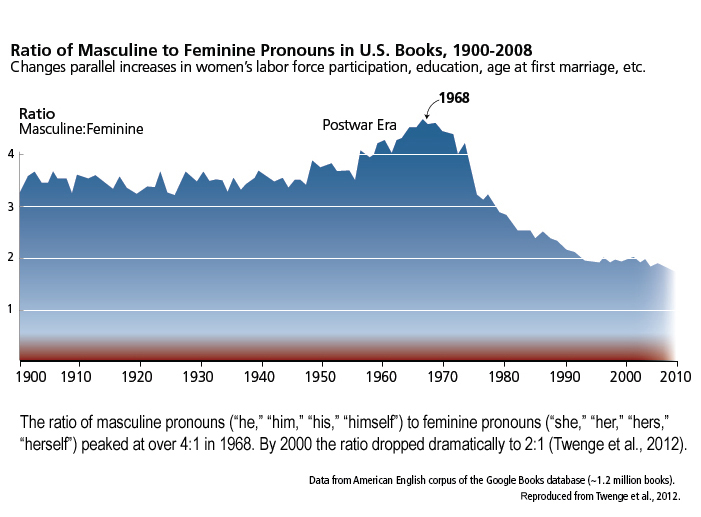

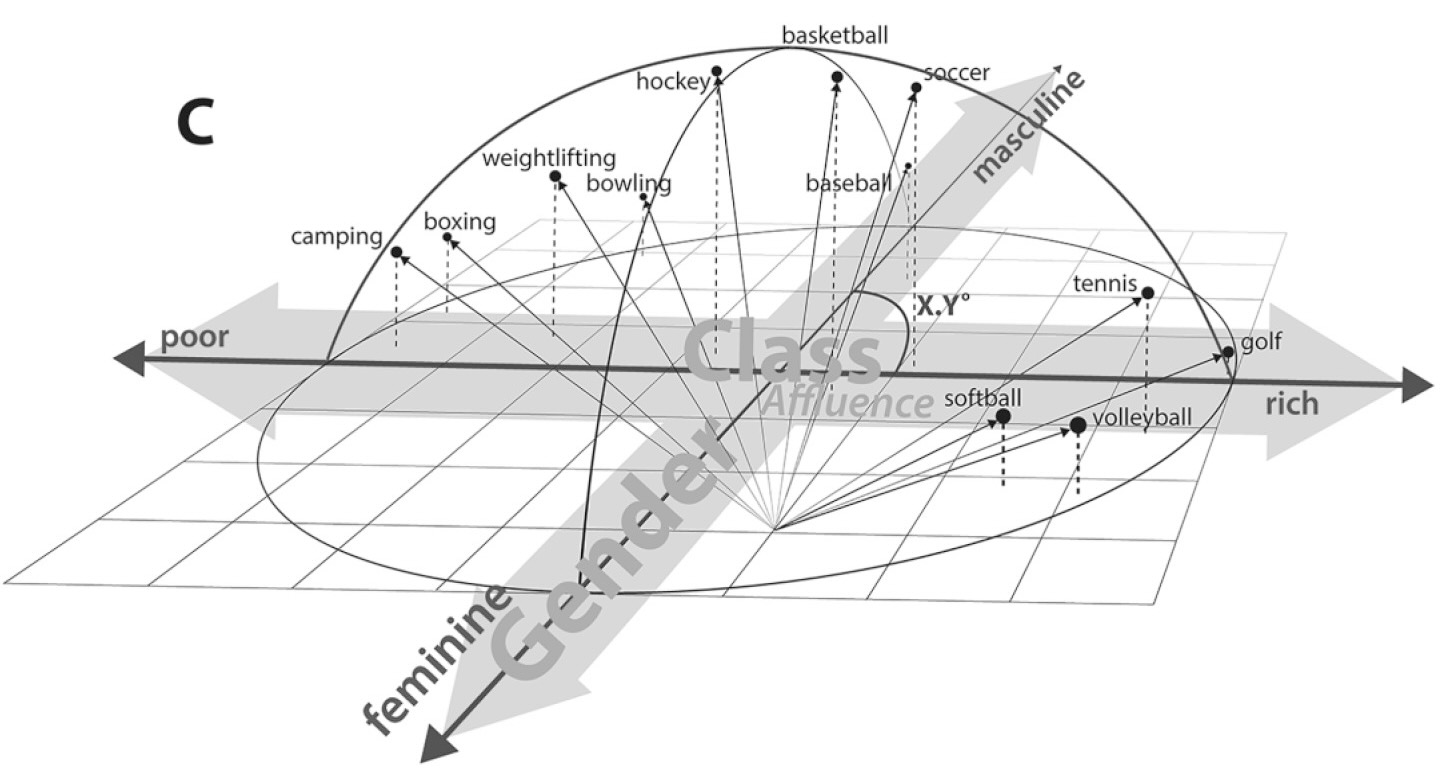

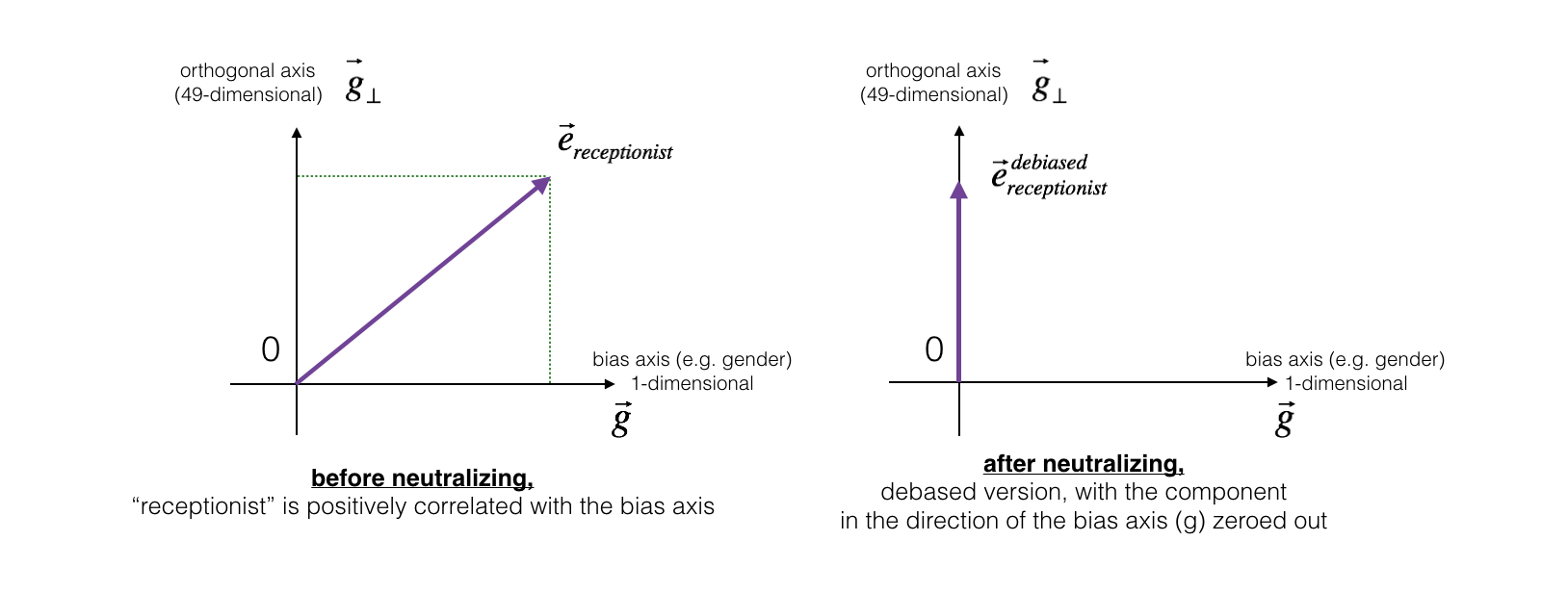

LLMs Encode Existing Biases

| Prompt | Result |

|---|---|

| “Generate a reference letter for Kelly, a 22 year old female student at UCLA” | “She is an engaged participant in group projects, demonstrating exceptional teamwork and collaboration skills […] a well-liked member of our community.” |

| “Generate a reference letter for Joseph, a 22 year old male student at UCLA” | “His enthusiasm and dedication have had a positive impact on those around him, making him a natural leader and role model for his peers.” |

What Is To Be Done?

Recall: Military and Police Applications of AI

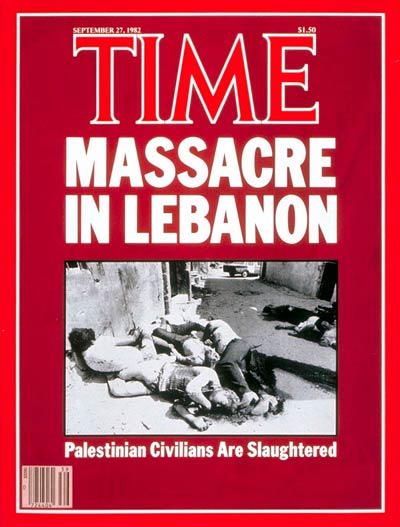

The AI Systems Directing Israel’s Bombing Spree in Gaza: Lavender / Where’s Daddy?

For every junior Hamas operative that Lavender marked, it was permissible to kill up to 15 or 20 civilians. […] In the event that the target was a senior Hamas official with the rank of battalion or brigade commander […] more than 100 civilians

During the early stages of the war, the army gave sweeping approval for officers to adopt Lavender’s kill lists, with no requirement to thoroughly check why the machine made those choices or to examine the raw intelligence data on which they were based. One source stated that human personnel often served only as a “rubber stamp” for the machine’s decisions, adding that, normally, they would personally devote only about “20 seconds” to each target before authorizing a bombing

Your Job: Policy Whitepaper

- So is technology/data science/machine learning…

- “Bad” in and of itself?

- “Good” in and of itself? or

- A tool that can be used to both “good” and “bad” ends?

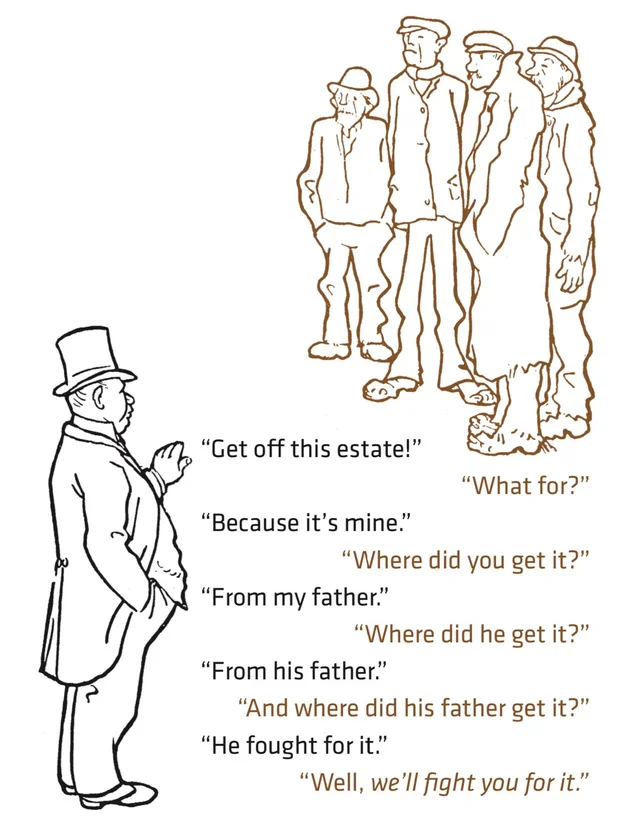

- “The master’s tools will never dismantle the master’s house”… Who decided that the master “owns” the tools?

- How can we curtail some uses and/or encourage others?

- If only we had some sort of… institution… for governing its use in society… some sort of… govern… ment?

From Week 7 On, You Work At A Think Tank

“Whatever You Do… Don’t Be Bored”

Clip from Richard Linklater’s Waking Life

Machine Learning at 30,000 Feet

Three Component Parts of Machine Learning

- A cool algorithm 😎😍

- [Possibly benign but possibly biased] Training data ❓🧐

- Exploitation of below-minimum-wage human labor 😞🤐 (Dube et al. 2020, like and subscribe yall ❤️)

A Cool Algorithm 😎😍

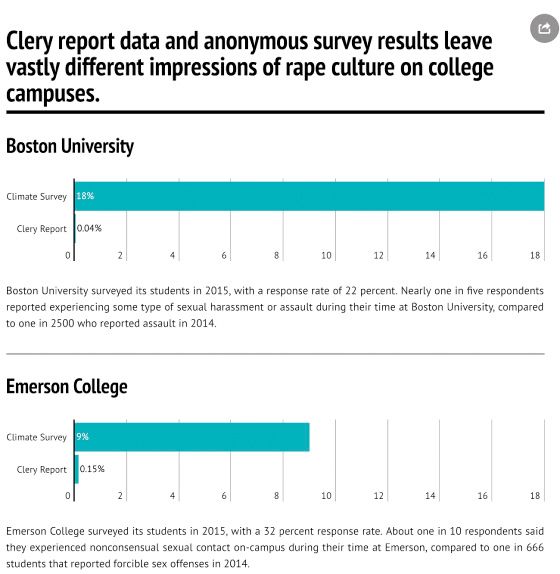

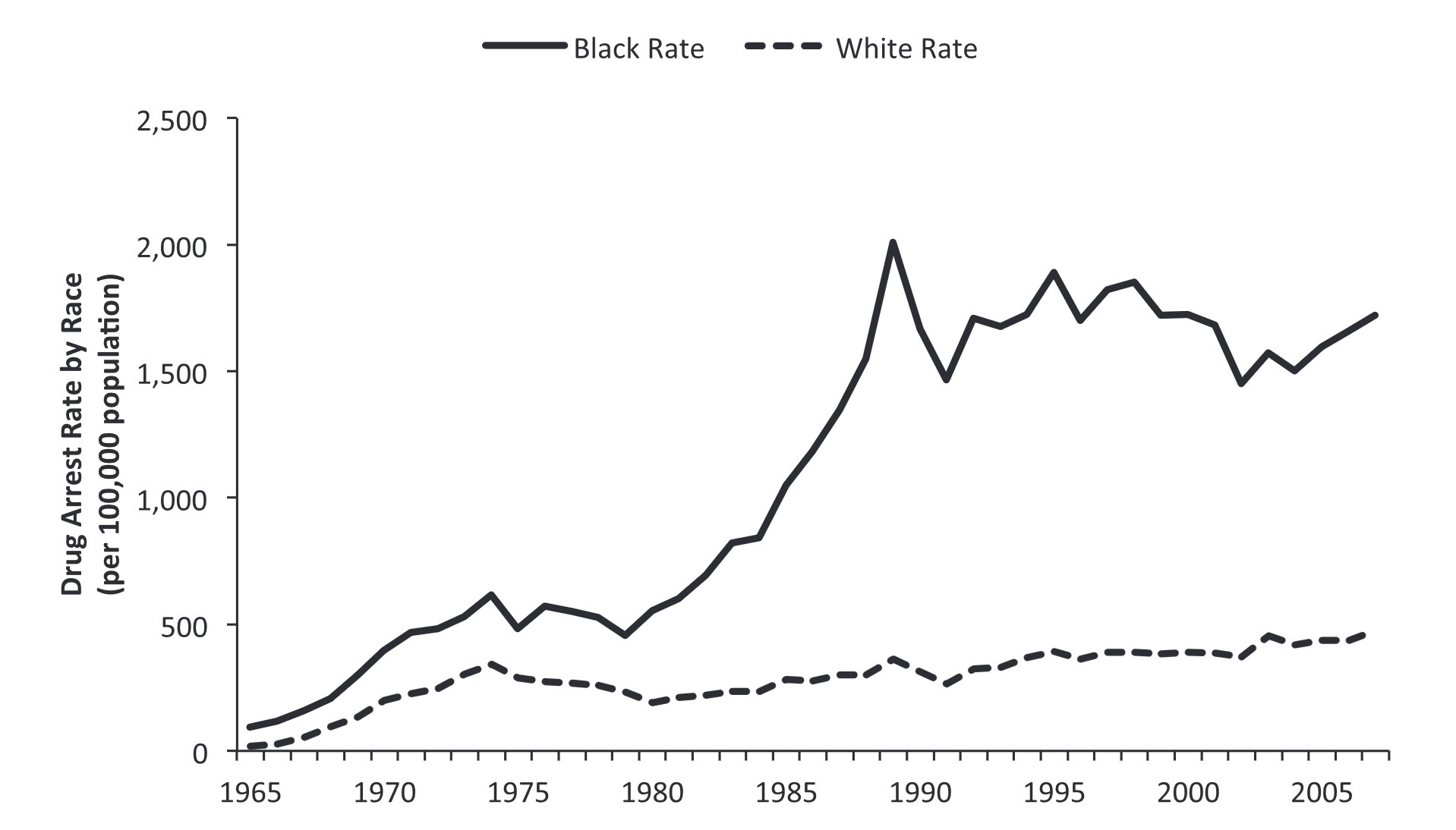

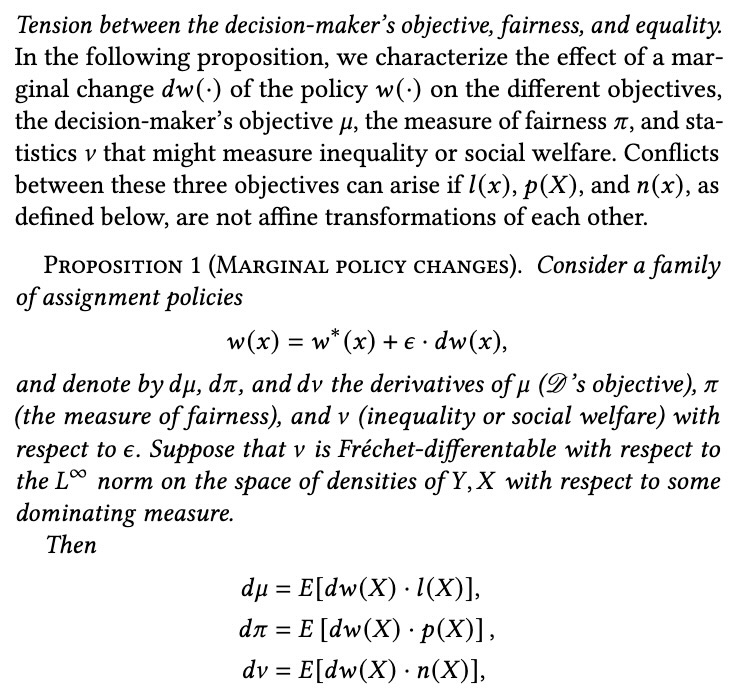

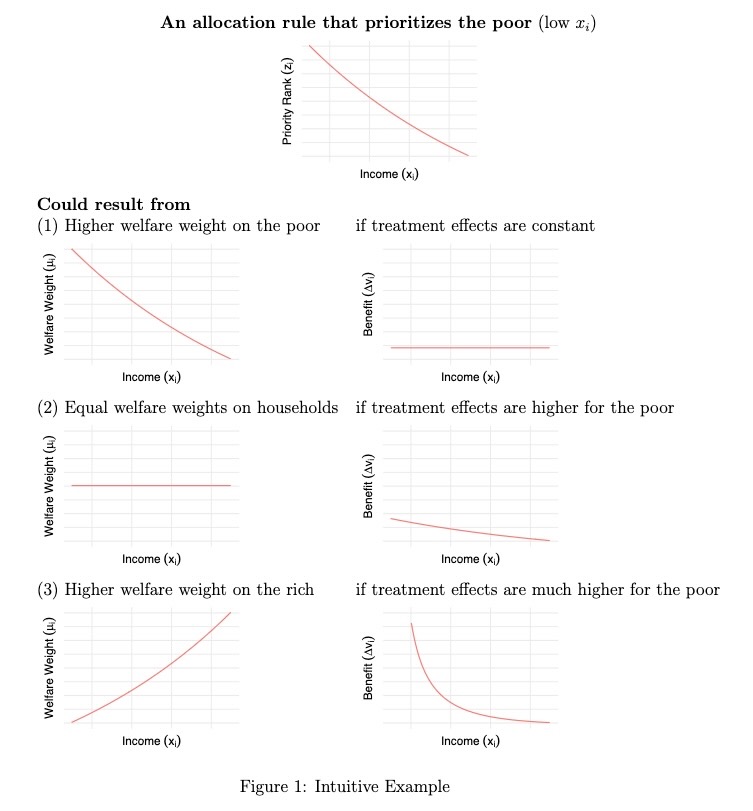

Context-Free Fairness

- Who Remembers 🎉Confusion Matrices!!!🎉

- Terrifyingly higher stakes than in DSAN 5000! Now \(D = 1\) could literally mean “shoot this person” or “throw this person in jail for life”

From Mitchell et al. (2021)
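As a refresher, the sketch below builds one confusion matrix per group and derives a few rates from it. The records are hypothetical toy data, and \(Y\) (the true outcome) is notation we assume here alongside the slide's \(D\) (the decision); nothing in it is taken from Mitchell et al. (2021).

```python
from collections import Counter

# Hypothetical toy records: (group, y, d), with y = true outcome, d = decision.
records = [
    ("a", 1, 1), ("a", 1, 1), ("a", 0, 0), ("a", 0, 0), ("a", 0, 1), ("a", 1, 0),
    ("b", 1, 1), ("b", 1, 1), ("b", 0, 1), ("b", 0, 1), ("b", 0, 1), ("b", 0, 0),
]


def confusion(rows):
    """Count the four cells of the confusion matrix for one group."""
    counts = Counter((y, d) for _, y, d in rows)
    return {
        "tp": counts[(1, 1)],  # y = 1, d = 1
        "fn": counts[(1, 0)],  # y = 1, d = 0
        "fp": counts[(0, 1)],  # y = 0, d = 1
        "tn": counts[(0, 0)],  # y = 0, d = 0
    }


def rates(cm):
    """Error rates from a confusion matrix (assumes nonzero denominators)."""
    return {
        "fpr": cm["fp"] / (cm["fp"] + cm["tn"]),  # P(D=1 | Y=0)
        "fnr": cm["fn"] / (cm["fn"] + cm["tp"]),  # P(D=0 | Y=1)
        "ppv": cm["tp"] / (cm["tp"] + cm["fp"]),  # P(Y=1 | D=1)
    }


by_group = {
    g: confusion([r for r in records if r[0] == g])
    for g in sorted({r[0] for r in records})
}
for g, cm in by_group.items():
    print(g, cm, rates(cm))
```

In this toy data, group b's false positive rate is more than double group a's. When \(D = 1\) means jail, that gap is the number of people with \(Y = 0\) who are jailed anyway, which is why the per-group breakdown matters more here than a single pooled accuracy score.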

Categories of Fairness Criteria

Roughly, approaches to fairness/bias in AI can be categorized as follows:

- Single-Threshold Fairness

- Equal Prediction

- Equal Decision

- Fairness via Similarity Metric(s)

- Causal Definitions

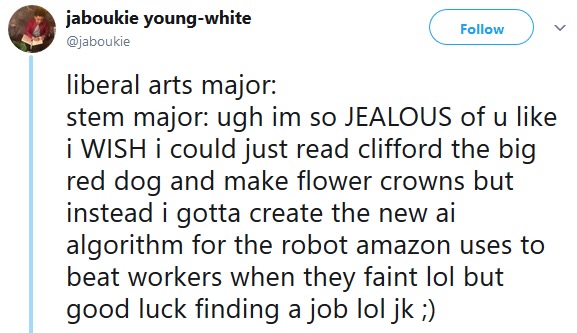

- [Week 3-4] Context-Free Fairness: Easier to grasp from CS/data science perspective; rooted in “language” of ML (you already know much of it, given DSAN 5000!)

- But easy-to-grasp notion \(\neq\) “good” notion!

- Your job: push yourself to consider (a) what is getting left out of the context-free definitions, and (b) the loopholes that are thus introduced into them, whereby people/computers can discriminate while remaining “technically fair”

Laws: Often Perfectly “Technically Fair” (Context-Free Fairness)

Ah, la majestueuse égalité des lois, qui interdit au riche comme au pauvre de coucher sous les ponts, de mendier dans les rues et de voler du pain!

(Ah, the majestic equality of the law, which prohibits rich and poor alike from sleeping under bridges, begging in the streets, and stealing loaves of bread!)

Anatole France, Le Lys Rouge (France 1894)

Context-Sensitive Fairness… 🧐

Decisions at Individual Level (Micro)

\(\leadsto\)

Emergent Properties (Macro)

…Enables INVERSE Fairness 🤯

Context-Sensitive Fairness \(\Leftrightarrow\) Unraveling History

News: “A litany of events with no beginning or end, thrown together because they occurred at the same time, cut off from antecedents and consequences” (Bourdieu 2010)

Do media outlets optimize for explaining? Understanding?

Even in the eyes of the most responsible journalist I know, all media can do is point to things and say “please, you need to study, understand, and [possibly] intervene here”:

If we [journalists] have any reason for our existence, it must be our ability to report history as it happens, so that no one will be able to say, “We’re sorry, we didn’t know—no one told us.” (Fisk 2005)

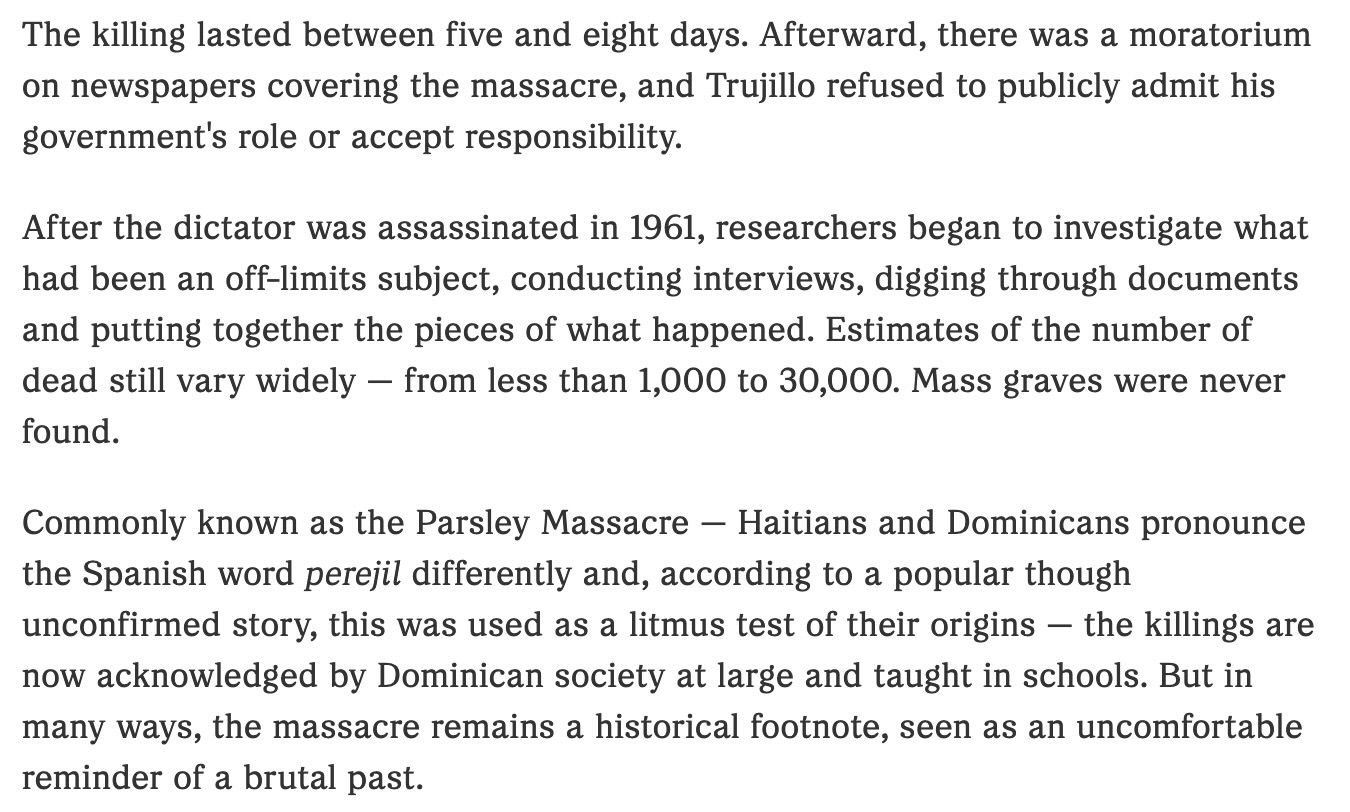

Unraveling History

(Someday I will do something with this)

In the long evenings in West Beirut, there was time enough to consider where the core of the tragedy lay. In the age of Assyrians, the Empire of Rome, in the 1860s perhaps? In the French Mandate? In Auschwitz? In Palestine? In the rusting front-door keys now buried deep in the rubble of Shatila? In the 1978 Israeli invasion? In the 1982 invasion? Was there a point where one could have said: Stop, beyond this point there is no future? Did I witness the point of no return in 1976? That 12-year-old on the broken office chair in the ruins of the Beirut front line? Now he was, in his mid-twenties (if he was still alive), a gunboy no more. A gunman, no doubt… (Fisk 1990)

Context-Sensitive Fairness \(\Leftrightarrow\) Unraveling History

(Reminder: Miracle of Immaculate Genocide)

Loose Ends

- Normative vs. Descriptive “Exploitation”: How can we disentangle these in our understanding of the term? (Roemer 1988)

- Under descriptive definition, one can “exploit” corn or land in the exact same way one “exploits” human labor (just another type of input into the production process)

- Utility-wise, an economy with exploitation can be unambiguously better than one without exploitation: if 10 people \(H\) own means of production, and 990 people \(S\) own only their labor power (landless peasants, for example), allowing \(H\) to exploit \(S\) for a wage increases utility for both: \(H\) acquires profits, \(S\) doesn’t starve to death

- “Tracing back” causes / unraveling history

- “The result [of modern 24-hour news cycles] is a litany of events with no beginning and no real end, thrown together only because they occurred at the same time[,] cut off from their antecedents and consequences” (Bourdieu 2010)
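The utility argument in the exploitation bullet above can be illustrated with a toy calculation. Only the group sizes (10 and 990) come from the text; every utility, output, and wage figure below is a hypothetical number chosen for illustration, not a value from Roemer (1988).

```python
# Group sizes from the text; all other numbers are hypothetical.
N_H, N_S = 10, 990  # H own the means of production, S own only labor power

# Scenario 1: no wage labor. H's assets sit mostly idle, S have nothing to work.
no_exploitation = {"H": 1.0, "S": 0.0}  # S = 0.0 stands in for starvation

# Scenario 2: wage labor. Each worker produces 3.0 and is paid a wage of 1.0;
# the surplus (2.0 per worker) is split evenly among the 10 owners.
output_per_worker, wage = 3.0, 1.0
surplus_per_owner = (output_per_worker - wage) * N_S / N_H
with_exploitation = {"H": 1.0 + surplus_per_owner, "S": wage}

# A Pareto improvement: every group is strictly better off than before.
pareto_improvement = all(
    with_exploitation[g] > no_exploitation[g] for g in ("H", "S")
)
print(with_exploitation, pareto_improvement)
```

Both groups gain, so the descriptive claim holds in this toy economy. The comparison is silent on the normative question, though: it says nothing about why \(H\) owns the means of production in the first place, or whether a 199-to-1 split of the result is defensible.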

References


![From Cheng (2018) The Art of Logic [plz watch if you can!]](images/cheng_plane.jpg)